Customer focus

When we hear that a company is launching an ML feature, building an ML product or hiring an ML team, we get a little cynical. Sprinkle some ML and AI into your presentation to VCs or managers and suddenly you’re hotly in demand.

At Voiceflow we don’t see ML as a magic elixir. It’s closer to the search for one: something that can quickly turn into a wild goose chase if you aren’t focused and deliberate. So to keep us from running in circles we focus on our customers and ask how adding ML can make their lives easier and more productive.

We also recognize the opportunity cost of working on ML: every ML engineer means less funding to hire people to work on our current product and fix current problems.

So we’re working closely with our customers to see how we can bring some simple but inspiring features to the platform that leverage our understanding of voice conversations. We’re focused on a highly active segment of users who have raised their hands to become beta users and are passionate about the platform.

After initial customer tests we can review the qualitative and quantitative data and give the project more time or shelve it.

Rapid prototyping

The beauty of software is the ability to automate and reuse. The darker side of beauty is perfectionism, and in the software world that means building for scalability and impeccable code quality when all you need is a prototype.

This perfectionism is often in direct conflict with customer focus in the early days of a project since your goal is to validate, iterate and figure out what to build. As developers we know the struggle of balancing shipping features, minimizing technical debt and evolving requirements.

When we want to develop a new capability at Voiceflow, we try to get there as quickly as possible both on the UX and technical side. This has led to us building some tools outside our core application to decouple from the application team and to get results faster. Once we validate that our ML model works and has a positive UX, we want to start integrating into our stack and building higher fidelity experiences.

Infrastructure as code, templates and testing

After an iteration of this process we had built some tools and templates along the way. These include customer facing tools, helper functions and a standardized way to create new models. When an ML Engineer at Voiceflow wants to create a new model, all they have to do is install our ML CLI and run ml create model -n my-model, and a sample model will be generated for them. Not only is it generated, it can immediately be deployed with ml deploy model -n my-model.
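To make the command shape concrete, here is a minimal sketch of how a CLI with this layout could be parsed using Python's argparse. It is not Voiceflow's implementation: only the two commands (ml create model -n and ml deploy model -n) come from the text above, while the parser structure, function names and returned messages are assumptions made for illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser for `ml <action> model -n <name>` (hypothetical layout)."""
    parser = argparse.ArgumentParser(prog="ml")
    actions = parser.add_subparsers(dest="action", required=True)
    for action in ("create", "deploy"):
        action_parser = actions.add_parser(action)
        resources = action_parser.add_subparsers(dest="resource", required=True)
        model_parser = resources.add_parser("model")
        # Only the short -n flag appears in the post, so that is all we define.
        model_parser.add_argument("-n", dest="name", required=True)
    return parser


def run(argv: list[str]) -> str:
    """Dispatch a parsed command. The real work (templating, deploying) is stubbed."""
    args = build_parser().parse_args(argv)
    if args.action == "create":
        return f"generated sample model '{args.name}' from template"
    return f"deployed model '{args.name}'"


print(run(["create", "model", "-n", "my-model"]))
print(run(["deploy", "model", "-n", "my-model"]))
```

Keeping create and deploy behind the same name argument is what lets a freshly generated model be deployed without any extra configuration step in between.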

We invested time in the ML CLI because we want to focus on deploying models into prod as quickly as possible, not chasing environment variables and parameters that linger after careless copy-pasting. We’re aiming to automate the boring and error-prone parts of ML, allowing us to focus on customers and outcomes.

Likewise, we want developers and data scientists to be able to create isolated end to end Machine Learning environments quickly, which is why we created MLEnvs. Using the ML CLI you can create a new environment in 15 minutes, based on our Terraform templates. This allows us to try new platform features while keeping stable environments from being contaminated with unstable additions.

Each Model and MLEnv is generated using Python and is backed by a set of Terraform and Terragrunt modules. Staging and Prod are always based on the main branch, while Dev environments allow branch-specific models to be deployed. This allows our ML team to work in parallel within the same MLEnv when testing changes or doing A/B tests.
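The branch rule described above can be sketched in a few lines of Python. This is an illustrative guess at the logic, not the platform's actual code: the environment names, the function names and the naming scheme for branch-specific deployments are all assumptions.

```python
import re

# Assumed environment names; the post only says "Staging", "Prod" and "Dev".
STABLE_ENVS = {"staging", "prod"}


def resolve_branch(env: str, requested_branch: str = "main") -> str:
    """Return the branch a deployment may use in the given environment."""
    if env in STABLE_ENVS:
        if requested_branch != "main":
            raise ValueError(f"{env} only deploys from main, got '{requested_branch}'")
        return "main"
    # Dev environments accept any branch.
    return requested_branch


def deployment_id(model: str, env: str, branch: str = "main") -> str:
    """Hypothetical naming scheme so branch-specific models can coexist in one MLEnv."""
    branch = resolve_branch(env, branch)
    if branch == "main":
        return model
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    return f"{model}--{slug}"


print(deployment_id("my-model", "prod"))
print(deployment_id("my-model", "dev", "feature/new-tokenizer"))
```

Giving each branch its own deployment identifier is one simple way to let two engineers test different versions of the same model side by side in a single Dev environment.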

A Real Time Platform

One of Voiceflow’s key features is the ability for a team to collaborate and build voice apps in real time. To keep up with our users, we need to provide real time insights from our ML Models, so we incorporated this as a key platform requirement.

We’re using sockets and an event driven architecture to handle user requests, process them and respond. This is a relatively niche approach compared to many ML systems, which rely on REST endpoints for inference, and it is another reason we decided to build the ML Platform in house.
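For readers more familiar with request/response inference, the event driven pattern can be sketched with Python's asyncio. Everything here is a stand-in: the in-memory queues take the place of socket connections, the event fields are made up, and fake_model is a toy rule rather than a real model.

```python
import asyncio


def fake_model(text: str) -> str:
    """Toy stand-in for a real model: labels an utterance by its punctuation."""
    return "question" if text.strip().endswith("?") else "statement"


async def inference_worker(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Consume request events as they arrive and emit result events."""
    while True:
        event = await inbox.get()
        if event is None:  # sentinel: no more events
            break
        result = {"request_id": event["request_id"], "label": fake_model(event["text"])}
        outbox.put_nowait(result)


async def demo(texts: list[str]) -> list[dict]:
    # The queues stand in for the socket layer between the app and the platform.
    inbox, outbox = asyncio.Queue(), asyncio.Queue()
    worker = asyncio.create_task(inference_worker(inbox, outbox))
    for request_id, text in enumerate(texts):
        await inbox.put({"request_id": request_id, "text": text})
    await inbox.put(None)
    await worker
    return [outbox.get_nowait() for _ in range(outbox.qsize())]


print(asyncio.run(demo(["Is the store open?", "Book a table"])))
```

The difference from a REST endpoint is that the caller does not block on a response: it publishes an event and picks up the result whenever the worker emits it, which suits a collaborative editor where many small updates arrive continuously.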

Separate Model Development and Deployment

This one may seem controversial, given the current movement toward MLOps and unifying ML processes.

There are three reasons for this idea.

The first is the idea of starting somewhere. When you draw out a detailed, end to end MLOps architecture it gets overwhelming very quickly. The last time I did so, I had 50+ boxes, 100+ arrows and had to divide it into 14 subsections to keep it organized. So when building for Voiceflow, logically separating model development and deployment makes it easier to focus on building a V1 of a platform.

The second reason is that model development is a much more mature space than model deployment and serving. Many platforms like Databricks, SageMaker, Azure ML and Vertex AI are adding features to create a better model hosting experience, but many of these features are still immature or focused on the model development portion. Decoupling the two components therefore allows us to build in house where the industry is still maturing while leveraging key SaaS and cloud offerings where it makes sense.

The third reason is that model development and deployment are logically separated, but with the intention of integrating seamlessly. We are still working towards a fully integrated MLOps model, but it’s too early to tightly couple to a given model development framework and methodology. Once we have enough production data, we can make more definite decisions about where we need key integrations between model development and deployment. For now we assume that models will be developed in some pre-existing (cloud/local) environment, which must supply the platform with this information before deployment.

Leverage and contribute to open source

If we were building an ML platform in 2016 our approach would look very different. Tooling and model architectures were much less common in the open source community, with TensorFlow recently released, and PyTorch and transformers still early in their development.

Now we have many large language models available to us, countless ML frameworks and Python packages galore. This has brought down the barrier to entry immensely within the ML space and we’re grateful to all the developers and companies that have contributed to a flourishing community.

As we continue to grow as a team we will increase our open source contributions through articles, code snippets and packages. Our first open source post came from our ML CLI development, where we shared some key ideas and a repo for building a CLI. We also posted about data ingestion using Terraform and Google Pub/Sub.

Hiring for three core technical skills

Many times when companies are starting their ML or Data Science teams they don’t know what they want. They want a mixture of business outcomes and technological flair with poorly defined requirements.

For a startup it’s even harder because as a company you don’t yet know what you want from a product. So we stick to three things that we know are true.

- We want ML to improve our platform and customers’ voice experience

- We need to develop capabilities in the NLU, NLP and Recommender system spaces

- We need flexibility from our platform and people to accommodate rapidly changing use cases

As the company and market mature, our core ML offerings will change, but we’ll continue to stick to these ideas to guide our team’s growth.

So what technical skills are we looking to hire for?

- Data science in our core competency areas (NLU, NLP, Rec Systems)

- Data engineering

- Cloud/Platform Engineering

The important thing we recognize is that there are very few people with expertise in all three areas; they are the unicorns of this space. So instead we are looking for people with strong experience in one of the three areas and exposure to the other two. Combined with the key value of flexibility, we are looking for people who are interested in solving interesting problems and will grow with the team.

Where we're at

We are currently three months into our ML Platform journey and wanted to share what we have built so far:

- V1 of an ML Platform that can deploy models end to end in 5 minutes

- A fairly comprehensive ML CLI that can handle versioning, model promotions and the creation of isolated ML Environments

- Five models exiting the R&D stage and two models in a customer validation stage

- A comprehensive ML Strategy

- A highly capable engineering team

We’re excited about what we’ve built, but we are even more excited about what’s to come.

Where we're going

As our team continues to evolve we want to focus on three things:

- Work closely with our core creators and enterprise customers to improve their user experience

- Build a world class ML platform that can scale to millions of users and a comprehensive set of use cases

- Contribute to the ML Community through articles, talks and open source projects

As we grow our team, we’d like people who are excited about these three things and bring a unique perspective to tackling these challenges. If that sounds like you, send us a message at engineering@voiceflow.com.

Wrapping up

After six months of building our ML platform, we wanted to share the thinking that has guided us so far and will guide our future endeavours.

Customer focus

When we hear that a company is launching a ML feature, building a ML product or hiring a ML team, we get a little cynical. Sprinkle some ML and AI into your presentation to VCs or managers and suddenly you’re hotly in demand.

At Voiceflow we don’t see ML as a magic elixir, rather it’s closer to the search for one: something that can quickly turn into a wild goose chase if you aren’t focused and deliberate. So to keep us from running in circles we focus on our customer, how can adding ML make their lives easier and more productive.

We also recognize the opportunity cost of working on ML, every ML engineer means less funding to hire people to work on our current product and fix current problems.

So we’re working closely with our customers to see how we bring some simple, but inspiring features to the platform that leverage our understanding of voice conversations. We’re focused on a highly active segment who’s raised their hand to become beta users and are passionate about the platform.

After initial customer tests we can review the qualitative and quantitative data and give the project more time or shelve it.

Rapid prototyping

The beauty of software is the ability to automate and reuse. The darker side of beauty is perfectionism, and in the software world it’s building scalability and impeccable code quality when all you need is a prototype.

This perfectionism is often in direct conflict with customer focus in the early days of a project since your goal is to validate, iterate and figure out what to build. As developers we know the struggle of balancing shipping features, minimizing technical debt and evolving requirements.

When we want to develop a new capability at Voiceflow, we try to get there as quickly as possible both on the UX and technical side. This has led to us building some tools outside our core application to decouple from the application team and to get results faster. Once we validate that our ML model works and has a positive UX, we want to start integrating into our stack and building higher fidelity experiences.

Infrastructure as code, templates and testing

After an iteration of this process we built some tools and templates along the way. These include customer facing tools, helper functions and a standardized way to create new models. When a ML Engineer at Voiceflow wants to create a new model all they to do is have our MLCLI install and then type ml create model -n my-model and a sample model will be generated for them. Not only is it generated, it can immediately be deployed with a ml deploy model -n my-model.

We invested time into the ML CLI because we want to focus on deploying models into prod as quickly as possible, not chasing environment variables and parameters that may linger through bad copy pasting. We’re aiming to automate the boring and error prone part of ML, allowing us to focus on customers and outcomes.

Likewise we want developers and data scientists to be able to create isolated end to end Machine Learning environments quickly, so that’s why we created MLEnvs. Using the ML CLI you can create a new environment in 15 minutes, based on our terraform templates. This allows us to try new platform features while stopping stable environments from being contaminated with unstable additions.

Each Model and MLEnv is generated using python and is backed by a set of terraform and terragrunt modules. Staging and Prod are always based off of the main branch, while Dev environments allow models to be deployed that are branch specific. This allows our ML team to work in parallel within the same MLEnv when testing changes or doing A/B tests.

A Real Time Platform

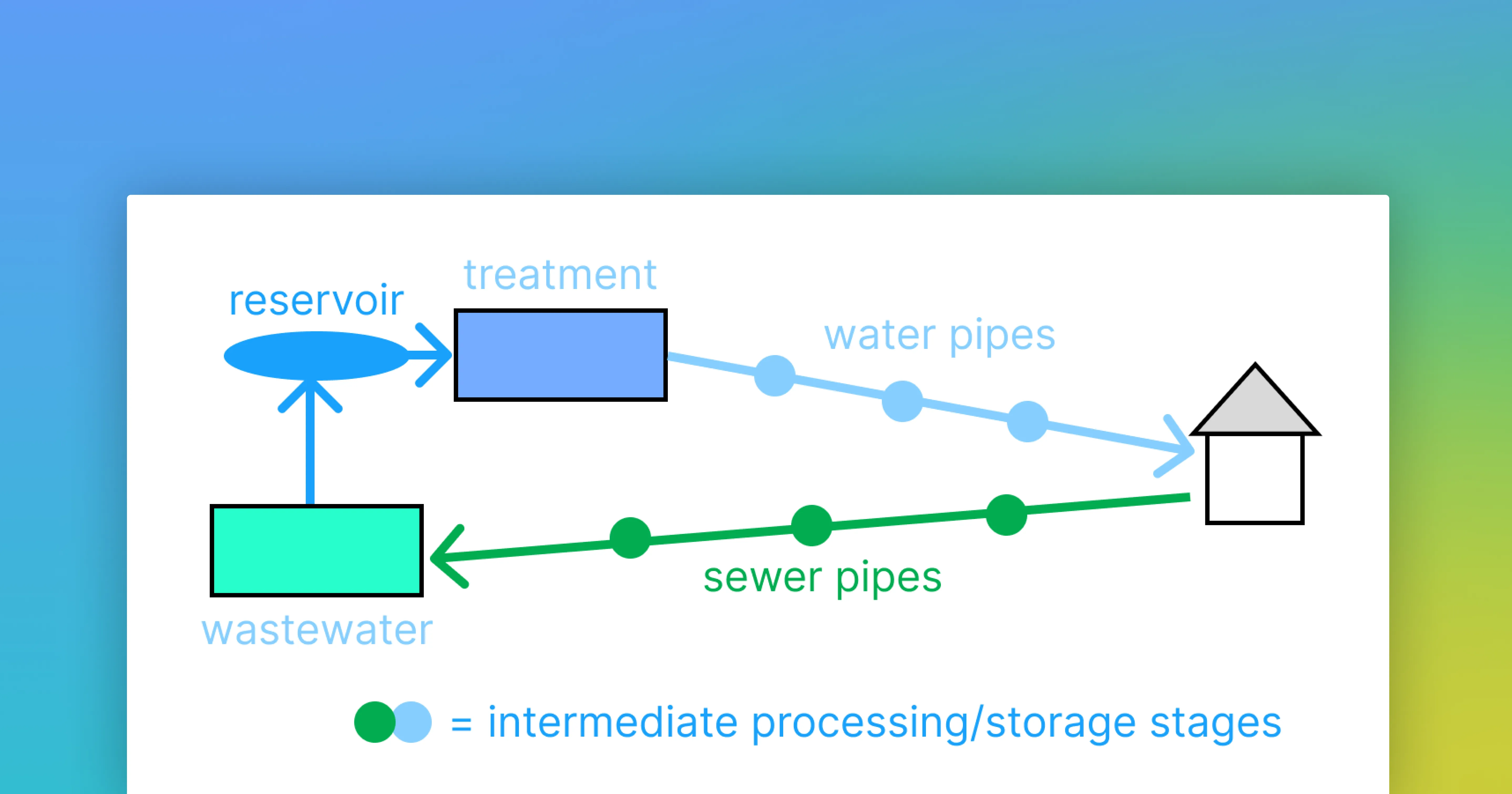

One of Voiceflow’s key features is the ability for a team to collaborate and build voice apps in real time. To keep up with our users, we need to provide real time insights from our ML Models, so we incorporated this as a key platform requirement.

We’re using sockets and an event driven architecture to handle user requests, process them and respond. This is a relatively niche approach to many ML systems that rely on REST endpoints for inference, which is another reason we decided to build the ML Platform in house.

Separate Model Development and Deployment

This one may seem controversial, given the current movement on MLOps and unifying ML processes.

There are a couple reasons for this idea.

The first is the idea of starting somewhere. When you draw out a detailed, end to end MLOps architecture it gets overwhelming very quickly. The last time I did so, I had 50+ boxes, 100+ arrows and had to divide it into 14 subsections to keep it organized. So when building for Voiceflow, logically separating model development and deployment makes it easier to focus on building a V1 of a platform.

The second reason is that model development is a much more mature space than model deployment and serving. Many platforms like Databricks, Sagemaker, Azure ML and Vertex AI are adding features to create a better model hosting experience, but many are still premature or focused on the model development portion. Due to this situation, decoupling these two components will allow us to focus on building in house where the industry is still maturing while leveraging key SAAS and cloud offerings where it makes sense.

The third reason is that model development and deployment are logically separated, but with the intention of integrating seamlessly. We are still working towards a fully integrated MLOps model, but it’s too early to tightly couple to a given model development framework and methodology. Once we have enough production data, we can make more definite decisions of where we need key integrations between model development and deployment. For now we assume that models will be developed in some pre-existing (cloud/local) environment, which must supply the platform with this information before deployment.

Leverage and contribute to open source

If we were building a ML platform in 2016 our approach would look very different. Tooling and model architectures were much less common in the open source community, with tensorflow recently released, and pytorch and transformers still early in their development.

Now we have many large language models available to us, countless ML frameworks and python packages galore. This has brought down the barrier to entry immensely within the ML space and we’re grateful to all the developers and companies which have contributed to a flourishing community.

As we continue to grow as a team we will increase our open source contributions through articles, code snippets and packages. Our first open source post came from our MLCLI development where we shared some key ideas and a repo of building a CLI. We also posted about data ingestion using Terraform and Google PubSub.

Hiring for three core technical skills

Many times when companies are starting their ML or Data Science teams they don’t know what they want. They want a mixture of business outcomes and technological flare with poorly defined requirements.

For a startup it’s even harder because as a company you don’t know what you want yet from a product. So we stick to three things that we know are true.

- We want ML to improve our platform and customers’ voice experience

- We need to develop capabilities in the NLU, NLP and Recommender system spaces

- We need flexibility from our platform and people to accommodate rapidly changing use cases

As the company and market matures, our core ML offerings will change, but we’ll continue to stick to these ideas that will guide our team’s growth.

So what technical skills are we looking to hire for?

- Data science in our core competency areas (NLU, NLP, Rec Systems)

- Data engineering

- Cloud/Platform Engineering

The important thing we recognize is that there are very few people with expertise in all three areas, they are unicorns of this space. So instead we are looking for people with strong experience in one of the three areas, and exposure to the other two. Combined with the key value of flexibility, we are looking for people who are interested in solving interesting problems and will grow with the team.

Where we're at

We are currently three months into our ML Platform journey and wanted to share what we have built so far:

- V1 of a ML Platform that can deploy models end to end in 5 min

- A fairly comprehensive ML CLI that can handle versioning, model promotions and the creation of isolated ML Environments

- Five models exiting the R&D stage and two models in a customer validation stage

- A comprehensive ML Strategy

- A highly capable engineering team

We’re excited about what we’ve built, but we are even more excited about what’s to come.

Where we're going

As our team continues evolve we want to focus on three things:

- Work closely with our core creators and enterprise customers to improve their user experience

- Build a world class ML platform that can scale to millions of users and a comprehensive set of use cases.

- Contributing to the ML Community through articles, talks and open source projects

As we grow our team, we’d like people who are excited about these three things and bring a unique perspective to tackling these challenges. If you’re excited about these things send us a message at engineering@voiceflow.com

Wrapping up

After six months of building our ML platform, we wanted to share the thinking that has guided us so far and will guide our future endeavours.

.svg)