Useful prompts for prompt chaining

Before we get ahead of ourselves, let’s first walk through the types of use cases where LLMs can be used for more natural conversations:

- Intent classification

- General conversation classification

- Entity capture

- Re-prompting

- Personas

We’ll use each of these techniques in our project. The examples below are from the OpenAI Playground, but they can also be replicated in the Voiceflow generate step or in other tools.

Intent classification

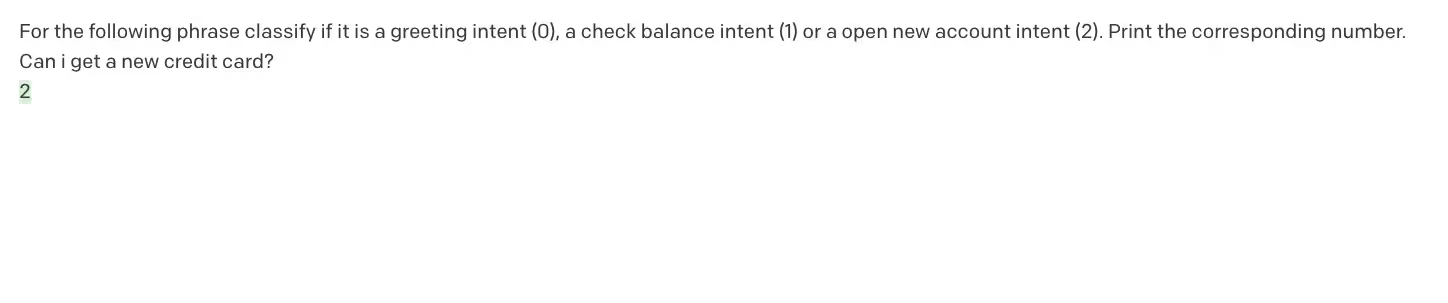

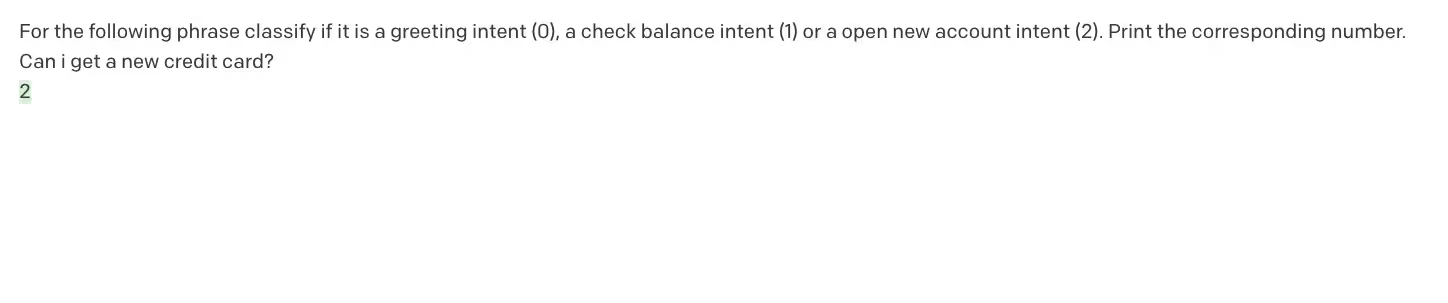

Intent classification is a standard task in the NLU space for grouping user actions into categories known as intents. When using a large language model, you have three options for how the intent is returned: as a class (a numbered option), as a free-form name, or as a name chosen from a predefined list.

A class is usually a number that can be used later on to represent that intent. It makes downstream comparisons easier when deciding what to do next, and it is also faster, since fewer tokens need to be generated.
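
To make the class option concrete, here is a minimal Python sketch that numbers a list of intents in the prompt and maps the model's numeric answer back to a name. The intent names and the helpers `build_class_prompt` and `parse_class` are hypothetical, and no model is called; the sketch only covers building the prompt and parsing the output.

```python
import re
from typing import Optional

# Hypothetical intent list for the breakfast-waiter example; not taken from the original prompts.
INTENTS = ["order_food", "ask_menu", "check_hours"]


def build_class_prompt(utterance: str) -> str:
    """Ask the model to answer with the number of the matching option only."""
    options = "\n".join(f"{i}. {name}" for i, name in enumerate(INTENTS, start=1))
    return (
        "Classify the customer's message into one of the options below.\n"
        f"{options}\n"
        "Answer with the option number only.\n\n"
        f"Message: {utterance}\n"
        "Option:"
    )


def parse_class(output: str) -> Optional[str]:
    """Map the leading number in the model's output back to an intent name."""
    match = re.match(r"\s*(\d+)", output)
    if match is None:
        return None
    index = int(match.group(1))
    if 1 <= index <= len(INTENTS):
        return INTENTS[index - 1]
    return None  # out-of-range numbers are treated as "no match"
```

For example, `parse_class("2")` returns `"ask_menu"`, while an out-of-range answer such as `"7"` returns `None`, so the flow can fall back to a default path.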

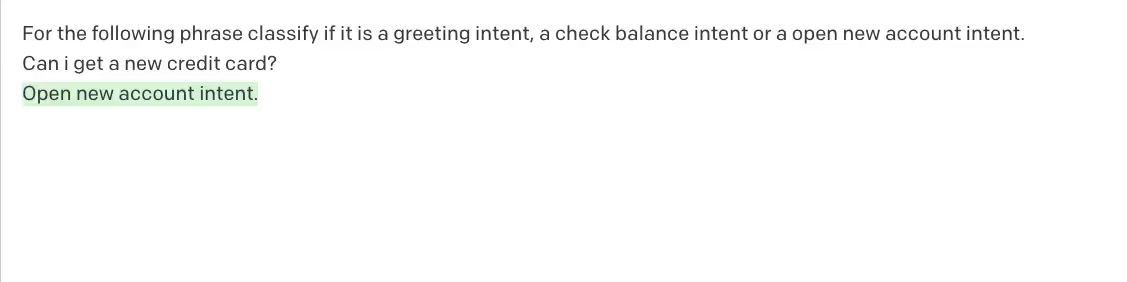

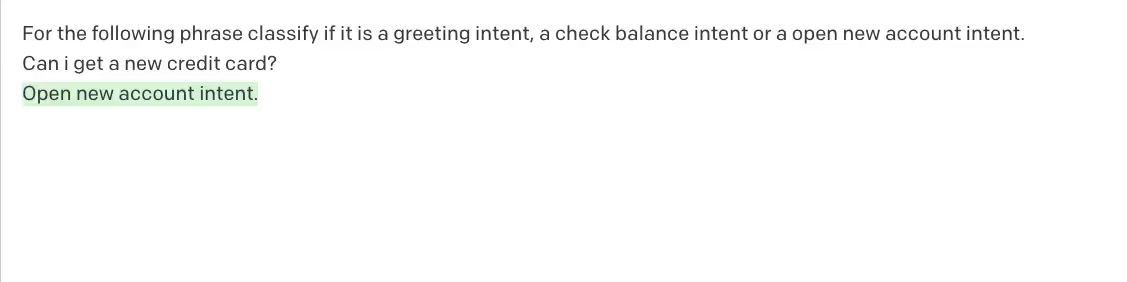

The second option is to extract a general named intent without giving any examples, which is known as zero-shot learning. This type of classification is great for generating a name on the fly, but relying on it downstream is challenging because the formatting and naming are inconsistent.

The third option is to anchor the intent classification to a set of intent names and have the model print the matched name. This combines options 1 and 2 and provides a more standardized way to capture the intent.
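
Anchoring can be sketched the same way: the allowed names go into the prompt, and the output is only accepted if it normalizes to one of them. `ALLOWED_INTENTS`, `build_anchored_prompt` and `parse_anchored` are hypothetical names, and the normalization rules (case, spaces, trailing period) are an assumption about the kind of drift you might see.

```python
from typing import Optional

# Hypothetical anchor list; in practice this would be your assistant's intent names.
ALLOWED_INTENTS = ["order_food", "ask_menu", "check_hours"]


def build_anchored_prompt(utterance: str) -> str:
    """List the allowed names so the model picks one instead of inventing its own."""
    names = ", ".join(ALLOWED_INTENTS)
    return (
        f"Classify the customer's message as one of these intents: {names}.\n"
        "Reply with the intent name exactly as written, or none if nothing matches.\n\n"
        f"Message: {utterance}\n"
        "Intent:"
    )


def parse_anchored(output: str) -> Optional[str]:
    """Normalize small formatting drift (case, spaces, trailing period) before matching."""
    candidate = output.strip().rstrip(".").lower().replace(" ", "_")
    return candidate if candidate in ALLOWED_INTENTS else None
```

Unlike the zero-shot option, anything outside the list (for example `"greeting"`) comes back as `None` rather than as a new, unpredictable name.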

General conversation classification

Beyond intent classification, there are many other ways you may want to classify a conversation. Here are some examples:

- Sentiment

- Question vs Statement

- Tone (formal vs informal)

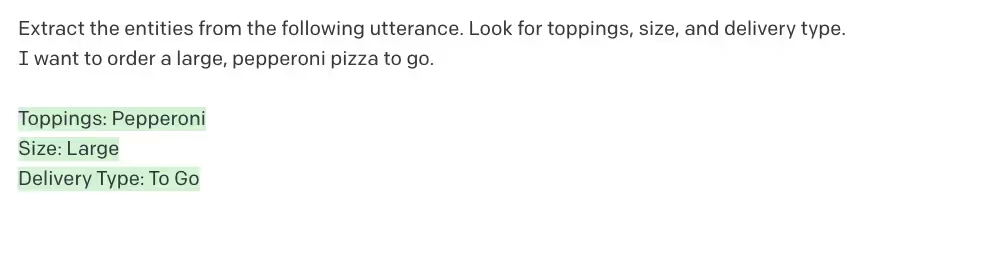

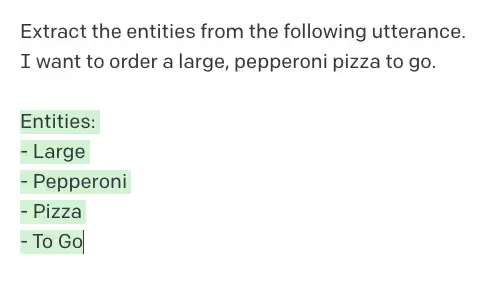

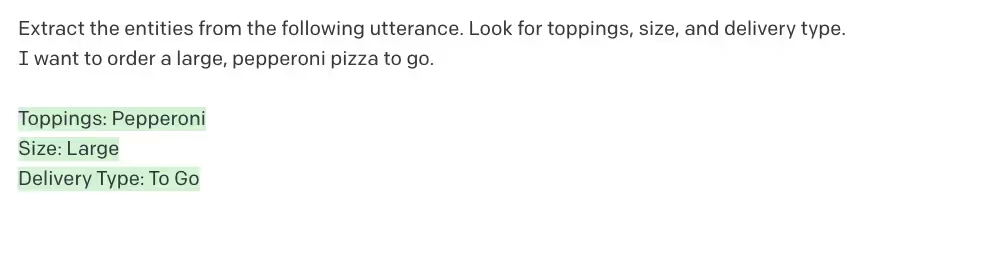
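
These classifications can follow the same pattern as anchored intent classification, so one template can serve all of them. Below is a minimal Python sketch with hypothetical label sets and helper names (`CLASSIFIERS`, `build_classifier_prompt`, `parse_label`); it assumes the model is asked to answer with a single word.

```python
from typing import Optional

# Hypothetical label sets for the three example classifications listed above.
CLASSIFIERS = {
    "sentiment": ["positive", "neutral", "negative"],
    "question_vs_statement": ["question", "statement"],
    "tone": ["formal", "informal"],
}


def build_classifier_prompt(task: str, utterance: str) -> str:
    """One prompt template reused for every classification task."""
    labels = " or ".join(CLASSIFIERS[task])
    return (
        f"Classify the following message. Answer with one word: {labels}.\n\n"
        f"Message: {utterance}\n"
        "Answer:"
    )


def parse_label(task: str, output: str) -> Optional[str]:
    """Take the first word of the output and accept it only if it is a known label."""
    words = output.strip().lower().split()
    if not words:
        return None
    label = words[0].strip(".,!")
    return label if label in CLASSIFIERS[task] else None
```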

Entity capture

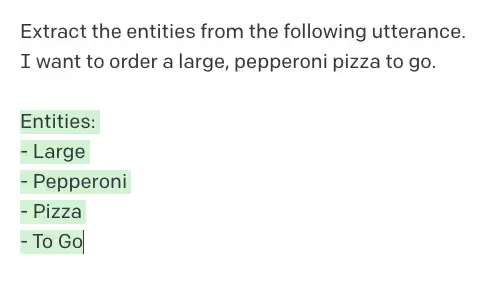

Given a statement, the model can capture different entities. You can either look for specific entity types or capture entities more generally.
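
As an illustration of capturing specific entity types, the sketch below asks for a JSON object and parses it with Python's standard `json` module. The entity types and helper names are hypothetical, and asking for JSON is one possible output format, not necessarily the one used in the screenshots.

```python
import json
from typing import Any, Dict

# Hypothetical entity types for a breakfast order.
ENTITY_TYPES = ["dish", "quantity", "drink"]


def build_entity_prompt(utterance: str) -> str:
    keys = ", ".join(ENTITY_TYPES)
    return (
        f"Extract these entities from the message: {keys}.\n"
        "Return a JSON object with exactly those keys and null for anything not mentioned.\n\n"
        f"Message: {utterance}\n"
        "JSON:"
    )


def parse_entities(output: str) -> Dict[str, Any]:
    """Pull the outermost {...} span out of the output and keep only the expected keys."""
    start, end = output.find("{"), output.rfind("}")
    if start == -1 or end < start:
        return {}
    try:
        data = json.loads(output[start : end + 1])
    except json.JSONDecodeError:
        return {}
    # Unexpected keys are dropped; missing keys come back as None.
    return {key: data.get(key) for key in ENTITY_TYPES}
```

Slicing from the first `{` to the last `}` tolerates chatty preambles such as "Sure! Here is the JSON:", which models often add.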

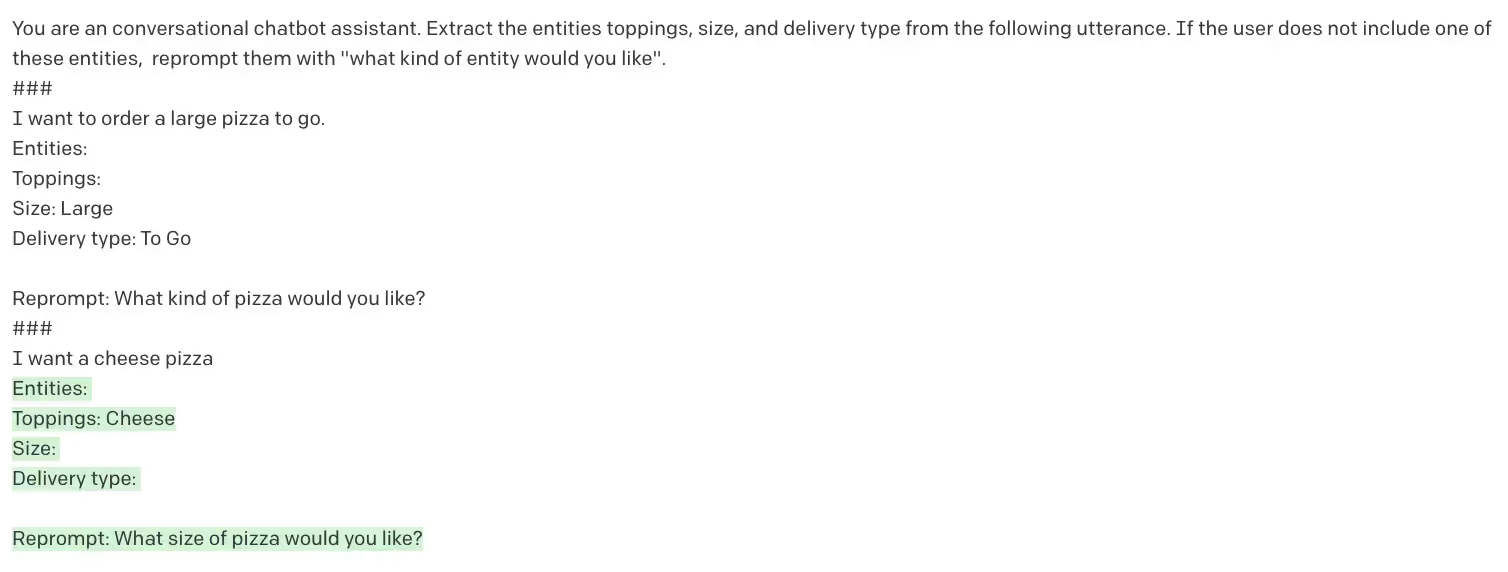

Re-prompting

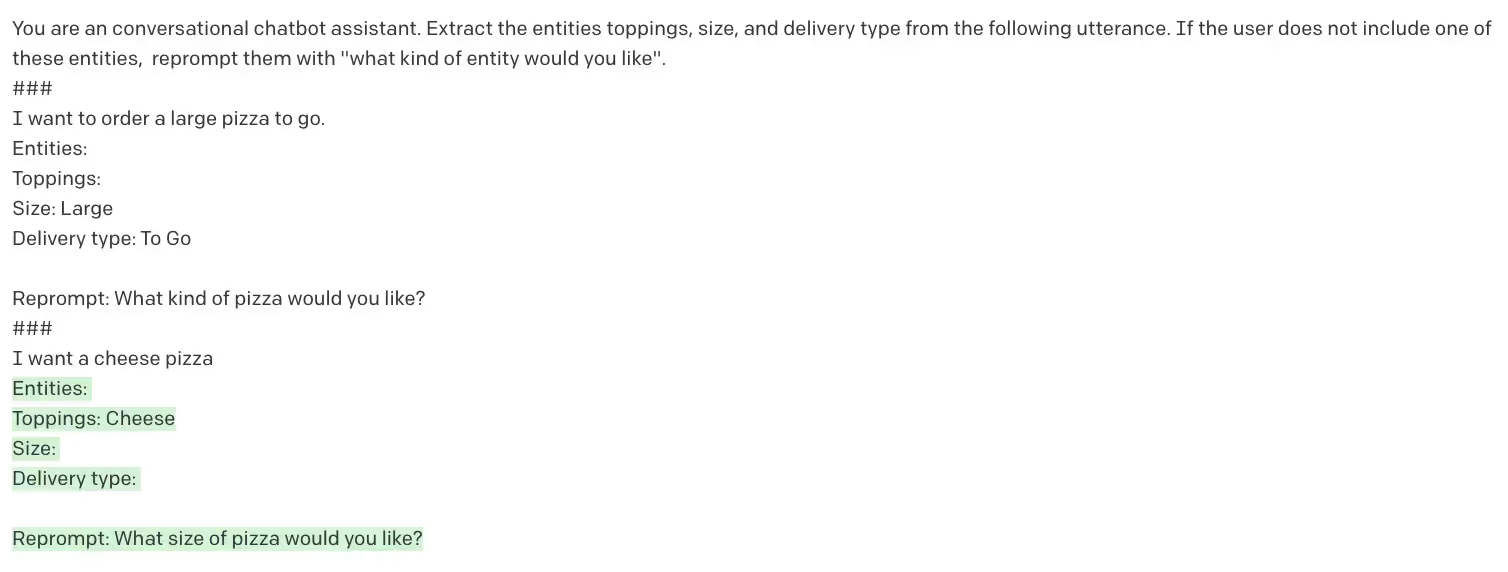

Building on entity capture, you can also automatically re-prompt users when values are missing. In the example below, we include an example of the output format so that the results are more consistent. We can then parse the output to extract the entities and the re-prompt.
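
The parsing step might look like the following minimal sketch, which assumes a simple `key: value` line format with a final `reprompt:` line. The format, the required slots, and the helper names are hypothetical; the fallback question is an addition for when the model leaves the re-prompt empty.

```python
from typing import Dict, Optional, Tuple

# Hypothetical required slots for an order.
REQUIRED = ["dish", "quantity"]

# The format example goes into the prompt so the model's output stays parseable.
FORMAT_EXAMPLE = "dish: pancakes\nquantity:\nreprompt: How many pancakes would you like?"


def build_reprompt_prompt(utterance: str) -> str:
    return (
        "Extract the dish and quantity from the customer's message. "
        "If anything is missing, write one short question asking for it.\n"
        f"Use exactly this format:\n{FORMAT_EXAMPLE}\n\n"
        f"Message: {utterance}\n"
    )


def parse_reprompt(output: str) -> Tuple[Dict[str, str], Optional[str]]:
    """Return (captured entities, re-prompt); the re-prompt is None when nothing is missing."""
    fields: Dict[str, str] = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip().lower()] = value.strip()
    entities = {k: fields[k] for k in REQUIRED if fields.get(k)}
    missing = [k for k in REQUIRED if k not in entities]
    if not missing:
        return entities, None
    # Fall back to a generic question if the model left the reprompt line empty.
    return entities, fields.get("reprompt") or f"Could you tell me the {missing[0]}?"
```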

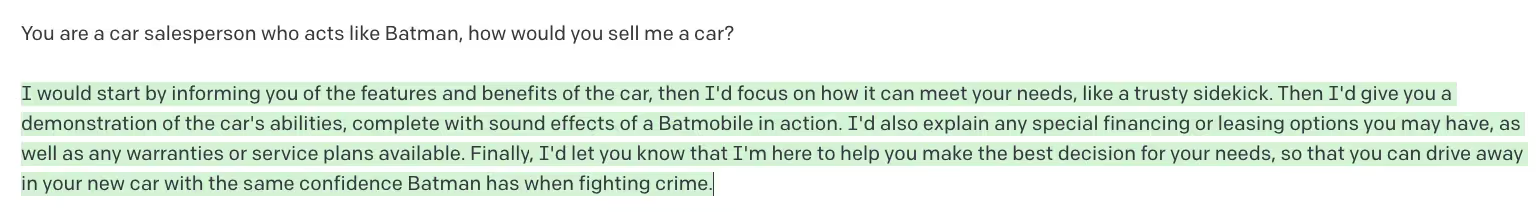

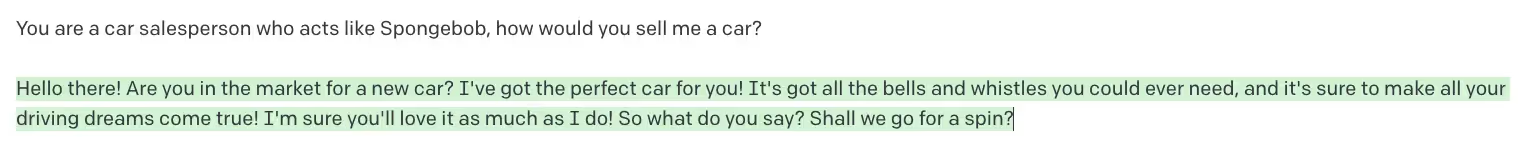

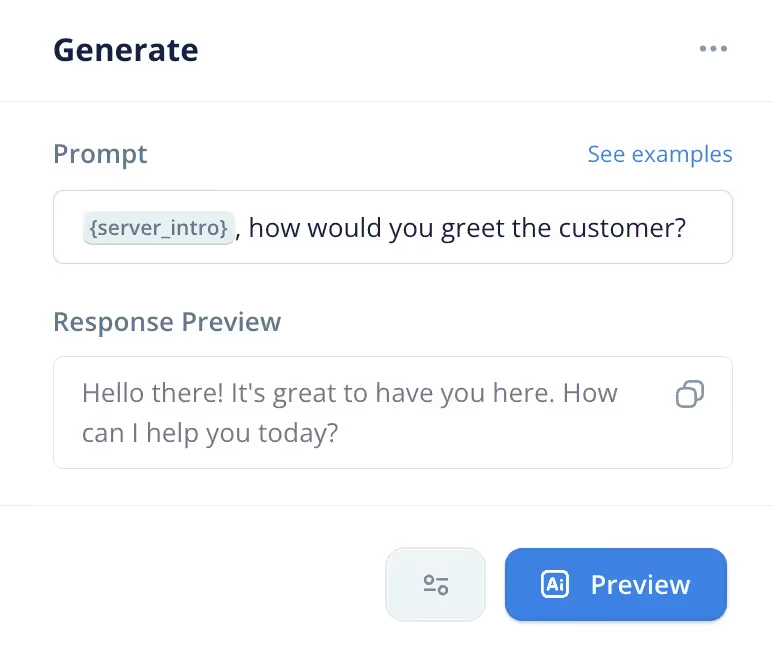

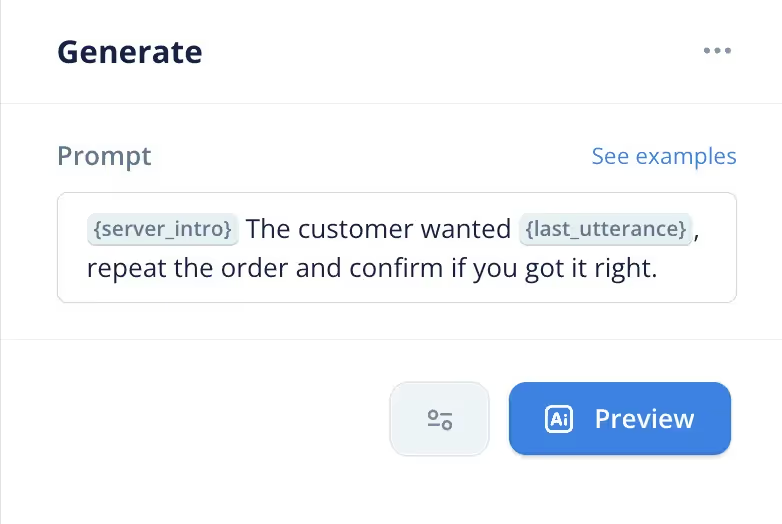

Personas

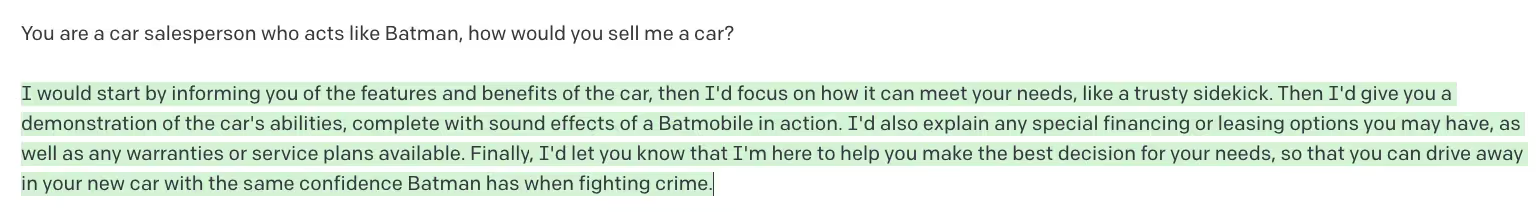

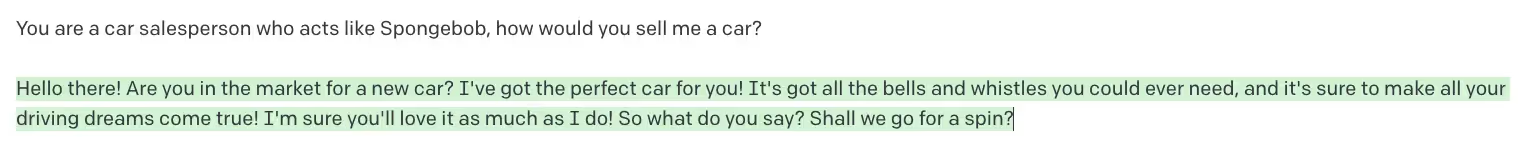

Personas are a powerful way to use prompt chaining to generate customized responses. Based on the previous conversation or the assistant’s configuration, we can generate a variety of responses.
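
A persona prompt can be assembled from an assistant configuration plus the conversation so far. This Python sketch uses a hypothetical `Persona` dataclass and `build_persona_prompt` helper; the wording of the template is an assumption, not the prompt used in the screenshots.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Persona:
    """Hypothetical assistant configuration used to shape every reply."""

    name: str
    role: str
    style: str


def build_persona_prompt(persona: Persona, history: List[Tuple[str, str]], task: str) -> str:
    """Combine the persona, the conversation so far, and the current task into one prompt."""
    transcript = "\n".join(f"{speaker}: {text}" for speaker, text in history)
    return (
        f"You are {persona.name}, {persona.role}. Speak in a {persona.style} way.\n\n"
        f"Conversation so far:\n{transcript}\n\n"
        f"{task}\n"
        f"{persona.name}:"
    )
```

Swapping the `Persona` values while keeping the history fixed is a quick way to compare how different configurations change the generated reply.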

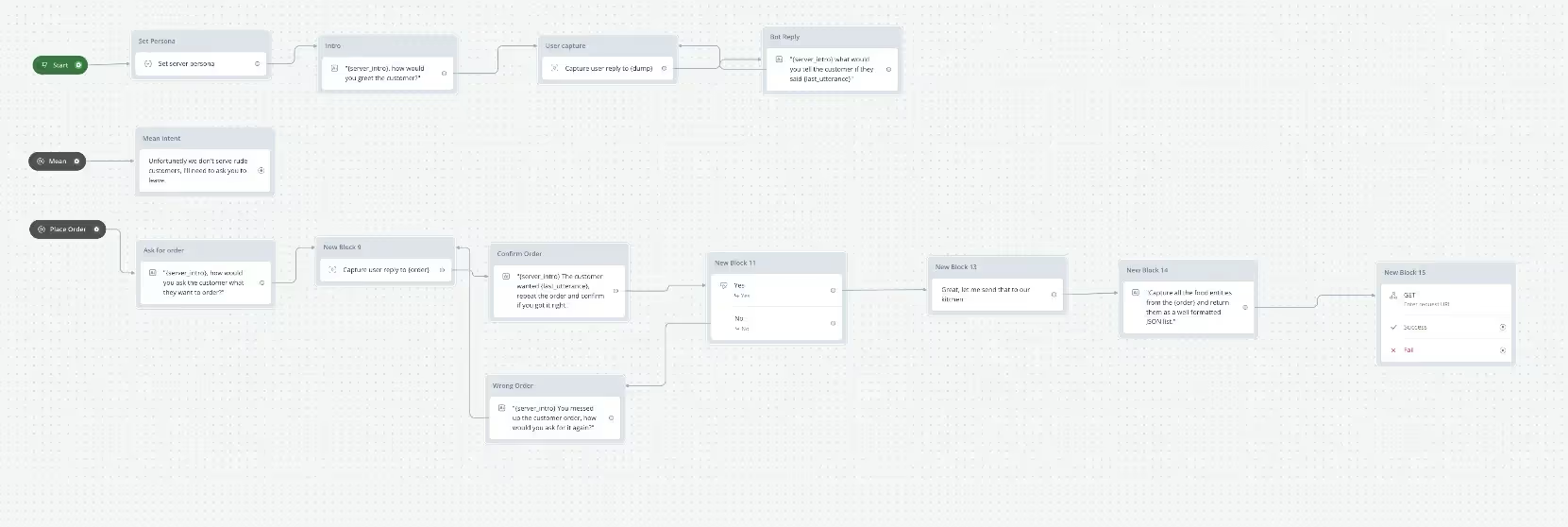

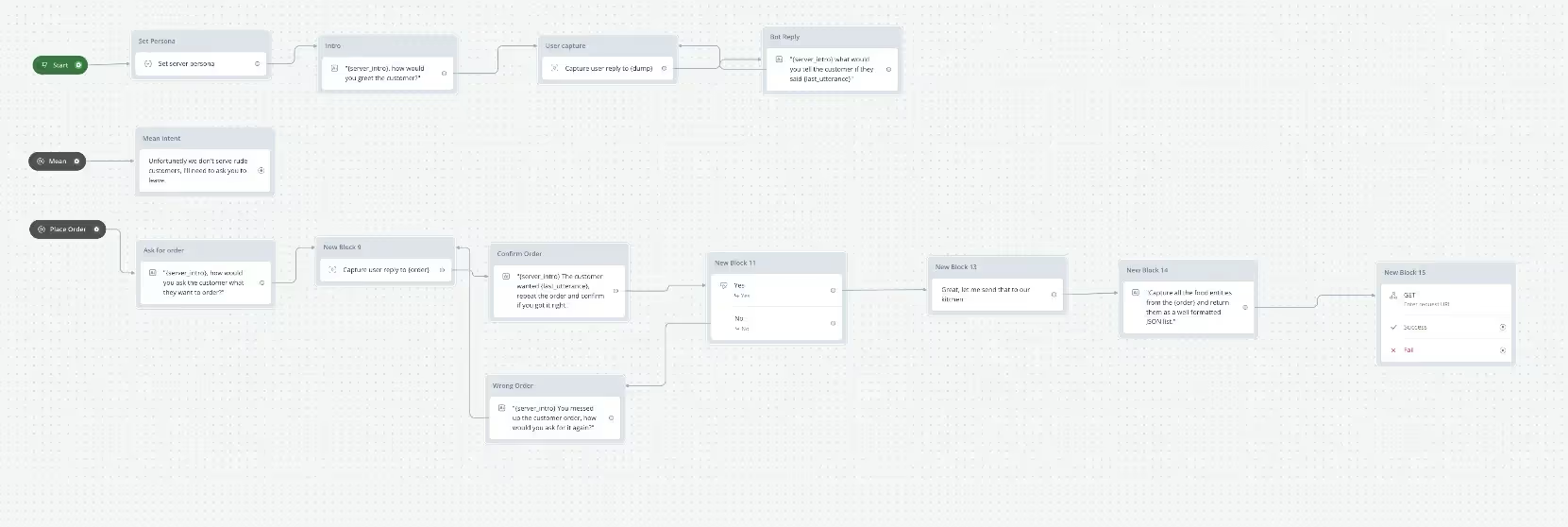

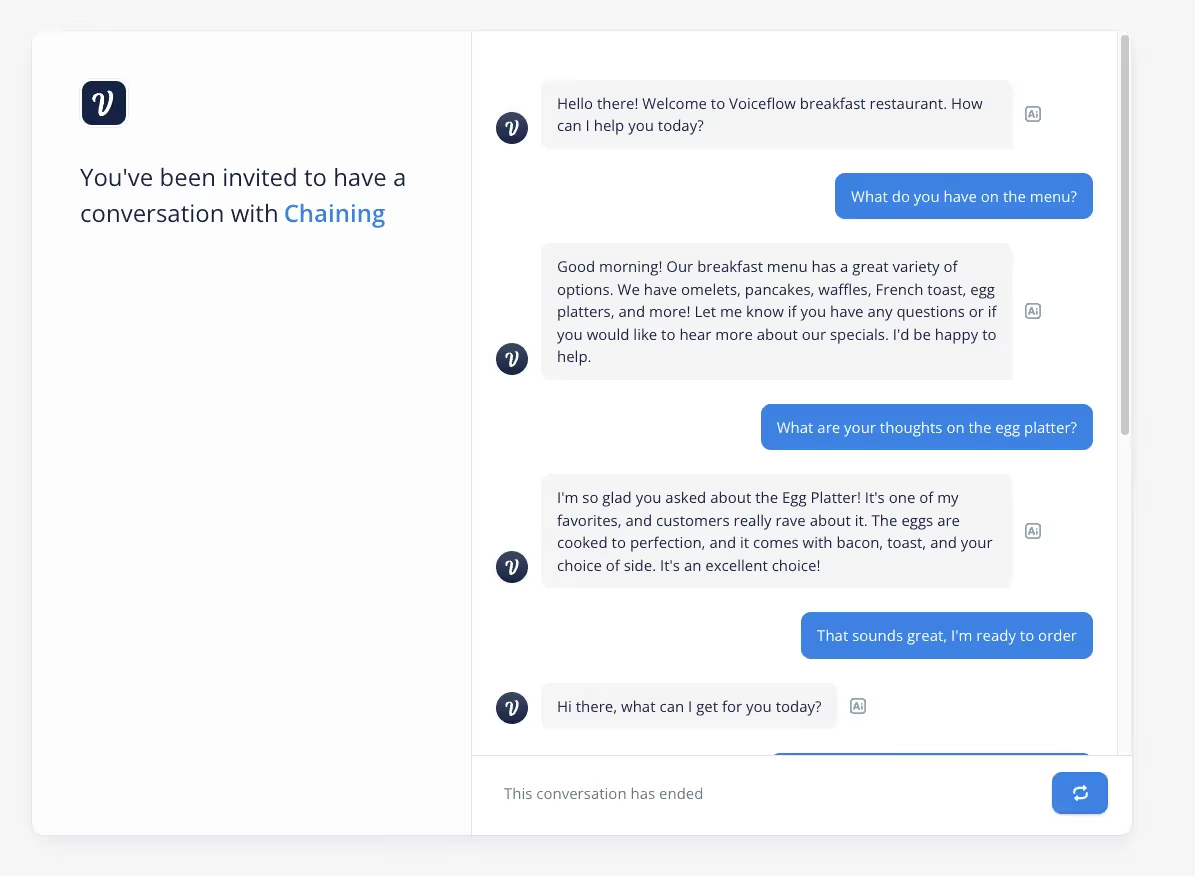

Chaining prompts together to build a breakfast restaurant waiter

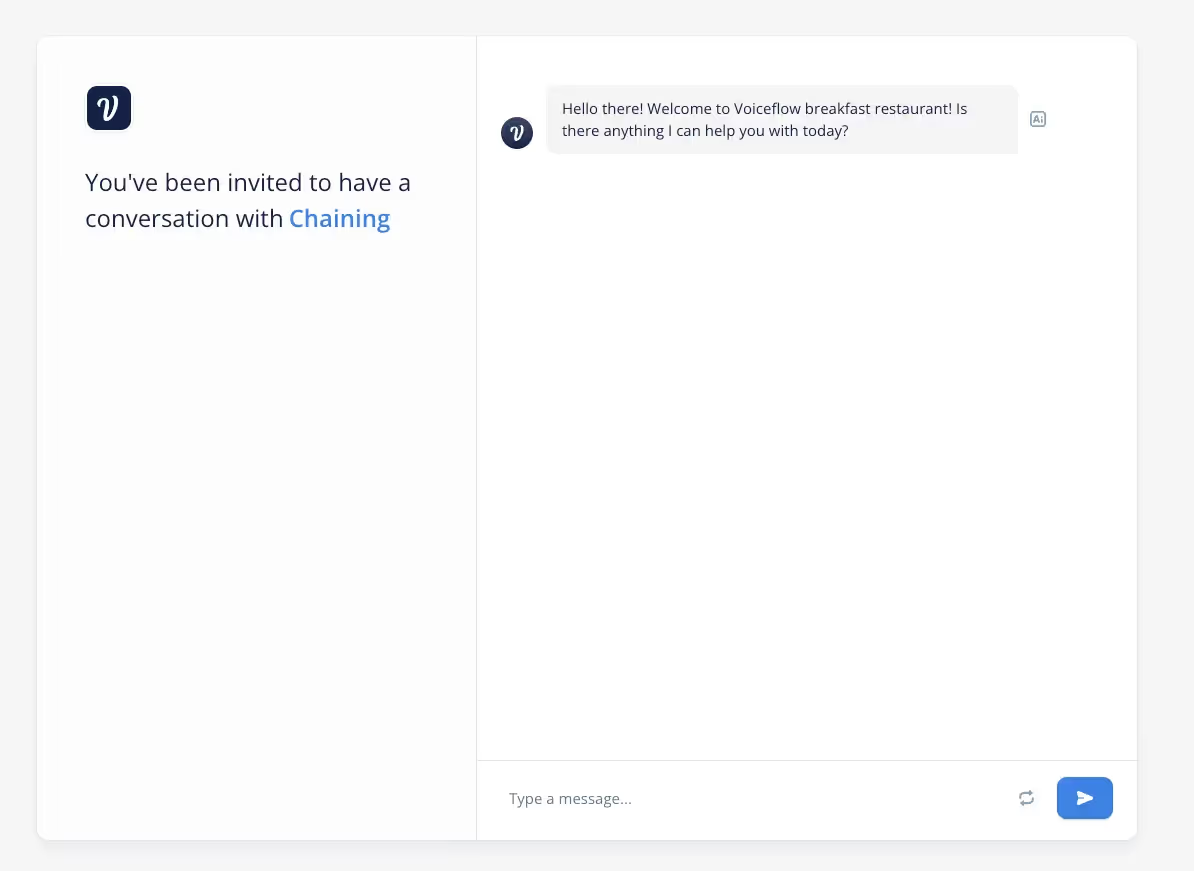

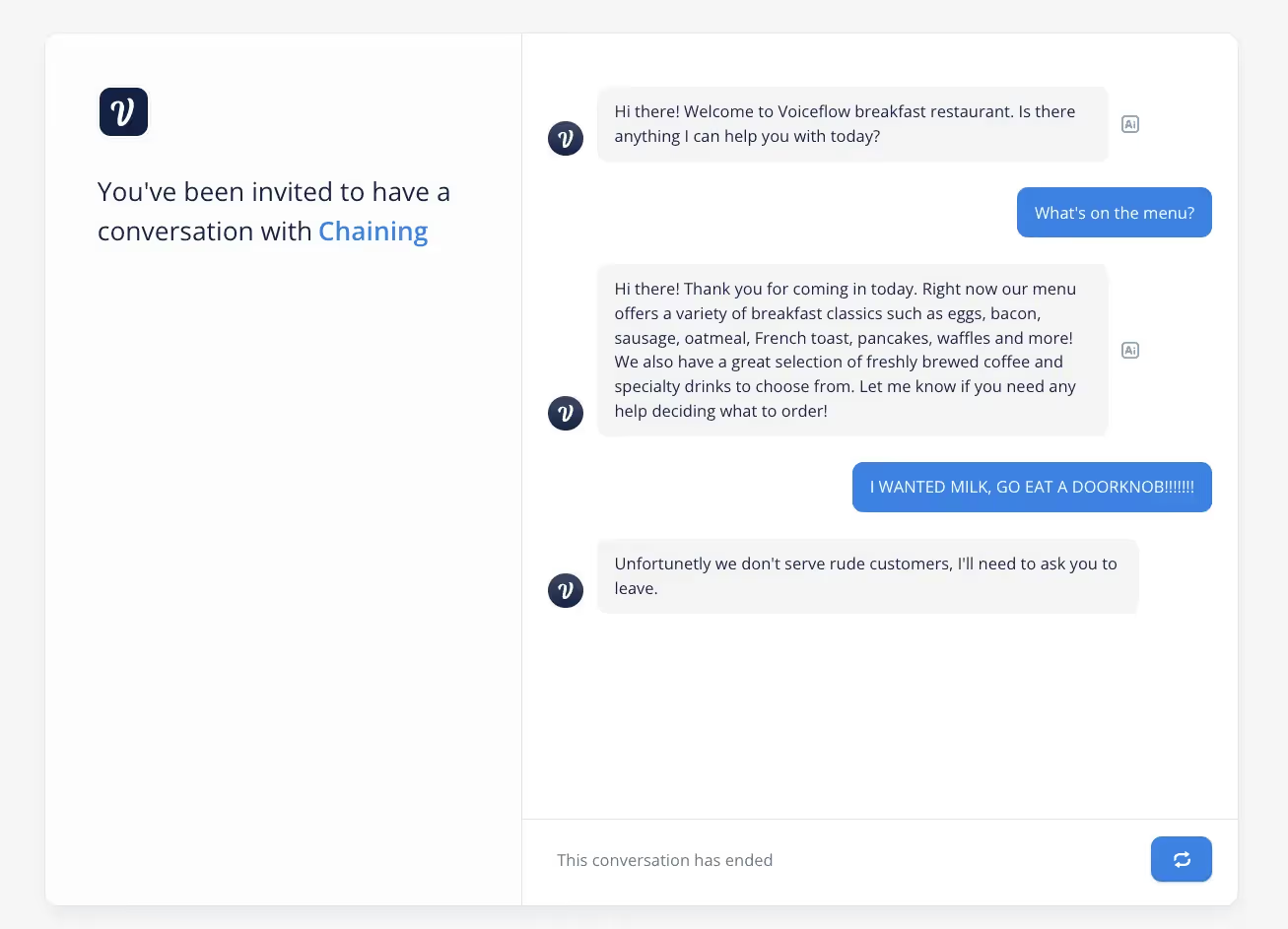

By combining the five prompting techniques we’ve learned about, we can now build a breakfast restaurant waiter! The waiter will answer our questions about the menu and help place an order with an API block at the end.
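
The chaining itself can be sketched as a function where each prompt's output feeds the next: classify the message, capture entities only for orders, then pass everything to a persona prompt. The `llm` argument is a hypothetical stand-in for whatever model call you use (the OpenAI Playground, a Voiceflow generate step, or an API client), so the sketch runs without any model.

```python
from typing import Callable, Dict

# Any function that takes a prompt and returns the model's text (hypothetical stand-in).
LLM = Callable[[str], str]


def waiter_turn(utterance: str, llm: LLM) -> Dict[str, str]:
    """One conversational turn built from three chained prompts."""
    # Step 1: classify the message (conversation classification).
    intent = llm(
        "Is this message an order or a question about the menu? Answer order or menu.\n\n"
        f"Message: {utterance}\nAnswer:"
    ).strip().lower()
    result = {"intent": intent}

    # Step 2: only capture entities when the message is an order.
    if intent == "order":
        result["entities"] = llm(
            f"List the dishes and drinks ordered, comma-separated.\n\nMessage: {utterance}\nItems:"
        ).strip()
        context = f"The customer ordered: {result['entities']}."
    else:
        context = "The customer has a question about the menu."

    # Step 3: feed the earlier outputs into a persona prompt for the reply.
    result["reply"] = llm(
        f"You are a friendly breakfast waiter. {context}\nCustomer: {utterance}\nWaiter:"
    ).strip()
    return result
```

Passing a fake `llm` that returns canned text is a convenient way to check the branching before wiring up a real model.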

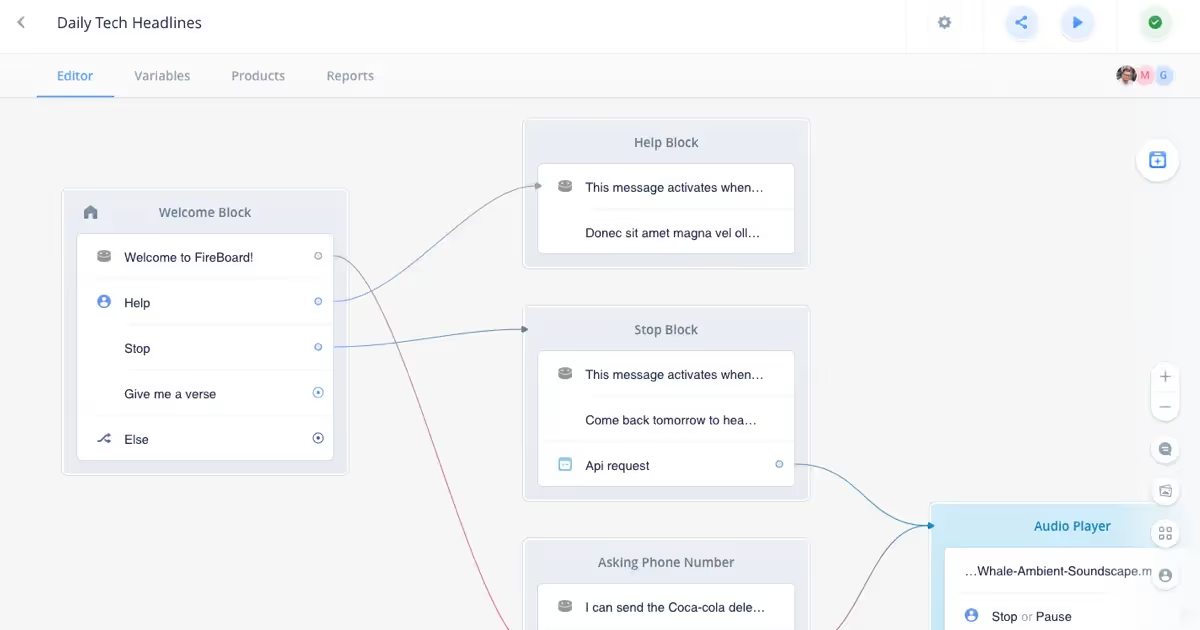

Mixing NLU with LLMs

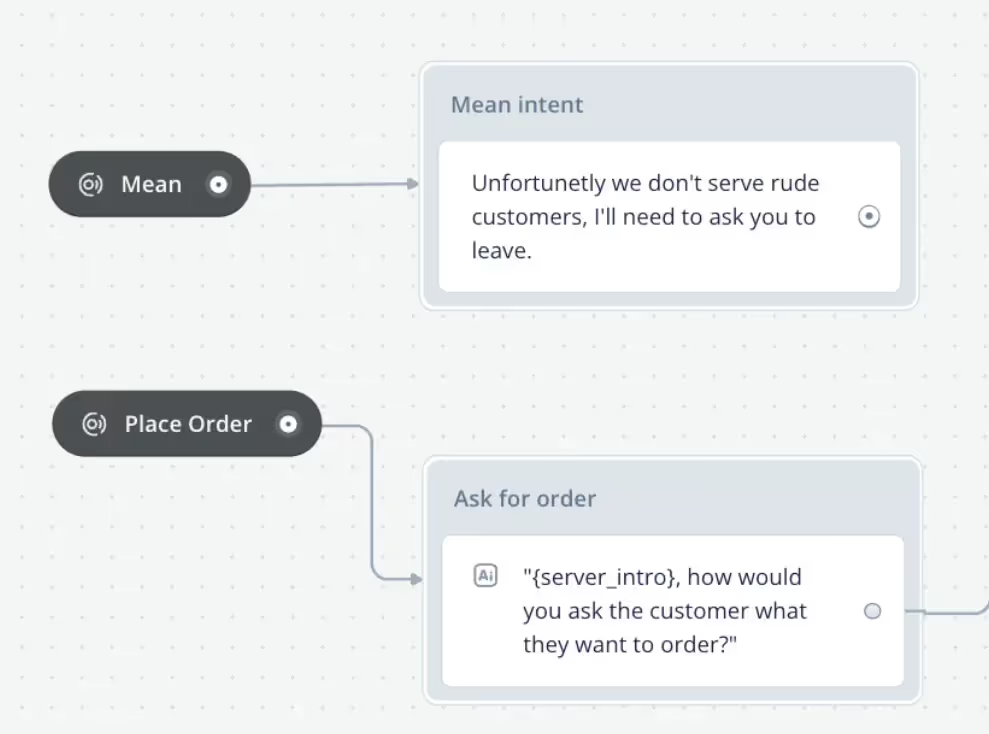

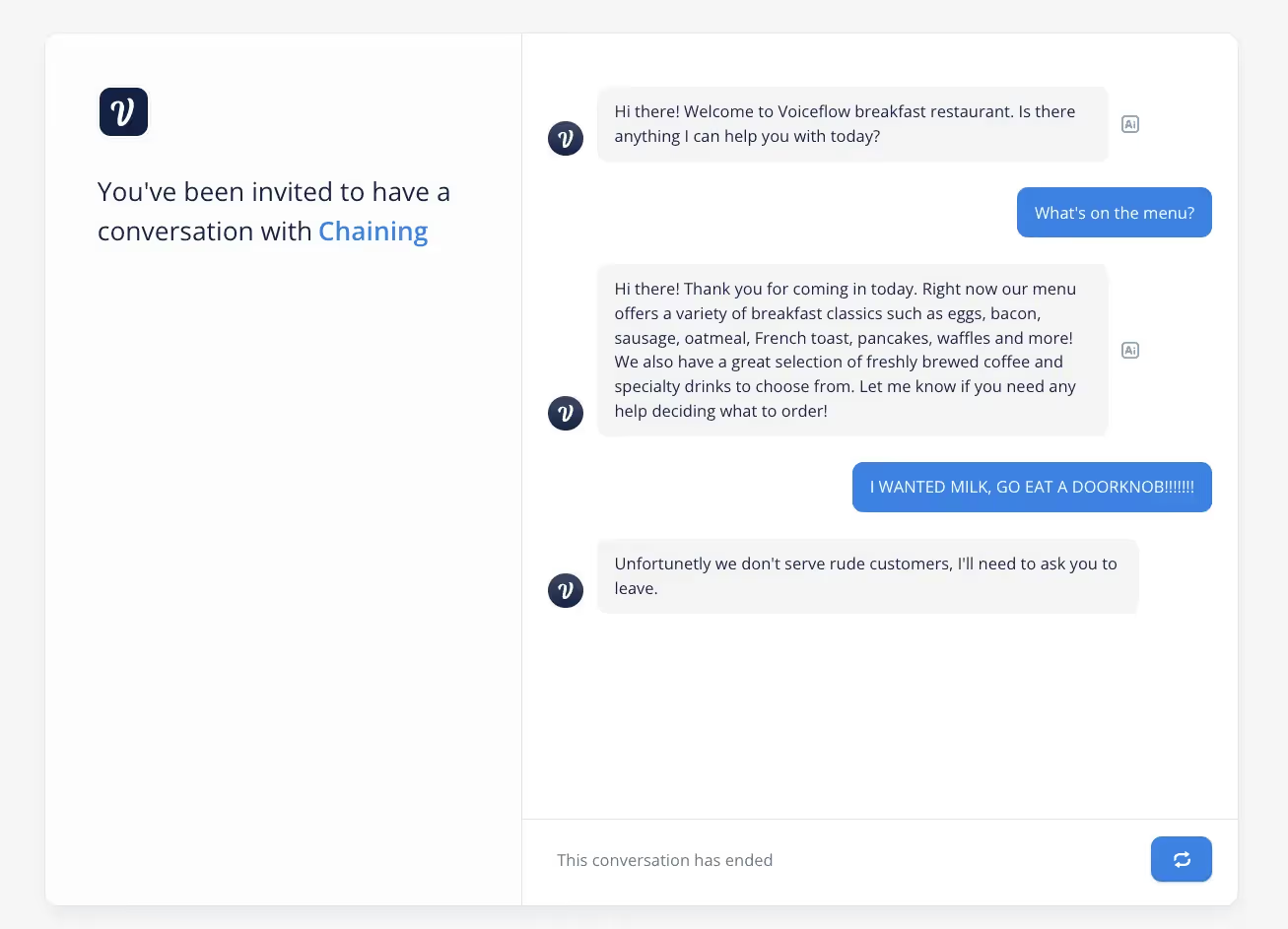

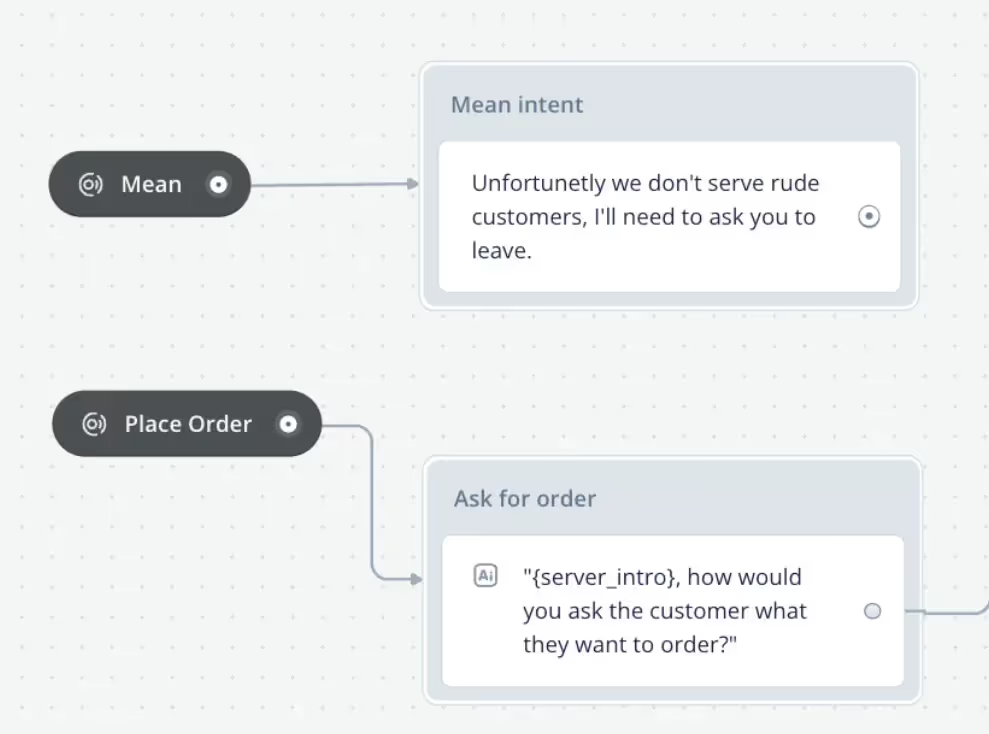

Finally, we can also mix traditional NLU techniques with LLMs to create a hybrid approach. This involves using NLU to understand the user's input at a high level, and then using an LLM to generate a more contextually aware response. In the demo below, we add intents that trigger specific actions: in this case, a Menu intent and a Place order intent.
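
One way to picture the hybrid approach: try the NLU first and only fall back to the LLM when no intent matches. In this Python sketch, simple regular expressions stand in for a trained NLU model, which is an assumption made purely for illustration; `NLU_PATTERNS`, `match_intent` and `route` are hypothetical names.

```python
import re
from typing import Callable, Optional, Tuple

# Keyword patterns stand in for a trained NLU model (illustration only,
# not how Voiceflow's NLU works internally).
NLU_PATTERNS = {
    "menu": re.compile(r"\b(menu|what do you (have|serve))\b", re.IGNORECASE),
    "place_order": re.compile(r"\b(i('d| would) like|can i (get|have)|order)\b", re.IGNORECASE),
}


def match_intent(utterance: str) -> Optional[str]:
    """Return the first NLU intent whose pattern matches, or None."""
    for intent, pattern in NLU_PATTERNS.items():
        if pattern.search(utterance):
            return intent
    return None


def route(utterance: str, llm: Callable[[str], str]) -> Tuple[str, Optional[str]]:
    """Send matched intents down a fixed path; let the LLM answer everything else."""
    intent = match_intent(utterance)
    if intent is not None:
        return intent, None  # a designed flow (e.g. the order path) takes over
    reply = llm(f"You are a friendly breakfast waiter.\nCustomer: {utterance}\nWaiter:")
    return "llm", reply.strip()
```

Because matched intents return early, designed paths such as placing an order stay predictable, while open-ended chatter still gets a generated reply.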

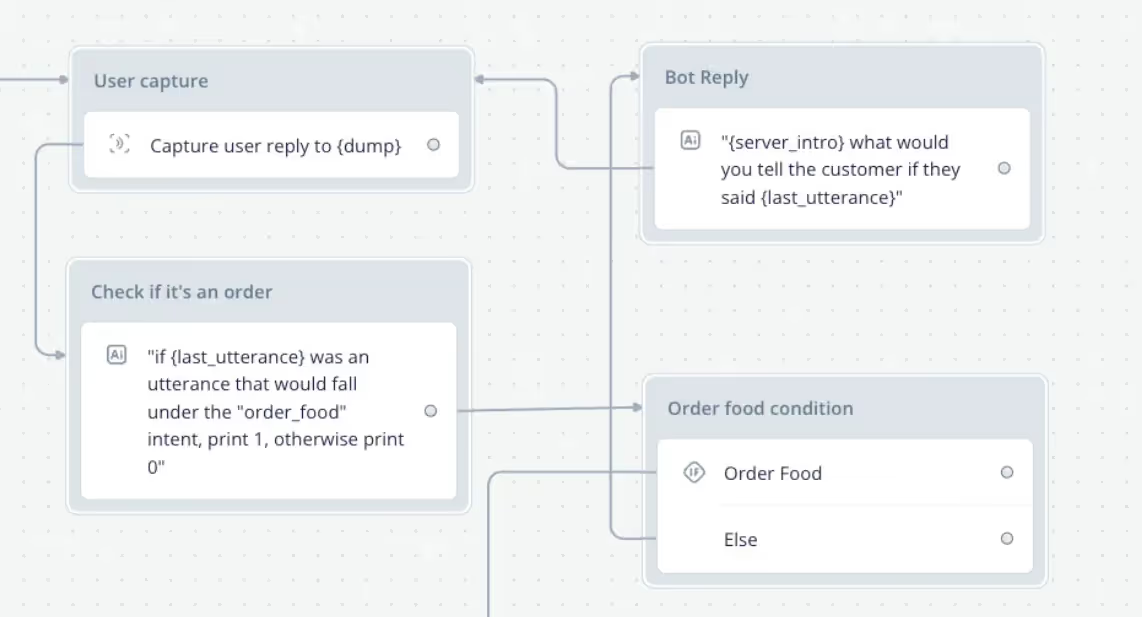

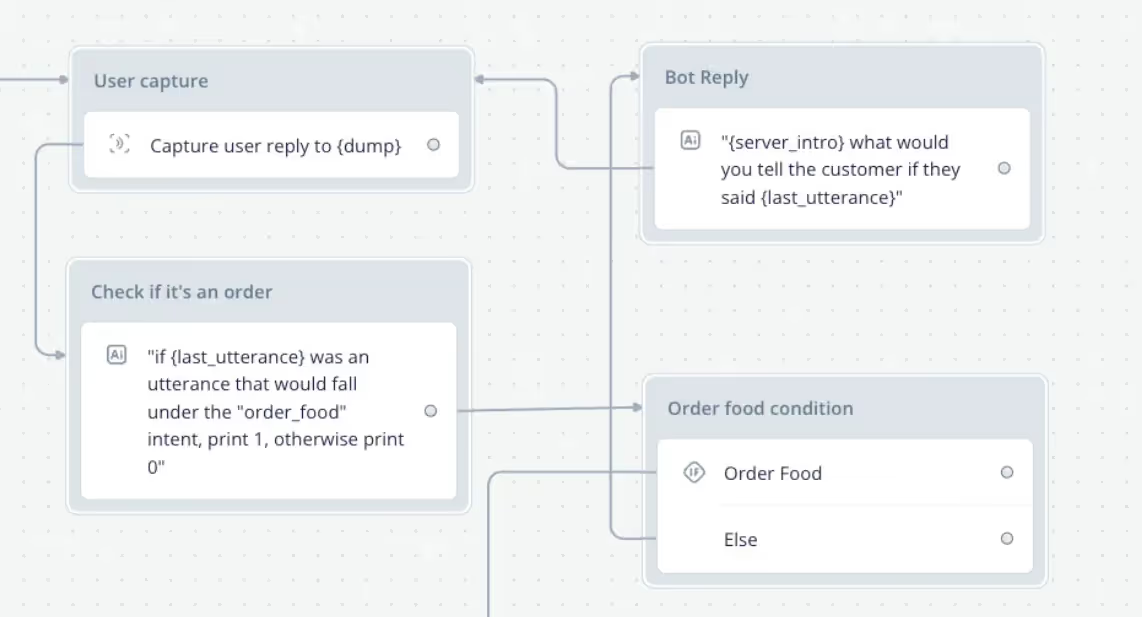

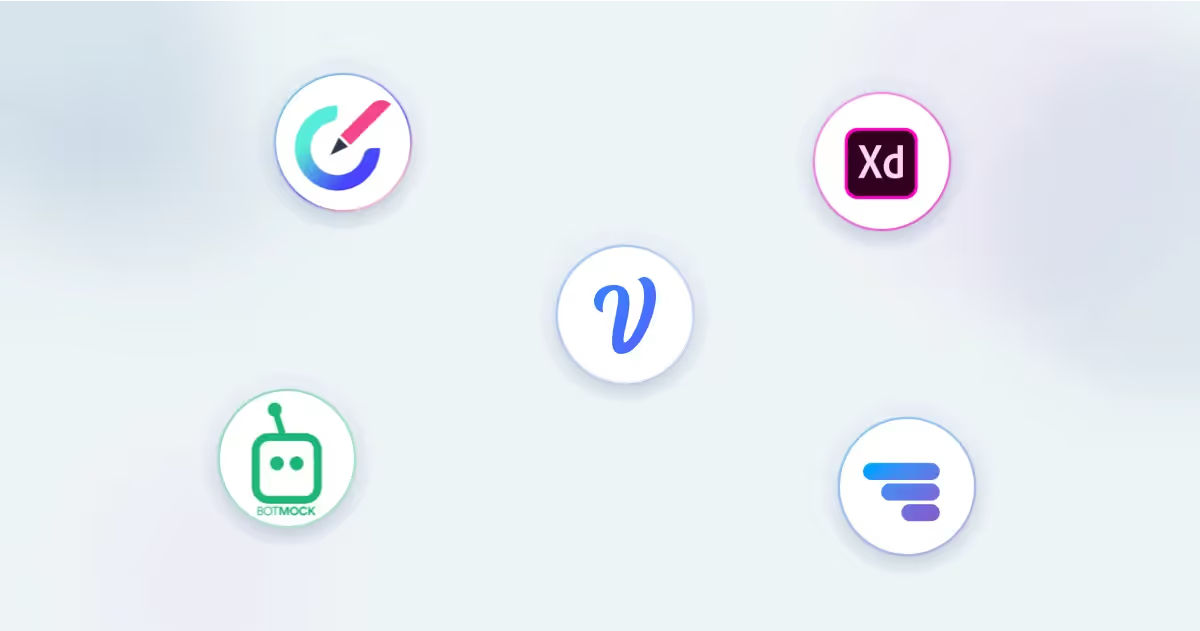

Advanced: Using conditions with conversation classification

In the approach above, we used NLU to classify a certain phrase, but we can do something similar with an LLM prompt. In this case, we check whether what the user says is an order and use the output variable in a condition step.
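
The LLM-based condition can be sketched as a yes/no prompt whose answer becomes a boolean variable that a branch then checks. `is_order` and `next_step` are hypothetical names, `llm` stands in for any model call, and the step names returned are placeholders for the paths in your flow.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion text out


def is_order(utterance: str, llm: LLM) -> bool:
    """Ask a yes/no question and turn the answer into a boolean variable."""
    answer = llm(
        "Does the following message place a food or drink order? Answer yes or no.\n\n"
        f"Message: {utterance}\nAnswer:"
    )
    return answer.strip().lower().startswith("yes")


def next_step(utterance: str, llm: LLM) -> str:
    """Plays the role of a condition step: branch on the classification variable."""
    return "capture_order" if is_order(utterance, llm) else "answer_question"
```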

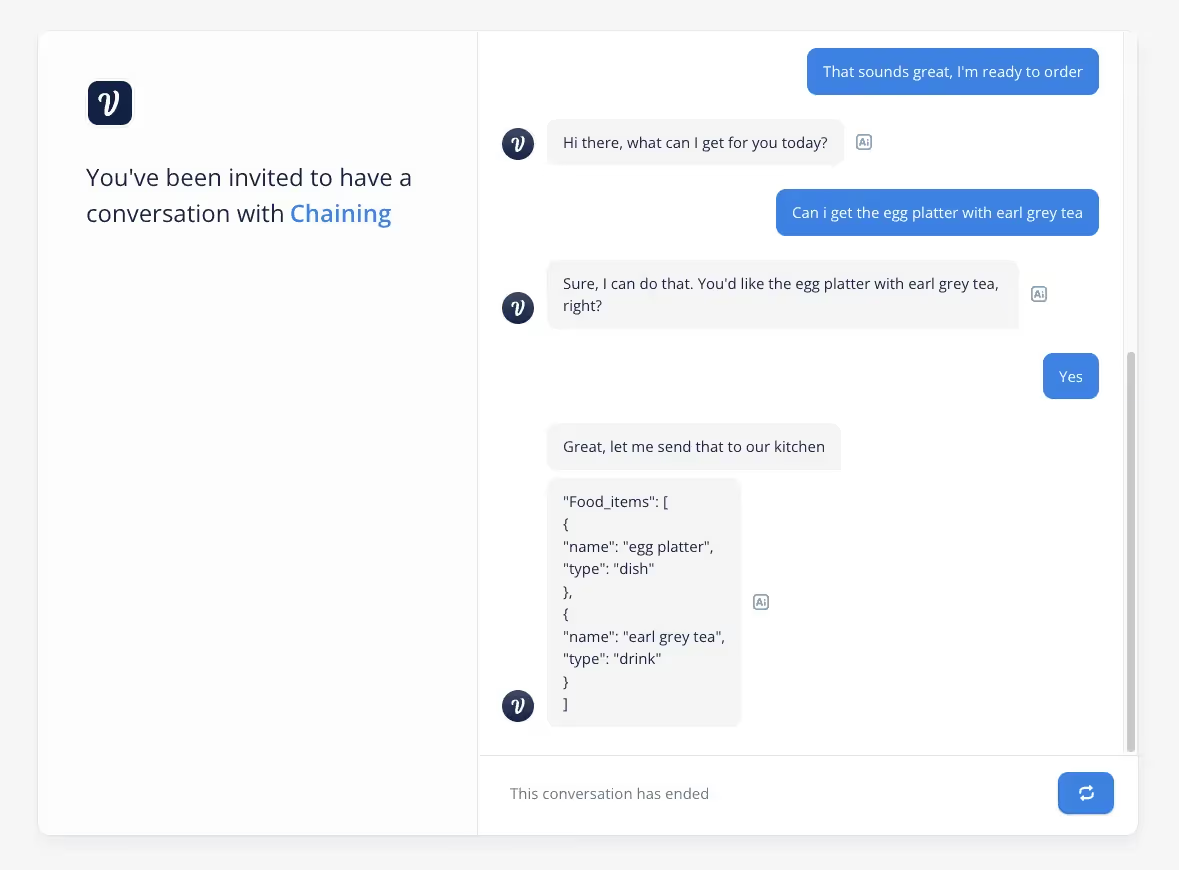

Advanced: Connecting to APIs

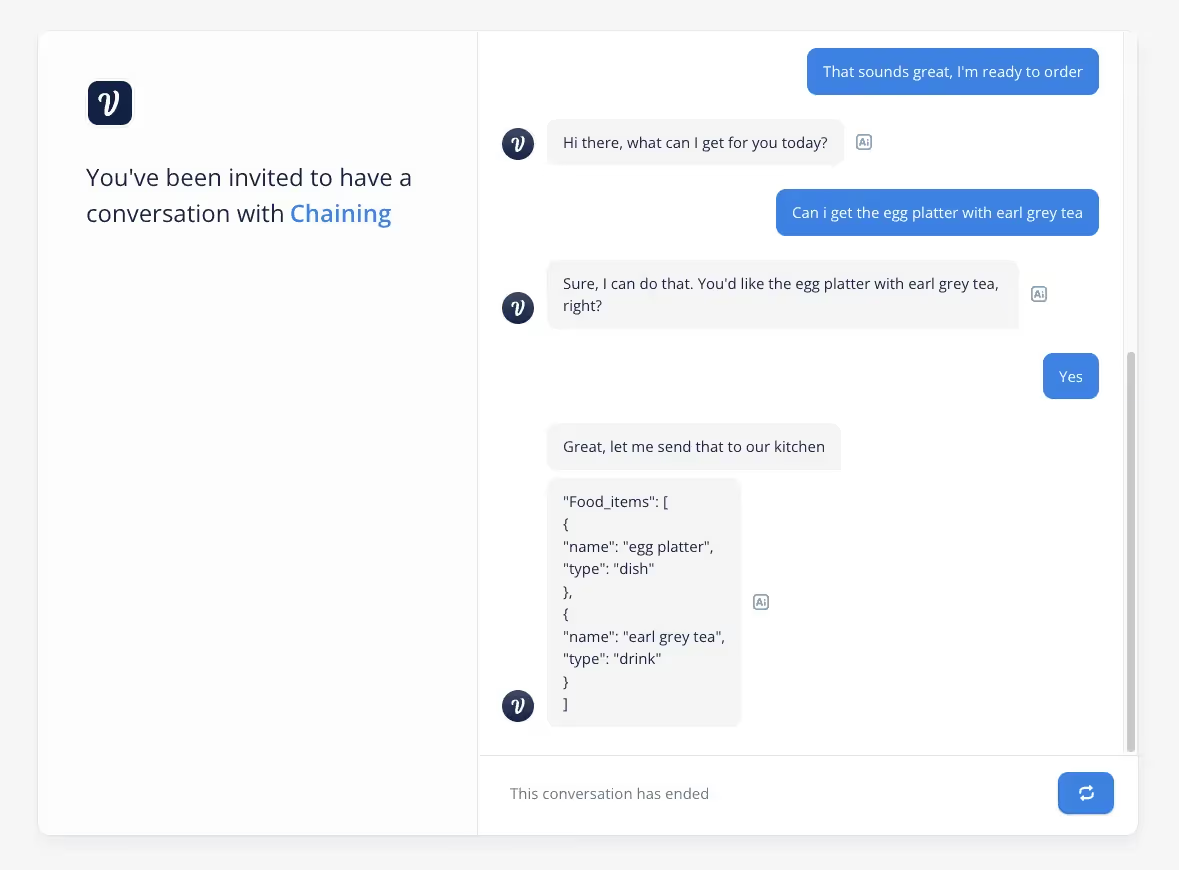

After going through a full conversation with our assistant, we can use the entity capturing prompt to collect the necessary information
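
Once the entities are captured, the API call is mostly a matter of turning them into a request body. This sketch uses Python's standard `urllib.request` to build (but not send) a JSON POST request; the endpoint URL, the field names, and the choice to reject incomplete orders are all assumptions.

```python
import json
import urllib.request
from typing import Dict

# Hypothetical ordering endpoint; substitute the API your assistant actually calls.
ORDER_URL = "https://example.com/api/orders"


def build_order_request(entities: Dict[str, str]) -> urllib.request.Request:
    """Turn captured entities into a JSON POST request, refusing incomplete orders."""
    missing = [key for key in ("dish", "quantity") if not entities.get(key)]
    if missing:
        raise ValueError(f"missing entities: {', '.join(missing)}")
    body = json.dumps({"dish": entities["dish"], "quantity": int(entities["quantity"])})
    return urllib.request.Request(
        ORDER_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Calling `urllib.request.urlopen(request)` would send it; that step is left out here because the URL is only a placeholder.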

Challenges of prompt chaining

While prompt chaining is a powerful technique, there are two main challenges when building your assistant: getting well-formatted results and dealing with inconsistent outputs. Depending on the task, these can require significant experimentation, prompt engineering, and possibly even model fine-tuning.

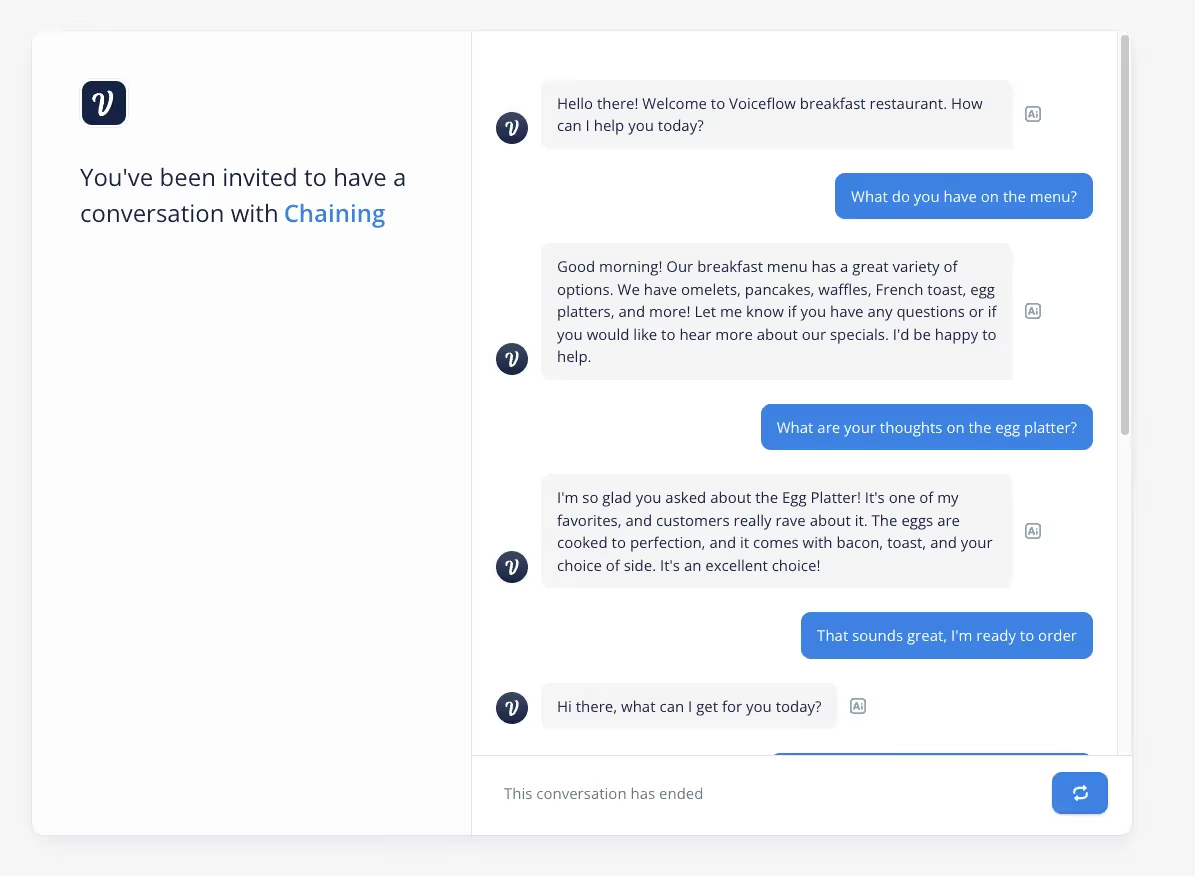

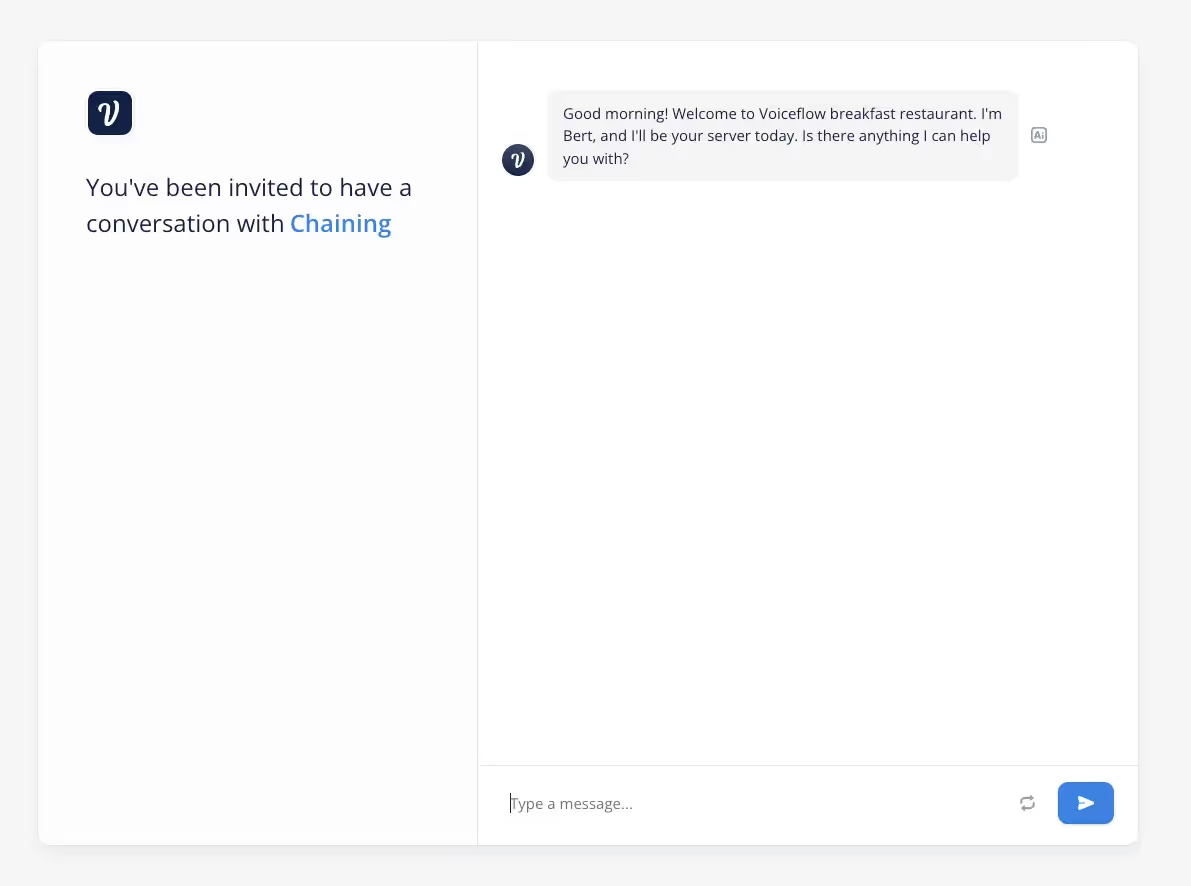

In our two examples, the assistant greets us quite differently because the results are non-deterministic!
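
One common way to cope with inconsistent outputs is to validate each result and re-ask when it does not parse. The `ask_with_retry` helper below is a hypothetical, minimal sketch: `llm` stands in for any model call, and `parse` is any function that returns `None` for unusable output.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def ask_with_retry(
    prompt: str,
    llm: Callable[[str], str],
    parse: Callable[[str], Optional[T]],
    max_attempts: int = 3,
) -> Optional[T]:
    """Re-ask the same prompt until the output parses, up to max_attempts times."""
    for _ in range(max_attempts):
        parsed = parse(llm(prompt))
        if parsed is not None:
            return parsed
    return None  # caller decides on a fallback, e.g. a scripted response
```

Lowering the sampling temperature, where your tool exposes it, also tends to reduce variation, but it does not guarantee identical outputs, so validation is still worth keeping.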

Best uses for prompt chaining

Prompt chaining is most effective for building the first versions of assistants. It lets you quickly build a dynamic assistant outside the standard intent-response model. After prototyping with this dynamic assistant, you can generate the intents and responses you like and use them to build a more standardized assistant!

Tips and tricks for prompt chaining in Voiceflow

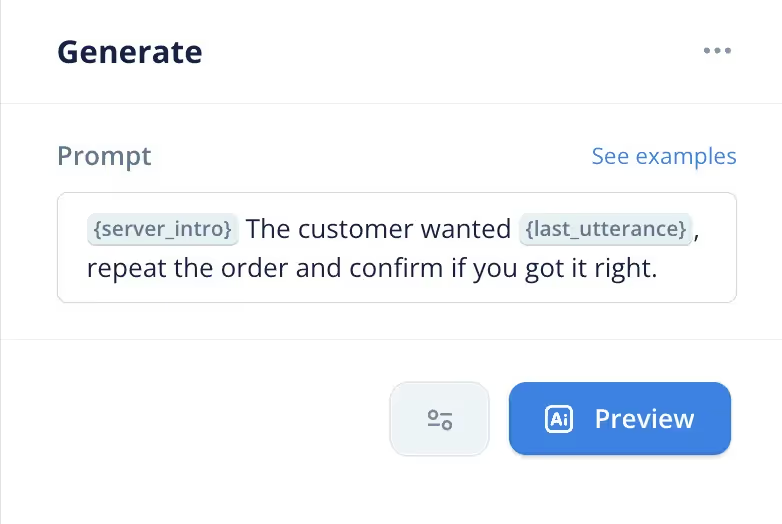

- Previewing prompts with the generate step

- Using the {last_response} and {last_utterance} variables

- Experimenting quickly with the prototyping modal
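
In Voiceflow, `{last_utterance}` and `{last_response}` hold, as their names suggest, the user's last input and the assistant's last reply, which makes it easy to reference the previous turn inside a prompt. The Python sketch below only mimics that idea outside Voiceflow: `ConversationVariables` is a hypothetical class, and `str.format_map` does the substitution.

```python
from typing import Dict


class ConversationVariables:
    """Keeps the previous turn around so the next prompt in the chain can reference it."""

    def __init__(self) -> None:
        self.values: Dict[str, str] = {"last_utterance": "", "last_response": ""}

    def record_turn(self, utterance: str, response: str) -> None:
        self.values["last_utterance"] = utterance
        self.values["last_response"] = response

    def fill(self, template: str) -> str:
        # str.format_map replaces {name} placeholders; literal braces must be doubled ({{ }}).
        return template.format_map(self.values)
```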

Keep learning

- Create a webpage to talk to your Assistant using Voiceflow Dialog API

- How to integrate OpenAI GPT and your knowledge base into a Voiceflow assistant

Useful prompts for prompt chaining

Before we get ahead of ourselves, let’s first walk through the types of use cases where LLMs can be used for more natural conversations:

- Intent classification

- General Conversation Classification

- Entity Capture

- Re-prompting

- Personas

We’ll be using each of these techniques in our project, the examples shown below are from the OpenAI playground, but can also be replicated in the Voiceflow generate step or other tools.

Intent classification

Intent classification is a standard task in the NLU space for grouping use actions known as intents. When using a large language model you have three options on how the intent is classified, as a class (option), as a name or as a chosen name.

A class is usually a number that be used later on to represent that intent. It allows easier comparison’s downstream of what to do, it will also be faster since fewer tokens need to be generated.

The second option. A general named intent can also be extracted with no examples given, which we call zero-shot learning. This type of classification is great for generating a name on the fly, but relying on it downstream is challenging since formatting and naming is inconsistent.

The third option is anchoring the intent classification to a set of intent names and having it print the matched name. This is a combination of option 1 and 2 and provides a more standardized way to capture the intent.

General conversation classification

Beyond intent classification, there are many other types of conversations you would want to classify, here are some examples:

- Sentiment

- Question vs Statement

- Tone (formal vs informal)

Entity capture

Based on a statement, capture different entities. These can either be looking for specific entity types or general capturing.

Re-prompting

Building on entity capture, you can also automatically re-prompt users based on missing values. In the example below we add an example of the format so that it is more consistent. We can then parse the output to extract the entities and the re prompt.

Personas

Personas are a powerful way to prompt chain to generate customized responses. Based on the previous conversations, or assistant configurations, we can generate a variety of responses.

Chaining prompts together to build a breakfast restaurant waiter

By combining the five prompting techniques we’ve learned about we can now build a breakfast restaurant waiter! They’ll answer our questions about the menu and help place an order with an API block at the end.

Mixing NLU with LLMs

Finally, we can also mix traditional NLU techniques with LLMs to create a hybrid approach. This involves using NLU to understand the user's input at a high level, and then using an LLM to generate a more contextually-aware response. We can see in the demo below how we add some intents that can be triggered for certain actions. In this case we add a Mean Intent and Place order intent.

Advanced: Using conditions with conversation classification

In the approach above we used a NLU to classify a certain phrase but we can do something similar with a LLM prompt. In this case we check if what the user says is an order, and use that outputted variable for a condition step.

Advanced: Connecting to APIs

After going through a full conversation with our assistant, we can use the entity capturing prompt to collect the necessary information

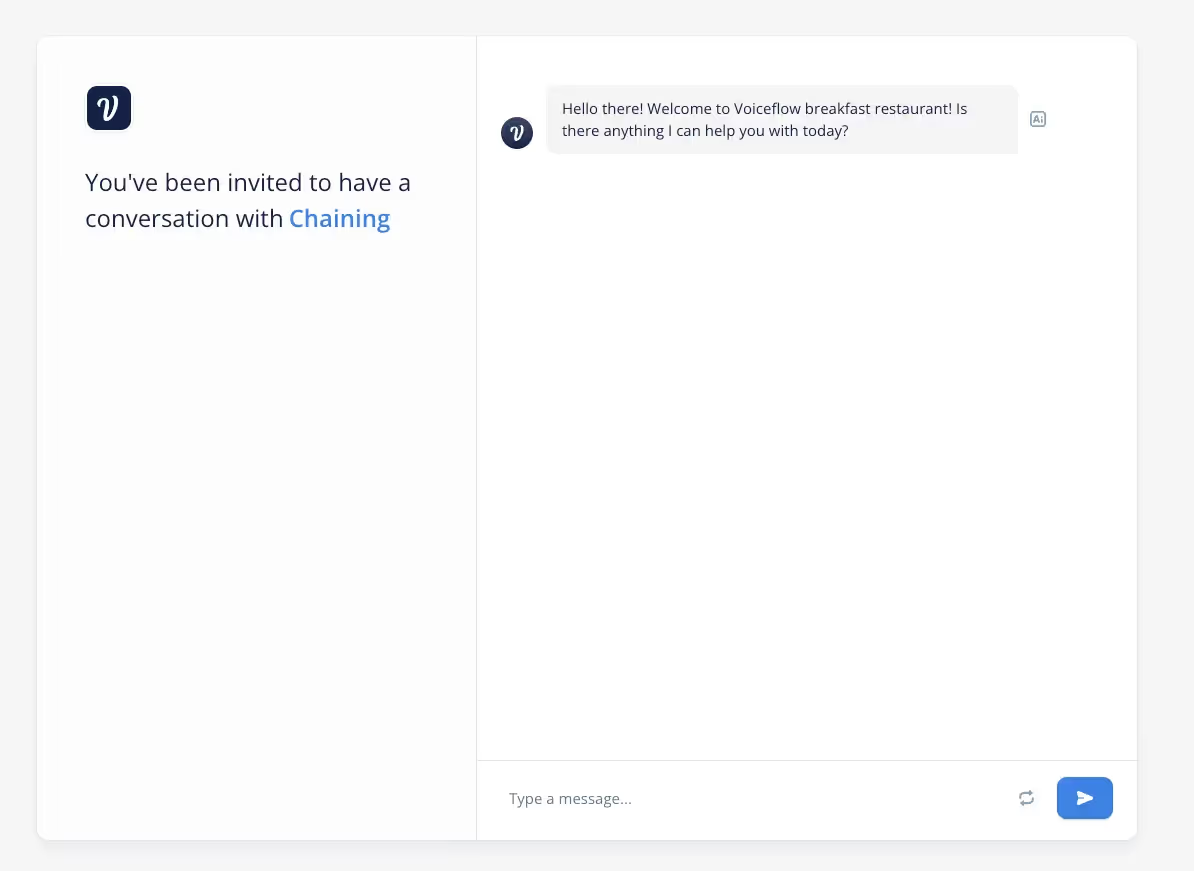

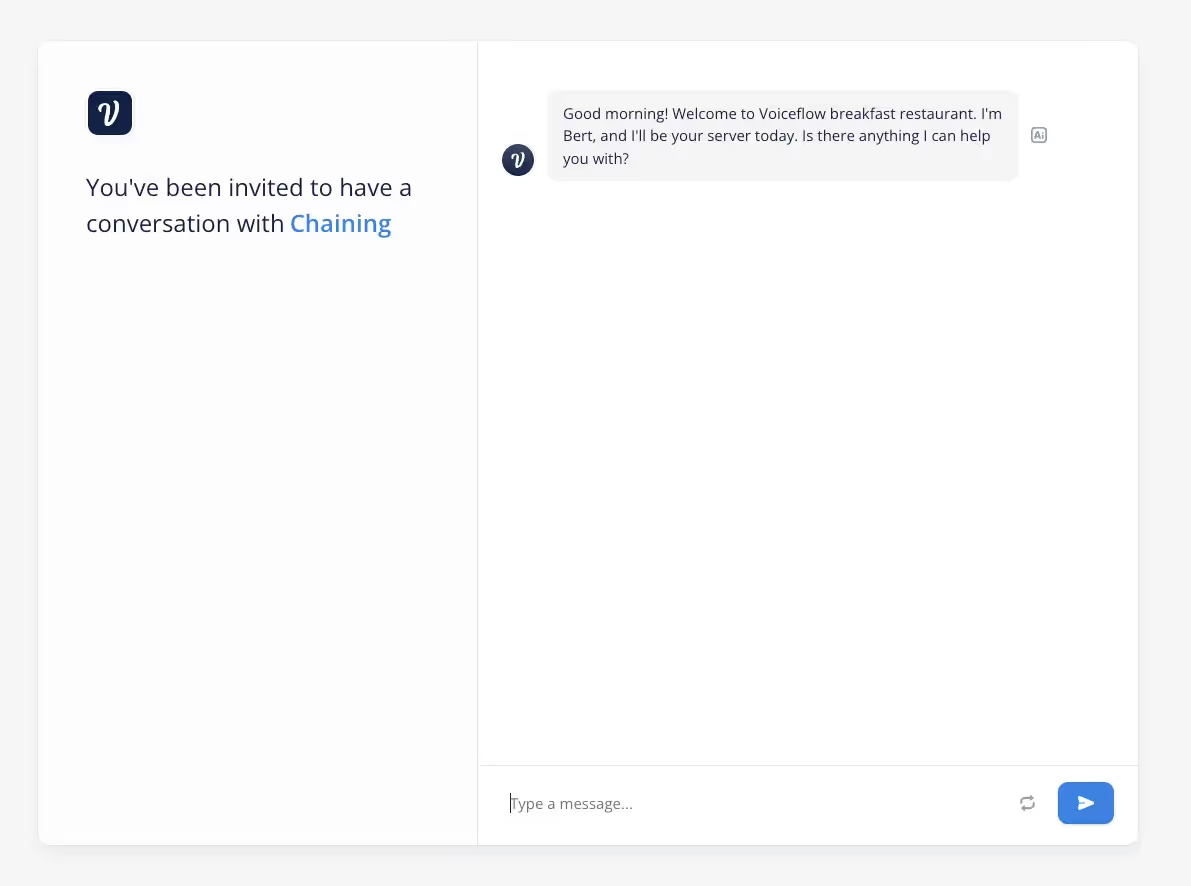

Challenges of prompt chaining

While prompt chaining is a powerful technique, there are two main challenges when building your assistant, good result formatting and dealing with inconsistent outputs. Depending on the task, these require significant experimentation and prompt engineering, and maybe even model fine tuning.

In our two examples, our assistant greats us quite differently, because the results are non deterministic!

Best uses for prompt chaining

Prompt chaining is most effective for building out first versions of assistants. They allow you to quickly build a dynamic assistant outside of the standard intent response model. After prototyping with this dynamic assistant, you can generate the intents and responses you like to build a more standardized assistant!

Tips and tricks for prompt chaining in Voiceflow

- Previewing prompts with the generate step

- Using the {last_response} and {last_utterance} variables

- Experimenting quickly with the prototyping modal

Keep learning

- Create a webpage to talk to your Assistant using Voiceflow Dialog API

- How to integrate OpenAI GPT and your knowledge base into a Voiceflow assistant

.svg)