There's A Right Way To Fail

Let’s begin with a dialogue I pieced together from multiple Google Action horoscope bots to highlight not-so-effective ways that bots respond to unexpected user responses:

Bot: What’s your zodiac sign?

User: #$&@!

Bot: Sorry I didn’t understand. Can you say that again?

User: #$&@!

Bot: Sorry I didn’t quite catch that. What was that?

User: #$&@!

Bot: Sorry I can’t help.

Maybe you’re wondering at this point, as I did: why does the bot say “sorry” so much? Does it even need to? Isn’t this defeatist? When I hear a bot say “sorry” too often or too quickly, I tend to think “this bot sucks.”

And then there’s the strategy of admitting to a mistake when things go wrong, like saying “I didn’t understand.” This seems like a step in the right direction, but only if you actually know what went wrong. Otherwise, it’s presumptuous.

For example, what if I mumbled? Or what if the device didn’t pick up what I said? Or what if the recording of my audio got through, but something else happened on the backend beyond my control?

You may also have noticed some nuance in the bot’s tone. Surely, softening the blow does some good. You certainly don’t want to alienate the user. But a gentler confession like “I didn’t quite catch that,” by itself and without any attempt to make things right, sounds wishy-washy. I’ve even seen some voice apps take a more neutral route and say “something happened.”

But that comes across as vague, even ominous.

Regardless of whether you give a million sorries or no apology at all, you still need to help users do what they came to your app for. And that means putting possible fixes in front of them when things go wrong.

For example, a miscommunication scenario with an Alexa Skill I designed, called Interview Practice, would go down like this:

Bot: Why do you think you’re a good fit for the role? Are you ready to practice this question?

User: #$&@!

Bot: Again, the question is: Why do you think you’re a good fit for the role? Ready? You can also ask for a new question.

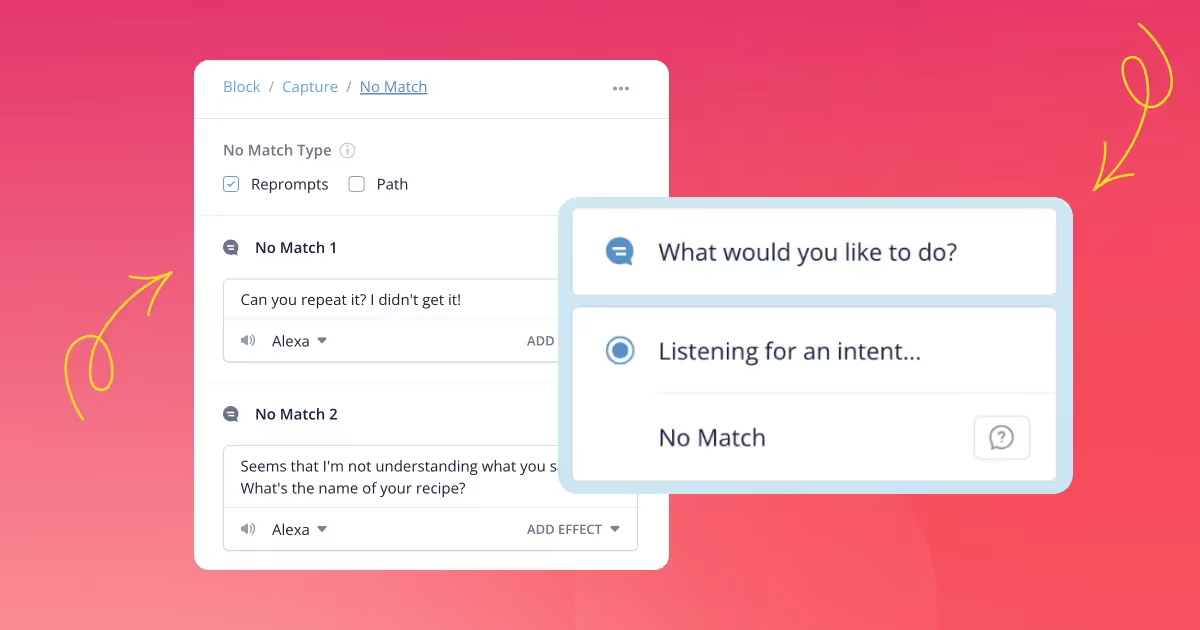

Notice that the only thing that matters is moving the conversation forward. First, the bot repeats the interview question at play in case you didn’t hear it. Then it re-asks the original prompt in condensed form (“Ready?”).

Finally, if that’s not enough, you get another option that addresses a difficulty you might be having. I designed it so that the very first time you say something that doesn’t register, you hear a straightforward fix like “you can also repeat the question.”

Less obvious fixes, like “you can also ask me to wait,” surface later if the fallback is triggered again. These secondary fixes rotate from more to less obvious, and the cycle repeats indefinitely. Ideally, the suggested options would be based on what the user actually said rather than chosen at random.
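To make the re-prompt structure concrete, here is a minimal sketch of the remind, condense, and offer pattern with a rotating list of secondary fixes. It assumes a plain-Python handler; the class name `FallbackReprompter`, the `SECONDARY_FIXES` list, and its ordering are hypothetical illustrations, not the skill’s actual code or configuration.

```python
from itertools import cycle
from typing import Iterable

# Hypothetical secondary fixes, ordered from most to least obvious.
# The order is illustrative, not the skill's actual configuration.
SECONDARY_FIXES = [
    "You can also ask for a new question.",
    "You can also ask me to repeat the question.",
    "You can also ask me to wait.",
]


class FallbackReprompter:
    """Builds repair re-prompts: remind, condense, then offer one fix."""

    def __init__(self, fixes: Iterable[str]) -> None:
        # cycle() goes back to the most obvious fix after the least obvious one.
        self._fixes = cycle(fixes)

    def reprompt(self, question: str) -> str:
        fix = next(self._fixes)
        # 1. Remind: repeat the question at play.
        # 2. Condense: re-ask the original prompt as just "Ready?".
        # 3. Offer: add the next fix in the rotation.
        return f"Again, the question is: {question} Ready? {fix}"


if __name__ == "__main__":
    reprompter = FallbackReprompter(SECONDARY_FIXES)
    question = "Why do you think you're a good fit for the role?"
    for _ in range(4):  # four misses in a row: the fourth wraps around
        print(reprompter.reprompt(question))
```

The first call produces the same re-prompt as the Interview Practice dialogue, and the fourth starts the rotation over. Note that this sketch picks fixes in a fixed order; it does not look at what the user said.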

In fact, a 2019 chatbot repair study by IBM researchers came to this exact conclusion: when things go wrong, the most preferred repair strategy is giving the user options based on what the user just said.
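One simple way to approximate “options based on what the user just said” is to score each fix against the words in the (partially recognized) utterance. The sketch below uses plain keyword overlap as a stand-in; it is not the method used in the IBM study, and the `FIXES` table, its trigger words, and `suggest_fixes` are all hypothetical. A production system would more likely rely on the platform’s NLU or a trained model.

```python
import re
from typing import Dict, List, Set

# Hypothetical fixes, listed from most to least obvious, each paired with
# hypothetical trigger words hinting that the user wants that fix.
FIXES: Dict[str, Set[str]] = {
    "You can ask me to repeat the question.": {"what", "repeat", "again", "huh"},
    "You can ask for a new question.": {"another", "different", "new", "skip"},
    "You can ask me to wait.": {"hold", "minute", "second", "think"},
}


def suggest_fixes(utterance: str, limit: int = 2) -> List[str]:
    """Rank fixes by how many of their trigger words the utterance contains."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    scored = [(len(words & triggers), fix) for fix, triggers in FIXES.items()]
    # sorted() is stable even with reverse=True, so ties (including the
    # all-zero case of pure noise) keep the most-to-least-obvious order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [fix for _, fix in ranked[:limit]]


print(suggest_fixes("hold on, let me think"))
# ['You can ask me to wait.', 'You can ask me to repeat the question.']
print(suggest_fixes("#$&@!"))
# ['You can ask me to repeat the question.', 'You can ask for a new question.']
```

When nothing in the utterance matches, the ranking falls back to the default order, so unrecognizable input still gets the most obvious fixes first.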

Even “problems” such as unexpected, out-of-context, or barge-in requests can open up new vistas of engagement when they are anticipated and designed for. For example, in early testing of my app, I noticed that some users needed a few more seconds to think before starting their answers.

So I included this detour:

Bot: What are your salary expectations? Are you ready to answer this?

User: Wait!

Bot: No problem, I’ll come back in a moment. (10 silent seconds go by.) I’m back. Again, the question is: What are your salary expectations? Ready? Or do you need help?
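On Alexa, a timed silence like this can be expressed with the SSML `<break>` tag, which accepts a `time` attribute of up to 10 seconds per break. The sketch below assumes the detour is built that way; the function name `wait_detour` is hypothetical, and the returned string would still need to be placed in the skill’s SSML output speech by whatever handler framework you use.

```python
from xml.sax.saxutils import escape


def wait_detour(question: str, pause_seconds: int = 10) -> str:
    """Return SSML that acknowledges 'wait', pauses, then re-prompts."""
    # Alexa's SSML <break> tag caps a single pause at 10 seconds.
    if not 0 < pause_seconds <= 10:
        raise ValueError("pause_seconds must be between 1 and 10")
    return (
        "<speak>"
        "No problem, I'll come back in a moment."
        f'<break time="{pause_seconds}s"/>'
        # escape() keeps characters like & and < from breaking the SSML.
        f"I'm back. Again, the question is: {escape(question)} "
        "Ready? Or do you need help?"
        "</speak>"
    )


print(wait_detour("What are your salary expectations?"))
```

Because the silence lives inside a single response, the session stays open and the user hears the reminder without having to say anything.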

If taking a pause doesn’t work, you can always ask for help, which opens up other possible fixes. For example, you may want to re-practice the previous question. Or you may want a related question so you can dive deeper into a specific category. Or maybe you just want to spin up random new questions until you find a good one. These and other options are suggested when you say “help.”
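The help options described above can be modeled as a small function over the session’s question history. This is a minimal sketch assuming a simple in-memory question bank with categories; `Question`, `BANK`, `HELP_PROMPT`, and `help_options` are hypothetical names, and the questions and categories are illustrative.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Question:
    text: str
    category: str


# Hypothetical question bank; the categories are illustrative.
BANK = [
    Question("Why do you think you're a good fit for the role?", "fit"),
    Question("Why do you want to work here?", "fit"),
    Question("What are your salary expectations?", "compensation"),
    Question("Which benefits matter most to you?", "compensation"),
]

HELP_PROMPT = (
    "You can practice the previous question, try a related question, "
    "or get a random new question. Which would you like?"
)


def help_options(history: List[Question], bank: List[Question],
                 rng: random.Random) -> Dict[str, Question]:
    """Map each help option to the question it would lead to next."""
    current = history[-1]
    # Re-practice the question before the current one (or the current one).
    previous = history[-2] if len(history) > 1 else current
    # Assumes the bank holds at least one question besides the current one.
    others = [q for q in bank if q != current]
    related = [q for q in others if q.category == current.category]
    return {
        "previous": previous,
        # Dive deeper into the same category when possible.
        "related": rng.choice(related) if related else rng.choice(others),
        "random": rng.choice(others),
    }


options = help_options([BANK[0], BANK[2]], BANK, random.Random(0))
print(HELP_PROMPT)
print(options["previous"].text)  # Why do you think you're a good fit for the role?
print(options["related"].text)   # Which benefits matter most to you?
```

Each option resolves to a concrete next question, so whichever one the user picks puts them straight back on a happy path.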

The goal is to gracefully redirect the user back to one of several possible fixes, getting them back on the “happy path”, so the user can get done what they came to your app for.

By the way, in my example I used human intuition and good old-fashioned guessing to come up with possible fixes. In a more sophisticated system, fixes would be predictive, educated guesses that draw not only on intuition-based rules from human experts but also on machine learning models that mimic memory and contextual knowledge (see OpenAI’s GPT-3 and Facebook’s BlenderBot 2.0).

Using socially appropriate, repair-focused re-prompts (reminding, paraphrasing, and offering options) whenever intents are misrecognized or missing is an approach I arrived at through trial and error. It is also inspired by James Giangola, a Google conversation design expert.

Steve Jobs had a saying about design that I love, and it applies easily here: “Most people make the mistake of thinking (conversation) design is what it looks (and sounds) like. But it's not just what it looks (sounds) and feels like. (Conversation) design is how it works.”

What matters is not just what words mean but what they do.

Paraphrasing as a tactic reminds me of my account management days years ago, when I had a client with a crazy strong accent. He spoke fast, too, and I never understood what he was saying. One day it dawned on me: asking him what he meant only made things worse, because his explanations were just as hard to understand. And you can only apologize so much.

So instead, I paraphrased what I thought he had said, based on the bits and pieces I could make out (guesses, really), and then asked him to confirm. When this worked, he’d usually say back to me: “THAT’S what I’ve been SAYING the WHOLE TIME!”

Redirecting as a tactic is something skilled parents do. I have a friend who does this masterfully. Her kid would start climbing the table or pick up a bowl to throw, and instead of losing her cool, my friend would suddenly point to the window and say, “Wow, look at that bird with the huge beak!”

This would stop her kid in his tracks. He’d look over at the window, turn to his mom, say “Really?” and forget all about whatever mischief he was about to cause. Buddhists and relationship therapists call this “skillful means.”

Repair strategies are central to making bots (and humans) better and more focused. Why? Because repair is a universal aspect of language itself.

“That’s repair. Ubiquitous and necessary. The human language fixing device. The more you start thinking about repair, the more you start to notice it. Without it, we would be lost in an ocean of intersubjective failures. Good thing language invented it,” says Emma Hofstetter, a linguistics researcher.

The more happy paths, the better.

Conversation design is about building a network of more traveled and less traveled roads that all lead to great destinations.

In this way, effective conversational bots and good conversationalists do and want the same things. They keep things moving. They fix things. They take you places. Above all, they don’t focus on themselves. They make it about you.

Further references

- A video presentation entitled “Applying Built-in Hacks of Conversation to Your Voice UI” by Google conversation design expert James Giangola (the discussion of repair starts at 15:16)

- A video lecture on “Repair” as a central feature of human conversation by linguistics researcher Emma Hofstetter

- A video presentation and paper entitled “Resilient Chatbots: Repair Strategy Preferences for Conversational Breakdowns” by IBM researchers

About the author: linktr.ee/terryjlee

About Prepfit: linktr.ee/prepfit

Thanks to Elaine Anzaldo, Nicolas Arcay Bermejo, Don Irwin, and Sara Pion for reading drafts of this.
