1) Follow-up Intents

A follow-up intent is a child intent nested under a parent intent.

Dialogflow

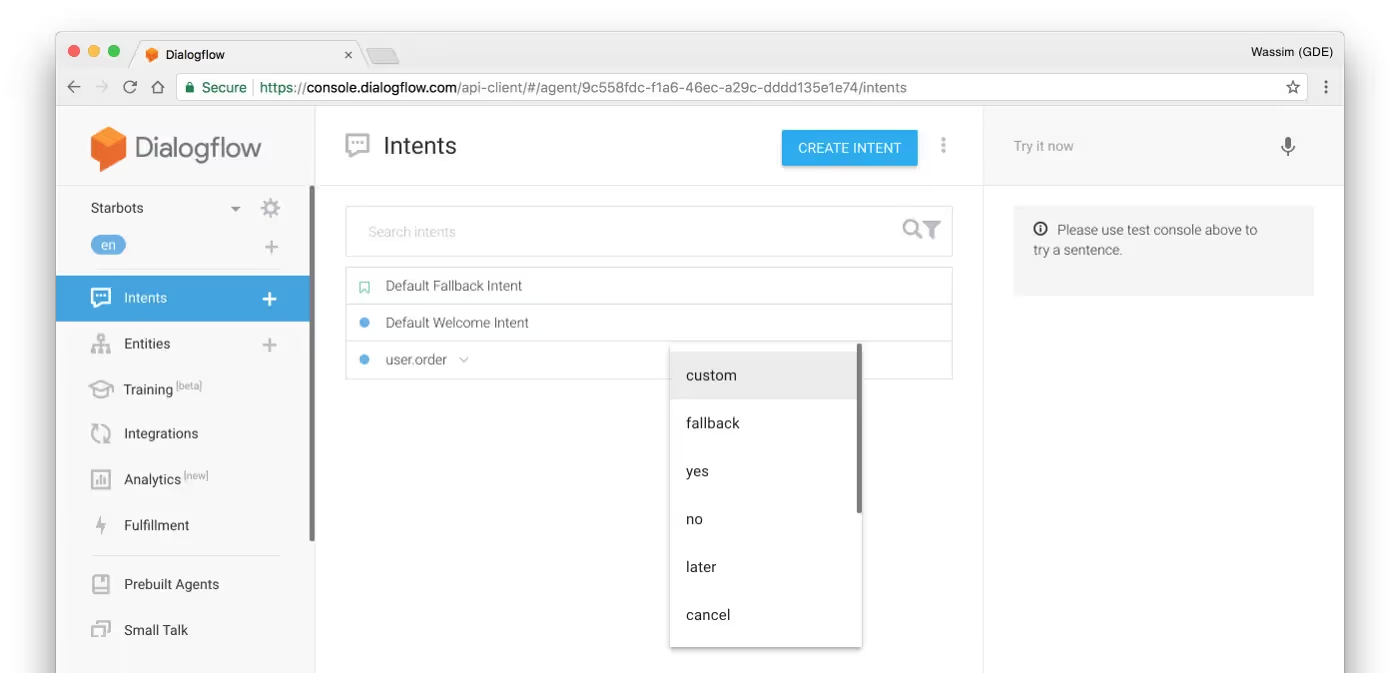

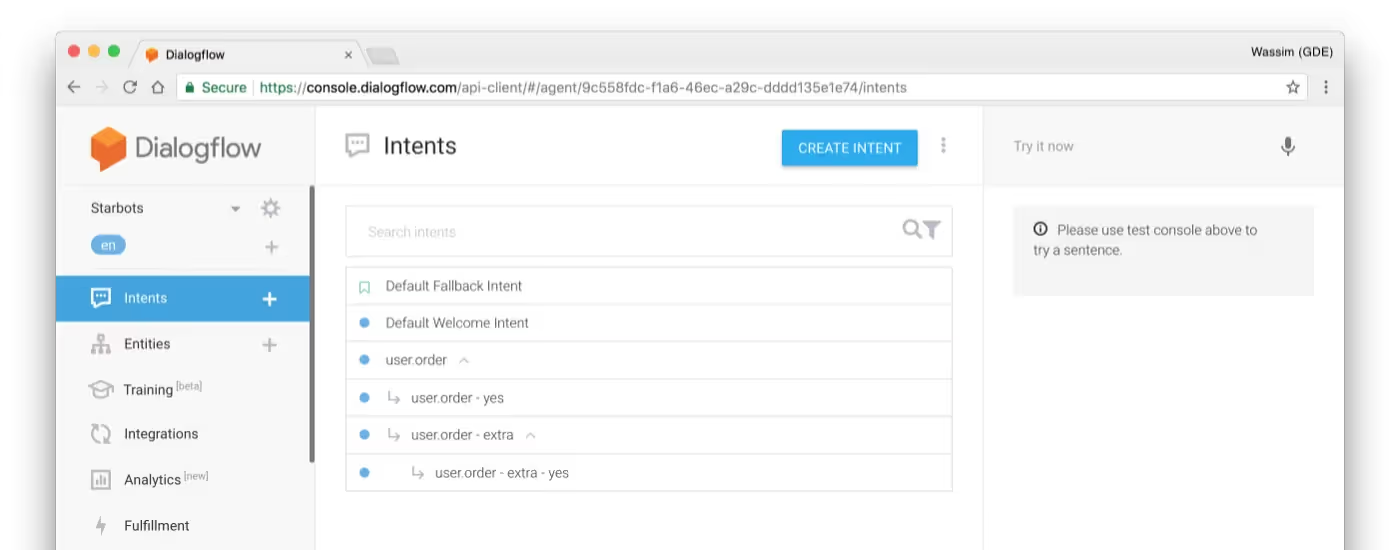

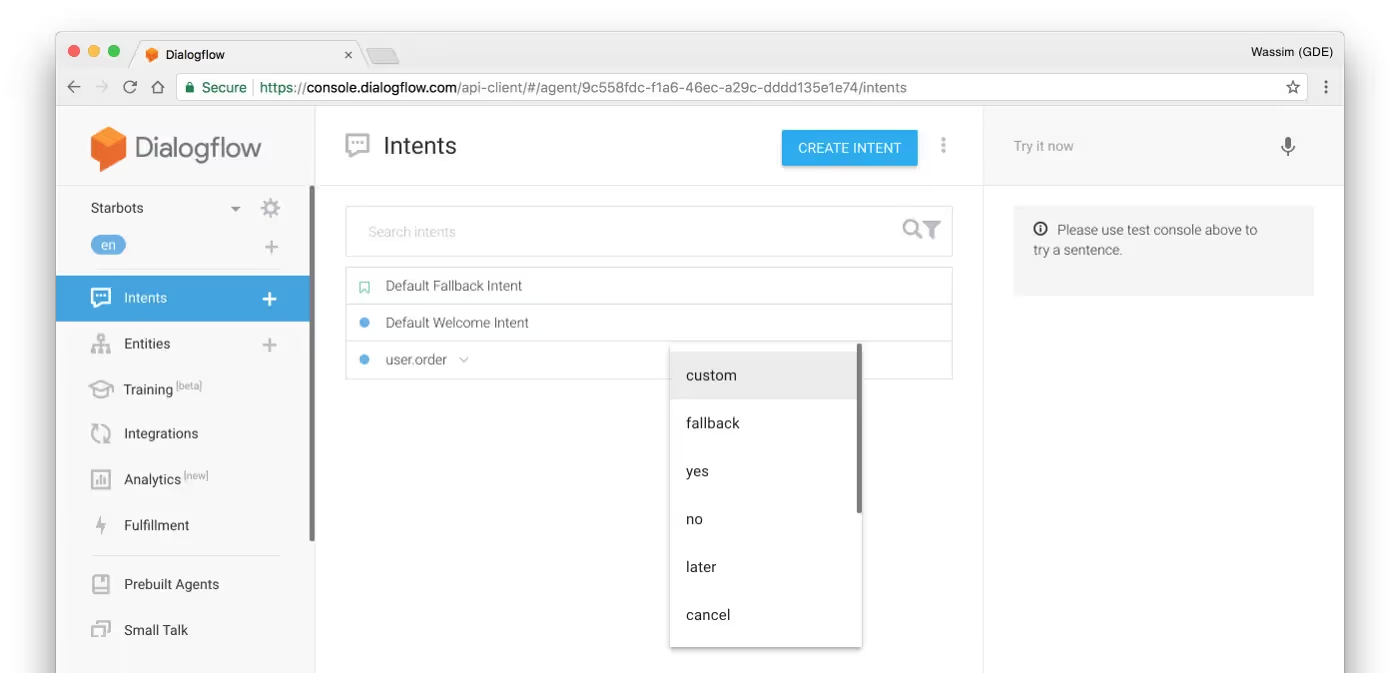

In Dialogflow (a natural language understanding platform), follow-up intents are the standard way to continue a conversation because they “hold” the context of the parent intent. There are many types of follow-up expressions, including repeat, confirm, and cancel.

Let’s design a conversation in Dialogflow to illustrate.

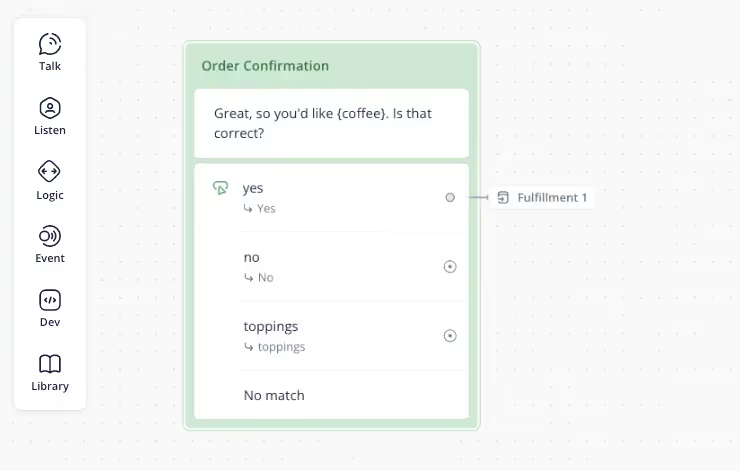

Example 1: The main intent is to order a caramel latte. A follow-up intent to this would be an order confirmation.

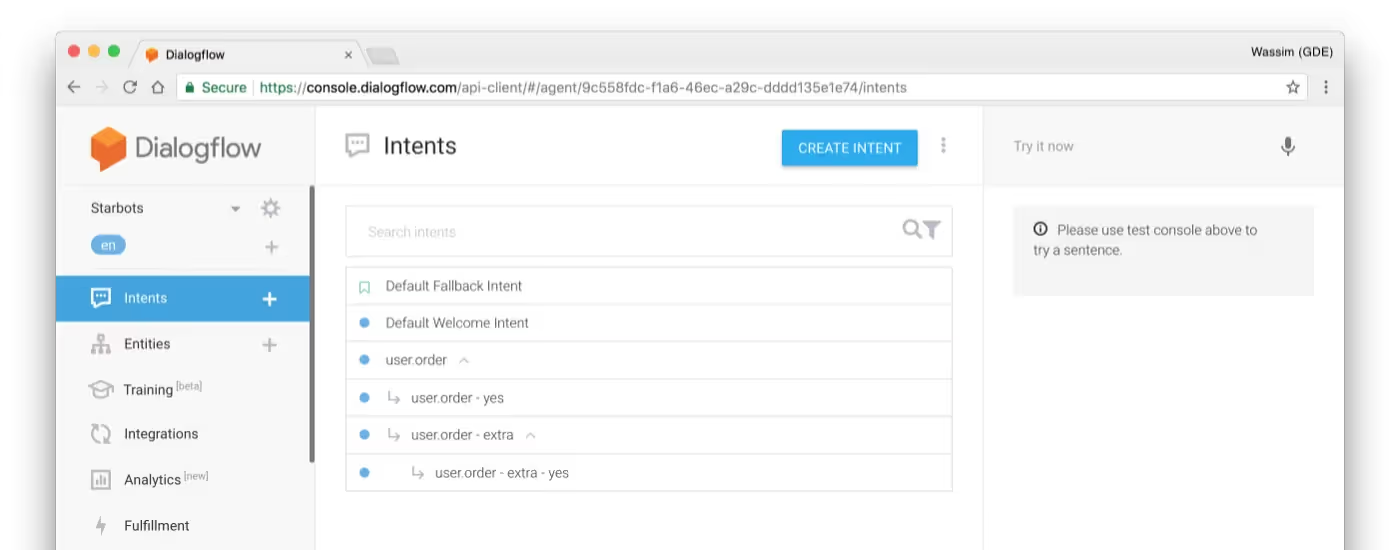

This seems simple enough. However, once we introduce complexity, building with nested context like this becomes cumbersome. For example, the same user wants a caramel latte but, before confirming, adds, “I want to add whipped cream.”

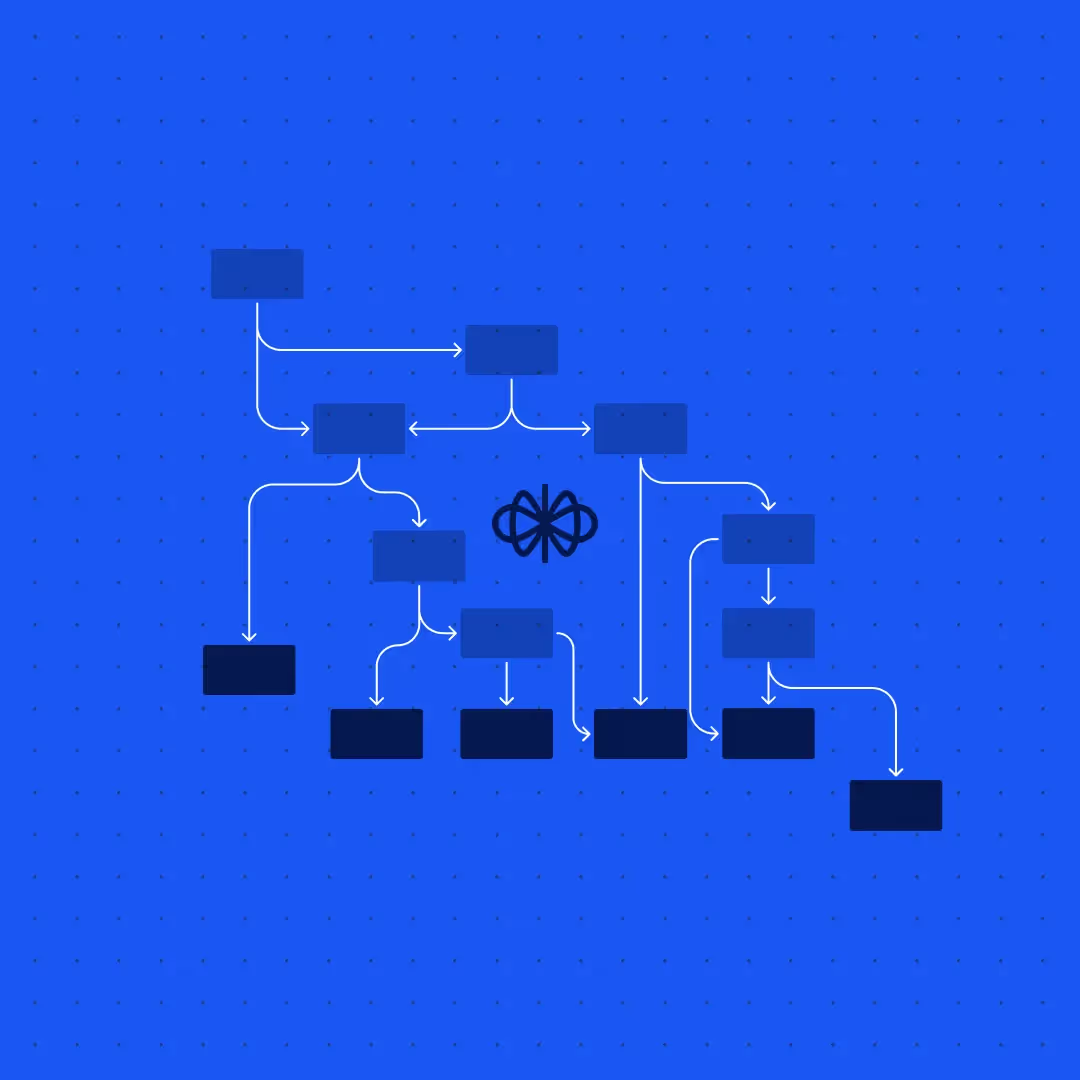

Example 2: Now we have the main intent (order caramel latte), a follow-up intent to confirm, a second follow-up to add whipped cream, and an extra ‘yes’ intent to confirm the whipped cream.

As you can see, the more complex a conversation gets, the more contextual follow-ups conversational AI (CAI) teams have to nest. Follow-up intents seem straightforward at first, but the web of nested intents becomes harder to manage as the conversation grows.

Even more limiting, these nested intents are tied to their parent intent, so they can’t be reused elsewhere.
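To make the nesting concrete, here is a minimal Python sketch of the idea. It is a simplified model for illustration only, not Dialogflow's API: the `Intent` class, the intent and context names, and the `match` and `run` functions are all hypothetical. Each follow-up intent only matches while its parent's context is active, which is why every level ends up with its own 'yes' intent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Intent:
    name: str
    phrases: set[str]
    input_context: str | None = None   # context that must be active to match
    output_context: str | None = None  # context activated after matching


# Hypothetical agent for the caramel latte example.
INTENTS = [
    Intent("order.latte", {"i want a caramel latte"}, None, "order-followup"),
    Intent("order.latte - yes", {"yes"}, "order-followup", None),
    Intent("order.latte - add cream", {"i want to add whipped cream"},
           "order-followup", "cream-followup"),
    Intent("order.latte - add cream - yes", {"yes"}, "cream-followup", None),
]


def match(utterance: str, active_context: str | None) -> Intent | None:
    """Return the first intent whose phrase and input context both match."""
    text = utterance.lower().strip()
    for intent in INTENTS:
        # Top-level intents (no input context) can match at any time.
        context_ok = intent.input_context in (None, active_context)
        if text in intent.phrases and context_ok:
            return intent
    return None


def run(utterances: list[str]) -> list[str]:
    """Replay a conversation and return the names of the matched intents."""
    context = None
    matched = []
    for utterance in utterances:
        intent = match(utterance, context)
        if intent is None:
            matched.append("fallback")
            continue
        matched.append(intent.name)
        context = intent.output_context
    return matched
```

Note that the two 'yes' intents carry identical training phrases and differ only in the context they are tied to. Adding a third option (say, an extra shot) would mean adding yet another follow-up and yet another 'yes'.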

Voiceflow

In Voiceflow, we still care about the context of the conversation, but we don’t need to keep track of follow-up intents in the same way. Every intent in Voiceflow is a parent-level intent, which creates room for reusability.

To account for contextual responses, Voiceflow also offers intent scoping. With a simple toggle, an intent can be limited to a specific topic or made available globally, which keeps design bloat to a minimum.

Designers can reference a reusable 'yes' intent wherever it is needed. The difference in mental models is that instead of creating a net new 'yes' intent for each main intent or node, you reuse a system 'yes' intent that has situational context baked in.

This also applies to context-specific yes intents, which often combine an affirmation (such as “yes”) with a repetition of the main intent (“Yes, I would like to order coffee”). Because intent scoping is selected per node, there is no concern about conflicting utterances.
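The reusable-intent model can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not Voiceflow's API: `YES_PHRASES`, `Node`, `classify`, and `next_node` are hypothetical names. A single 'yes' intent is defined once, and each node decides where that intent leads when it is in scope.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# One shared, top-level "yes" intent, defined once and reused everywhere.
YES_PHRASES = {"yes", "yep", "sure"}


@dataclass
class Node:
    name: str
    # Intents in scope at this node, mapped to the name of the next node.
    scoped_intents: dict = field(default_factory=dict)


# Hypothetical canvas: both confirmation steps reuse the same "yes" intent.
NODES = {
    "confirm_order": Node("confirm_order", {"yes": "place_order"}),
    "confirm_cream": Node("confirm_cream", {"yes": "add_cream"}),
    "place_order": Node("place_order"),
    "add_cream": Node("add_cream"),
}


def classify(utterance: str) -> str | None:
    """Very rough stand-in for an NLU: look only at the first word."""
    words = utterance.lower().replace(",", " ").split()
    first_word = words[0] if words else ""
    # "Yes, I would like to order coffee" resolves to the same intent as "yes".
    return "yes" if first_word in YES_PHRASES else None


def next_node(current: str, utterance: str) -> str:
    """Follow the scoped intent if there is one, otherwise stay put."""
    intent = classify(utterance)
    return NODES[current].scoped_intents.get(intent, current)
```

For example, `next_node("confirm_order", "Yes")` returns `"place_order"`, while the same utterance at `confirm_cream` returns `"add_cream"`. The intent is identical in both cases; only the scope differs.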

This all adds up to less latency, more efficiency, and better reusability for designs.

2) Parameters

Parameters are structured data that can be used to perform logic, a task, or generate a response.

Dialogflow

In Dialogflow, parameters are provided, together with the intent's action value, to your fulfillment webhook request or the API interaction response. Your service can use them, for example, to trigger specific logic.

When an intent is matched at runtime, Dialogflow provides the values extracted from the end-user expression as parameters. Each parameter has an entity type, and that type dictates how the data is extracted.

Parameters can also be referenced from an event. For example, if the event name is alarm and the parameter name is duration, the parameter reference is #alarm.duration.
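As a rough illustration of how a service might consume these values, the Python sketch below reads `queryResult.action` and `queryResult.parameters` from a Dialogflow ES style webhook request body and returns a `fulfillmentText` reply. The action name `order.coffee` and the parameter names `flavor` and `size` are assumptions made up for the coffee example, and the function is a sketch rather than a complete webhook.

```python
def handle_webhook(request_json: dict) -> dict:
    """Build a webhook response from a Dialogflow ES style request body."""
    query_result = request_json.get("queryResult", {})
    action = query_result.get("action", "")
    params = query_result.get("parameters", {})

    if action == "order.coffee":  # hypothetical action name
        # Unfilled parameters arrive empty, so fall back to defaults.
        size = params.get("size") or "regular"
        flavor = params.get("flavor") or "house"
        text = f"One {size} {flavor} latte, coming up."
    else:
        text = "Sorry, I can't help with that yet."

    # fulfillmentText carries the plain-text reply in the webhook response.
    return {"fulfillmentText": text}
```

Called with a request whose parameters are `{"flavor": "caramel", "size": "large"}`, the handler replies with "One large caramel latte, coming up."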

Voiceflow

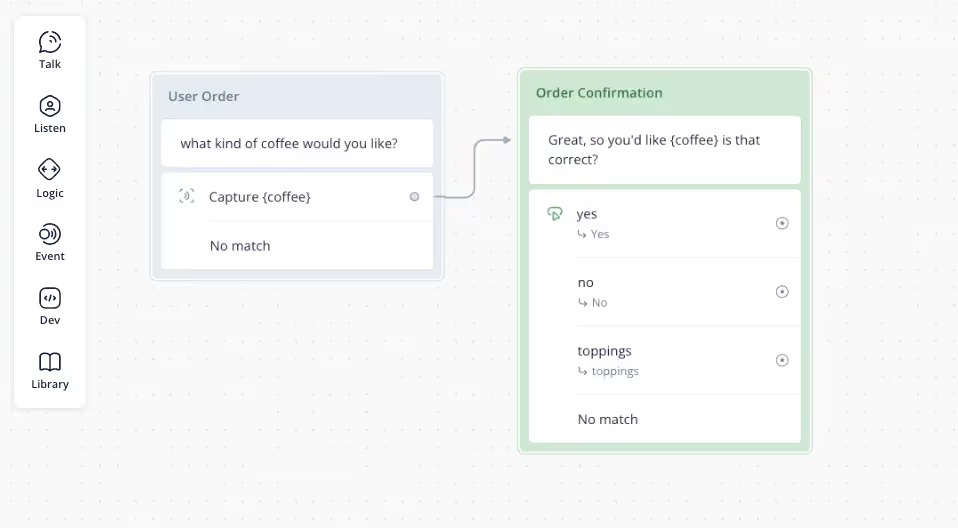

In Voiceflow, you can achieve the same outcome more dynamically through variables. Whether capturing user responses or filling in content from webhooks or API calls, designers can use variables on canvas to quickly expand the assistant’s personalization and helpfulness.

When considering how a Dialogflow ES parameter might be rebuilt within Voiceflow, we need to consider two types: entity and static.

Entity parameters are managed in our NLU Designer today. You’d mark the entity as ‘required’ and then create an entity re-prompt message so that the entity gets filled as part of the conversational flow. This is useful for an {order} entity in which both flavor and size are required to move forward.
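The required-entity behavior described above amounts to simple slot filling. The Python sketch below shows the logic under stated assumptions: the `REQUIRED` table, its re-prompt wording, and the `next_prompt` function are hypothetical and only mirror the flavor and size example.

```python
from typing import Optional

# Hypothetical required entities for {order}, each with its re-prompt message.
REQUIRED = {
    "flavor": "Which flavor would you like?",
    "size": "What size would you like?",
}


def next_prompt(filled: dict) -> Optional[str]:
    """Return the re-prompt for the first missing entity, or None when done."""
    for name, reprompt in REQUIRED.items():
        if not filled.get(name):
            return reprompt
    return None
```

If the user says only "a caramel latte", `next_prompt({"flavor": "caramel"})` returns the size re-prompt. Once both values are captured it returns `None` and the conversation can move forward.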

Static parameters are best handled as variables in Voiceflow. For example, you’d prompt the user for their coffee order and capture the response. That captured response can then be mapped to a variable (e.g. {coffee}) and reused dynamically throughout the conversation, at scale.
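Conceptually, a captured variable works like a placeholder that is filled in wherever it appears in a response. The short Python sketch below illustrates that substitution; the `render` function is a hypothetical stand-in and does not represent how Voiceflow implements variables.

```python
import re


def render(template: str, variables: dict) -> str:
    """Replace each {name} placeholder with its value; keep unknown names as-is."""

    def substitute(match):
        name = match.group(1)
        return str(variables.get(name, match.group(0)))

    return re.sub(r"\{(\w+)\}", substitute, template)


# Capture the user's answer once, then reuse it in any later response.
variables = {"coffee": "caramel latte"}
confirmation = render("Great, one {coffee} coming up.", variables)
upsell = render("Would you like a pastry with your {coffee}?", variables)
```

Because the value lives in one variable, changing the captured order updates every response that references {coffee}.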

3) If Statements

Dialogflow

Building IF statements in Dialogflow is not possible without writing code in the fulfillment.

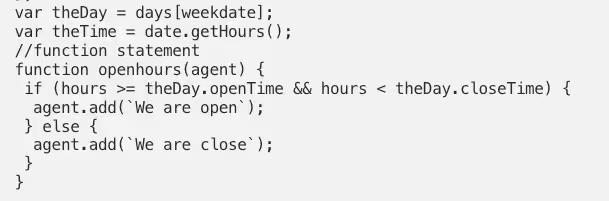

As an example, let’s say you need your Dialogflow Agent to answer differently when your business is open versus closed. If closed, we’d want to surface the next set of open hours and a way to get in touch. If open, we’d want to share the live ordering options.

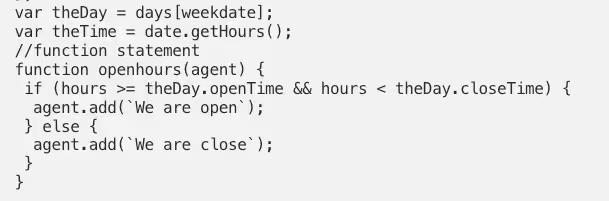

In Dialogflow, this logic is written as a function within the fulfillment section of your chatbot.
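For readers without the screenshot, a function of this kind might look roughly like the Python sketch below. It is not the original code from the article: the opening hours, the contact address, and the function names are assumptions, and it ignores weekends and time zones for brevity.

```python
from datetime import datetime, time

OPEN_AT = time(7, 0)    # hypothetical opening time
CLOSE_AT = time(18, 0)  # hypothetical closing time


def is_open(now: datetime) -> bool:
    """True when the given local time falls inside opening hours."""
    return OPEN_AT <= now.time() < CLOSE_AT


def opening_hours_reply(now: datetime) -> dict:
    """Return a webhook-style response that branches on open versus closed."""
    if is_open(now):
        text = "We're open! Would you like to order for pickup or delivery?"
    else:
        text = (
            f"We're closed right now. We open again at {OPEN_AT:%H:%M}. "
            "In the meantime, you can reach us at hello@example.com."
        )
    return {"fulfillmentText": text}
```

Even in this small form, the branch lives in code, so any change to the hours or the wording means editing and redeploying the fulfillment.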

Similarly, IF statements are regularly used for new vs. returning customer designs. If we continued our coffee shop example in Dialogflow, we would need to write a function that checks whether this user has opened a conversation previously or is new.

The same objective is much faster to achieve using Voiceflow.

Voiceflow

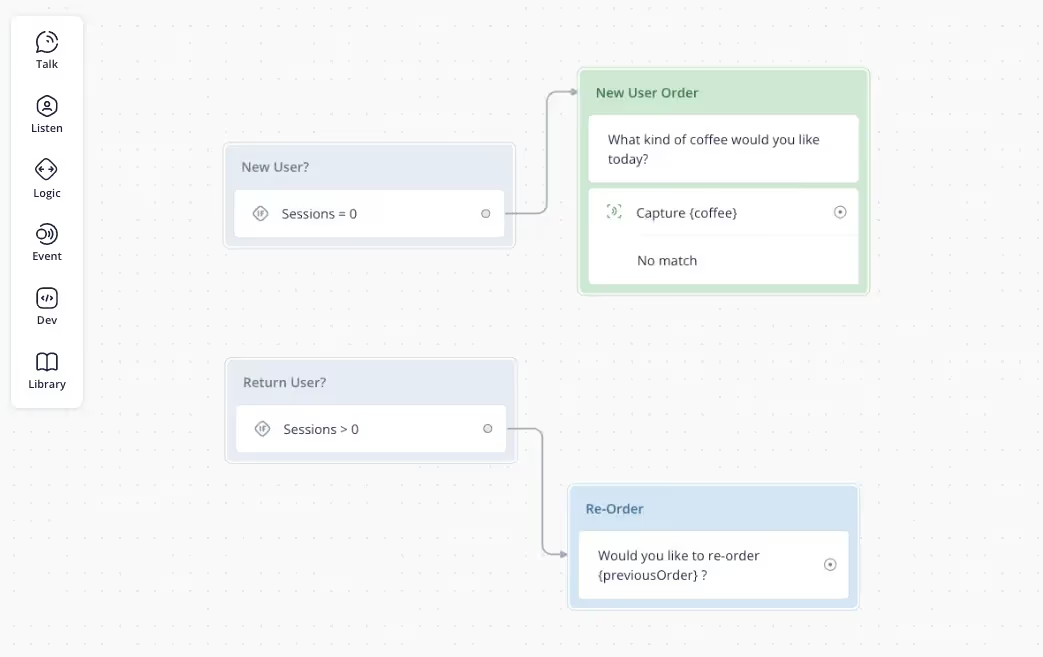

IF statements in Voiceflow are used primarily as routers based on backend data. Each IF statement represents an actual backend logic check that needs to happen during the conversation.

This lets teams build rich prototypes, because the experience is as close to the production version as possible.

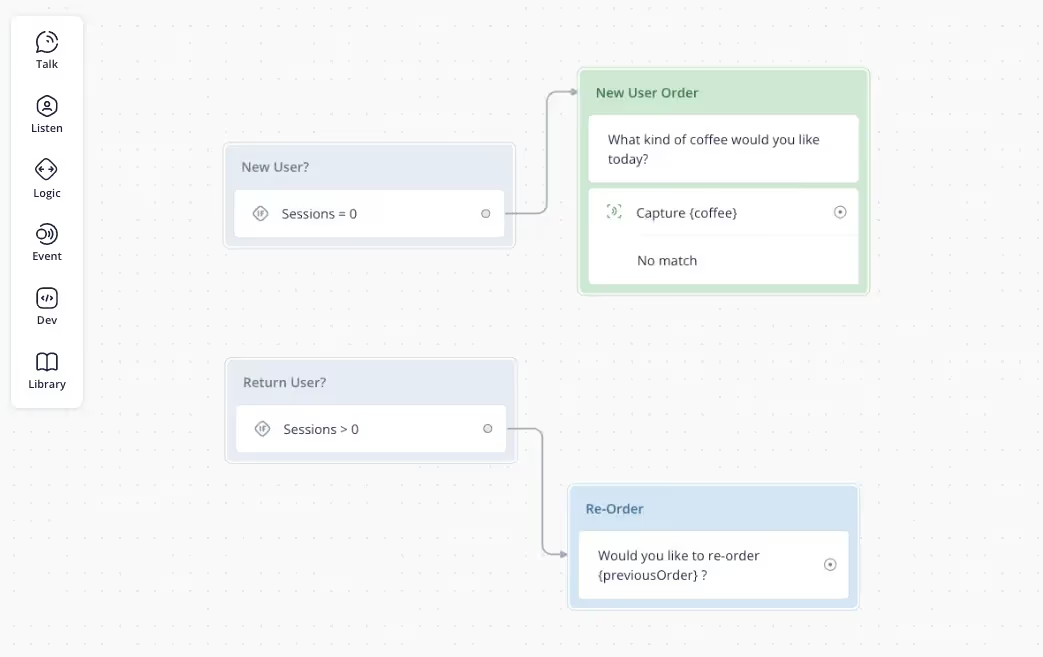

We can take the same IF objective from above and build it in Voiceflow (below).

Here you can see we are using the IF condition block to determine what direction the conversation should go based on the value of the “sessions” variable.

If sessions = 0, the conversation is routed to the new user flow.

If sessions > 0, the conversation is routed to the return user flow.
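Written out as code, the two conditions reduce to a small router. The Python sketch below is only an illustration of the logic the IF block expresses visually; the function name and the flow names are hypothetical.

```python
def route(sessions: int) -> str:
    """Pick the next flow from the number of previous sessions."""
    if sessions == 0:
        return "new_user_flow"
    if sessions > 0:
        return "return_user_flow"
    # A negative count means the variable was set incorrectly upstream.
    raise ValueError(f"sessions must be non-negative, got {sessions}")
```

Here `route(0)` selects the new user flow and any positive count selects the return user flow, matching the two branches on the canvas.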

For both production instances and prototyping purposes, Voiceflow’s method is much more efficient.

Developer resources are not needed to hard-code the design, and designers can work rapidly to create, test, and ship new flows. Better yet, when a project is ready for production, teams can publish directly to live agents (e.g. Dialogflow CX) or hand off artifacts with all the code needed for implementation.

It’s important for conversation designers, data scientists, and the entire conversational AI team to have a workflow that lets them ship contextual assistants at scale.

As NLUs continue to change, it’s even more essential to know that your team’s designs are agnostic, ready to plug into any NLU layer with nothing lost in translation.

Need to strategize your migration and CAI tech stack more? Our team is here to chat.

1) Follow-up Intents

A follow-up intent adds a nested intent inside a parent intent.

Dialogflow

When using Dialogflow (a natural language understanding platform), you’d consider follow-up intents to be normal because they “hold” the context of the parent intent. There are many types of follow-up expressions, including repeat, confirm & cancel.

Let’s design a conversation in Dialogflow to illustrate.

Example 1: Your main intent was to order a caramel latte. A follow-up intent to this would be a secondary order confirmation.

This seems simple enough. However, once we introduce complexity, building with nested context like this becomes quite cumbersome. For example, this same user wants a caramel latte, but before confirming, they add in, “I want to add whipped cream.”

Example 2: Now we have the main intent (order caramel latte), a follow-up intent to confirm, a second follow-up to add whipped cream, and an extra ‘yes’ confirmation intent to confirm the whipped cream.

As you can see, the more complex a conversation gets, the more CAI teams are required to nest contextual follow-ups. While follow-up intents seem straightforward when first designing, the larger and more complex a conversation gets, the harder this web of follow-up intents becomes to manage.

Even more limiting, these nested intents are tied to their parent intent and don’t allow any flexible room for reusability.

Voiceflow

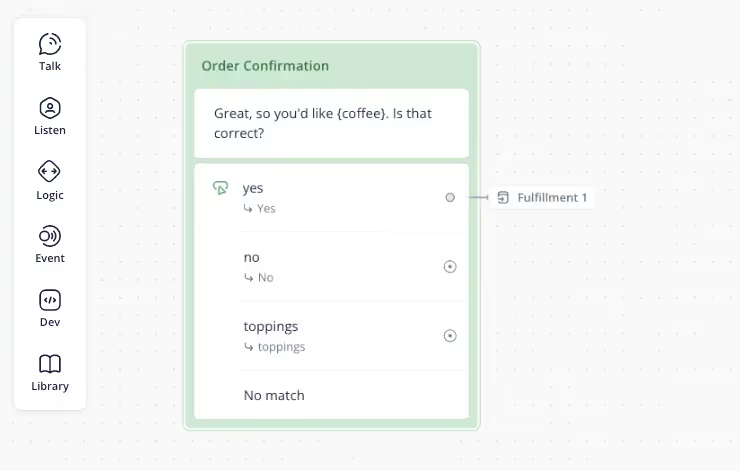

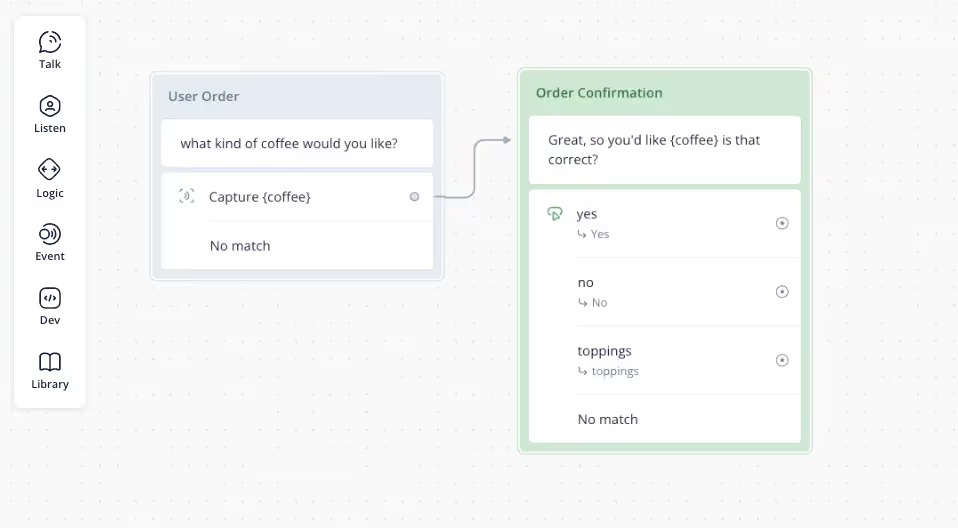

In Voiceflow, we still care about the context of the conversation, but we don’t need to keep track of follow-up intents in the same way. Every intent in Voiceflow is a parent level intent, which creates room for reusability.

In order to account for contextual responses, Voiceflow also offers intent scoping. With a simple toggle, certain intents can only be accessible in a certain topic or globally available. It’s simple to reduce design bloat to a minimum.

Designers have the ability to reference a reusable 'yes' intent wherever necessary and without having to create a net new 'yes' intent per main intent or node.

The difference in mental models here is that instead of creating a net new yes intent for each main intent, one would re-use a system yes intent that has situational context baked in.

This applies to context specific yes intents that often have both an affirmation (such as “yes”) and a repetition of the main intent (”Yes, I would like to order coffee”). As you have the ability to select intent scoping based on each node, there is no concern about conflicting utterances.

This all adds up to less latency, more efficiency and better reusability for designs.

2) Parameters

Parameters are structured data that can be used to perform logic, a task, or generate a response.

Dialogflow

The concept of parameters in Dialogflow exists in order to provide the action value to your fulfillment web hook request or the API interaction response. It can be used, in one example, to trigger specific logic in your service.

When an intent is matched at runtime, Dialogflow will give you the extracted value from the end user expression as a parameter. Each parameter has an entity type and that type dictates how the data is extracted.

Parameters can also be used to reference an event. For example, if the parameter name is duration, the event name might be alarm.

Voiceflow

In Voiceflow, you can achieve the same outcome much more dynamically through variables. Whether capturing user responses or dynamically fulfilling content from web hooks or API calls, designers can use variables on canvas to quickly expand the assistant’s personalization and helpfulness.

When considering how a Dialogflow ES parameter might be rebuilt within Voiceflow, we need to consider the two types: entity & static.

Entity parameters are managed in our NLU Designer today. You’d set the Entity as ‘required’ and then create an entity re-prompt message in order to fill that entity as part of the conversational flow. This function would be useful for an {order} entity in which both flavor and size were required to move forward.

Static parameters are best utilized as variables in Voiceflow. For example, you’d prompt the user for their coffee order and capture the user’s response. That captured response can then be mapped to a variable (e.g. {coffee}) and dynamically utilized throughout the conversation - at scale.

3) If Statements

Dialogflow

Building IF statements in Dialogflow is not possible without writing code in the fulfillment.

As an example, let’s say you need your Dialogflow Agent to answer differently when your business is open versus closed. If closed, we’d want to surface the next set of open hours and a way to get in touch. If open, we’d want to share the live ordering options.

Below is an example of what this code block would look like as a function within the fulfillment section of your chatbot.

Similarly, IF statements are regularly used for new vs. returning customer designs. If we continued our coffees shop example in Dialogflow, we would need to write a function that checks if this user has opened a conversation previously or if they are new.

The same objective is much faster to achieve using Voiceflow.

Voiceflow

IF statements in Voiceflow are used primarily as routers based on backend data. Each IF statement represents actual backend logic checks that need to happen throughout the conversation.

This feature allows teams to build rich prototypes as the experience is as close to the production version as possible.

We can take the same IF objective from above and build it in Voiceflow (below).

Here you can see we are using the IF condition block to determine what direction the conversation should go based on the value of the “sessions” variable.

If sessions = 0, then the conversation will be routed to the new user flow.

If sessions is > 0, then the conversation will be routed to the return user flow.

For both production instances and prototyping purposes, Voiceflow’s method is much more efficient.

Developer resources are not needed to hard code the design, while designers can work rapidly to create, test, and ship new flows. Better yet, when a project is ready for production, teams can publish directly to live agents (e.g. Dialogflow CX) or hand-off artifacts with all necessary code needed for implementation.

It’s important for conversation designers, data scientists, and the entire conversational AI team to have a workflow that lets them ship contextual assistants at scale.

As NLUs will continue to change, it’s even more essential to know that your team’s designs are agnostic - ready to plug into any NLU layer with nothing lost in translation.

Need to strategize your migration and CAI tech stack more? Our team is here to chat.

.svg)