1. Replace language, graphics, and links

Sonia: The first thing I'd like to talk about is probably the most obvious, but one that is so important. It's replacing language, graphics, and links.

With language, it's looking at small words where you might be writing for your chatbot something like, "for more information, click here."

[However], Alexa can't click, so it's imperative to make sure those words (click, see, view) are changed to words like hear and say. This makes the experience more real. If these changes aren't made, your experience can sound very strange.
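For developers, one rough way to catch these words before they reach a voice script is to scan the chatbot copy for them. The sketch below is only an illustration: the word list and the suggested replacements are assumptions based on the click/see/view example, and `flag_screen_words` is a hypothetical helper, not part of any Alexa tooling.

```python
import re

# Illustrative mapping only: screen-oriented words and a voice-friendly
# alternative to consider. Extend or change it for your own copy.
SCREEN_WORDS = {"click": "say", "see": "hear", "view": "hear"}


def flag_screen_words(copy: str) -> list:
    """Return (word, suggestion) pairs for screen-only words found in the copy."""
    found = []
    # Match whole words so that "preview" is not flagged because of "view".
    for match in re.finditer(r"[A-Za-z']+", copy):
        word = match.group().lower()
        if word in SCREEN_WORDS:
            found.append((word, SCREEN_WORDS[word]))
    return found


print(flag_screen_words("For more information, click here."))
```

A flagged word usually needs a human rewrite rather than a blind swap, which is why this sketch reports matches instead of replacing them.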

Another thing is the graphics. You might have an image inserted in your chatbot that helps illustrate something. For example, you're asking a chatbot about a person; maybe it shows you a picture of them so you can see who that person is.

So you're going to have to find and adapt ways to make sure those images are removed [while] finding something else to illustrate your point. This may involve rewording what you already had [so] you're making sense for what the user's asking for.

And [finally] links. We often hyperlink to different pages and external or additional resources. With voice, we need to make sure we are not doing that anymore. I'll give you an example of how we did this.

I built out the first HIPAA-compliant voice app through Alexa for Anthem, a healthcare giant in the United States. One of the things we needed to do was talk about deductibles and let people find out their deductible limits by asking Alexa.

In a chat experience, this would be something where, at the end of the conversation, we might say, "go log into your account to be able to see more details about your deductible limits." Now, because it's all voice, we want the experience to be all-inclusive. We want to get [the user] from beginning to end and through all the information bits without referring them elsewhere. Why? Because that ends up causing frustration.

This is a voice experience, and so they might not be in front of a laptop. They might not be able to access a device. They might be driving. So we wanted to be able to provide [the user] with as much information as possible while [factoring in] brevity.

Let's go through this example:

"Hey Alexa, I want to know my deductible."

She'll say, "Your in-network deductible limit is $10,000. So far this year, you've accumulated $1,000 towards reaching it. Your remaining deductible balance is $9,000."

Then she's going to ask if you want more information.

"Would you like to know about your out-of-network amounts?"

So [Alexa] is going to try and help you get everything you need to know in this experience:

"Your out-of-network deductible limit is $1,000. A quick tip: in-network providers can help you save money. What else can I help you with today?"

Now that's pretty much the basis for what people are going to ask for. And that's what we did for Anthem — we really built out [this experience] so people could get all that information without having to log into their accounts.
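As a minimal sketch of what "all-inclusive" can mean in code, the function below builds one complete spoken answer from account data instead of sending the user to a website. The function name, its parameters, and the dollar amounts in the usage line are made up for illustration; this is not Anthem's implementation.

```python
def deductible_summary(limit: int, accumulated: int) -> str:
    """Build a self-contained spoken answer about a deductible."""
    # Remaining balance is the limit minus what has been paid, never below zero.
    remaining = max(limit - accumulated, 0)
    return (
        f"Your in-network deductible limit is ${limit:,}. "
        f"So far this year, you've accumulated ${accumulated:,} towards reaching it. "
        f"Your remaining deductible balance is ${remaining:,}. "
        "Would you like to know about your out-of-network amounts?"
    )


# Hypothetical amounts, chosen only to show the arithmetic.
print(deductible_summary(limit=2000, accumulated=500))
```

The closing question keeps the user inside the voice experience rather than ending with a pointer to another device.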

2. Add confirmations

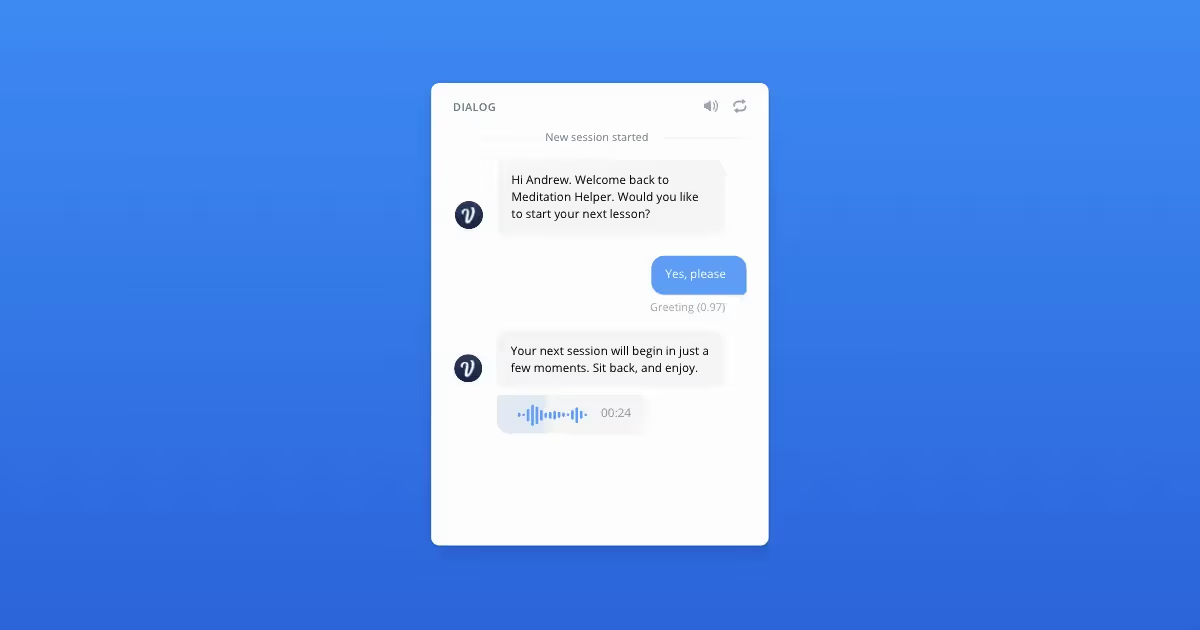

Sonia: This is really key for voice conversations because when a person is talking to Alexa, Google, Cortana, or Bixby, they might not remember previous conversations [since] there is no trace of them.

They can't scroll back up like on a chatbot to see what they might've said or what they were [previously] talking about. For example, if you're booking a hotel via a chatbot and you're scrolling through [the conversation], you can see the city you chose [and] that you booked a suite. You can trace your details.

You can't do that with voice. We don't want to force the user to use their memory.

Here's an example:

Working with Anthem, we wanted to be able to e-mail people their ID cards as quickly as possible to their e-mail address on file. The text below is what would have happened if this was just a chatbot experience.

"You're all set! I sent your ID card to the e-mail address starting with [XX] and ending with @anthem.com"

This is simple, super easy, and provides a great user experience.

Now with voice, it gets a little tricky. There's so much room for misunderstanding and misinterpretation of what a person could say. The error rate is higher because, with text, you know what you're typing. With voice input, however, what is heard could be different from what was said. We always want to confirm that the task we're trying to complete is the task the user wants completed.

With voice, the experience might go like this:

User: "Hey Alexa, I want my ID card."

Alexa: "OK, you want to get your ID card. Is that right?"

User: "Yes."

Alexa: "One moment please..."

Adding a confirmation to this voice experience is crucial and will help make sure you are completing the task that the user needs. It's great to add these questions when converting from a chatbot to a voice app.

Finally, there might be a little lag in time that we want to consider as well, [and so we added] "one moment please" to acknowledge that.
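The confirmation step can be sketched as two small functions: one that asks the question, and one that decides what to say after the user replies. The word lists, function names, and the fallback wording are assumptions for illustration. A real skill would normally rely on the platform's own confirmation and yes/no handling rather than string matching.

```python
# Hypothetical reply vocabularies; a production skill would use the
# platform's built-in yes/no understanding instead.
YES_REPLIES = {"yes", "yeah", "yep", "correct", "that's right"}
NO_REPLIES = {"no", "nope", "that's wrong"}


def confirm_prompt(task: str) -> str:
    """Repeat the task back so the user does not have to rely on memory."""
    return f"OK, you want to {task}. Is that right?"


def handle_confirmation(reply: str, task: str) -> str:
    """Decide what to say after the user answers the confirmation question."""
    normalized = reply.strip().lower().rstrip(".!")
    if normalized in YES_REPLIES:
        # Acknowledge the lag before the task completes.
        return "One moment please..."
    if normalized in NO_REPLIES:
        return "Sorry about that. What can I help you with?"
    # Anything else: ask again rather than guess.
    return confirm_prompt(task)


print(confirm_prompt("get your ID card"))
print(handle_confirmation("Yes.", "get your ID card"))
```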

3. Ask for more details

Sonia: Number three is to ask for more details. I found an interesting stat: the time it takes a person to hear something vs. read it is quite different. Most podcasters and audiobook performers speak between 150 and 160 words per minute. That's roughly the optimal pace for most listeners.

Reading-wise, a skilled reader can read 300 to 400 words per minute. That's about twice as fast or more, so it's going to start to sound like a run-on sentence if you use the exact same language from your chatbot in your voice experience.

Consider shortening some of those bits of information and adding the question, "do you want to learn more about it?"

Let's look at this example:

I helped design HereAfter AI's Alexa skill — [specifically] the conversation that was part of it.

This was a platform where people could record their life stories and share them with their family and loved ones. Those stories, however, could be long. Sometimes people would go on for six to seven minutes, which could be boring to the person listening [and might lead them] to exit the conversation sooner.

To make this the best user experience possible, we broke those personal stories down. We would do a tidbit of it: a nice nugget that made sense and had a beginning, middle, and end. We would then say one of the following:

1. "Would you like to hear more about my life?"

2. "Did you enjoy hearing about that?"

3. "I'd love to know what you thought about that — what's your reaction? Tell me in a nutshell."

With the above examples, you want to try and get more feedback [from the user]. This is also a good opportunity if you're testing out your new skill to get some insight into what users think of your skill or a particular part of it.

Another example of this is when people ask a skill for a definition.

"Who is Alexander Hamilton?"

We can get a lot of information about Alexander Hamilton off of Wikipedia, but that would be a very long statement for Alexa to say. We want to break it down, give a quick definition, and then ask, "would you like to learn more?" This will make your voice experience excellent [as] it's very different from a chatbot experience where you might be sharing links, images, and more written words.
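One way to picture the "break it down, then ask" idea is a helper that speaks only the first few sentences and offers the rest on request. This is a sketch under assumptions: the 40-word budget, the sentence splitting rule, and the function names are all illustrative choices, and the 155 words per minute figure is simply the midpoint of the speaking range quoted earlier.

```python
import re
from typing import Tuple

SPEAKING_WPM = 155  # midpoint of the 150 to 160 words per minute range


def speaking_seconds(text: str, wpm: int = SPEAKING_WPM) -> float:
    """Estimate how long the text takes to say aloud."""
    return len(text.split()) / wpm * 60


def first_chunk(text: str, max_words: int = 40) -> Tuple[str, str]:
    """Split text into (what to say now, what to hold back), on sentence boundaries."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    spoken, count = [], 0
    for i, sentence in enumerate(sentences):
        words = len(sentence.split())
        # Always speak at least one sentence, then stop at the word budget.
        if spoken and count + words > max_words:
            return " ".join(spoken), " ".join(sentences[i:])
        spoken.append(sentence)
        count += words
    return " ".join(spoken), ""


def voice_reply(text: str, max_words: int = 40) -> str:
    """Say the first chunk and offer more only if something was held back."""
    chunk, rest = first_chunk(text, max_words)
    if rest:
        return chunk + " Would you like to learn more?"
    return chunk
```

The held-back text returned by `first_chunk` can be stored in the session and spoken if the user says yes.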

4. Account for communication lapses in time

Sonia: This is a fun and tricky one and probably even more relevant if any developers are reading this. Now, you might've noticed that when we're building a Google Action or an Alexa Skill, sometimes the processing speed for the API you're calling might be a bit long.

At Anthem, for example, there were times — like when we had to refill a prescription — where we were calling on APIs that caused over a 10-second delay. This meant Alexa couldn't say anything for that period or even register what to say next.

Cases like this are very normal for a text conversation. We're used to seeing those bubbles pop up when we're texting our friends or when we're talking to chatbots. We see those dot dot dots. That's a normal thing to experience as a user — a moment of lapse in time.

But when you're talking, it's bizarre. If you ask a question and somebody in front of you stood in silence for 10 seconds, that would be a bit strange. We're not used to that in voice — and so we need to make up for that lost time while that API is being called.

There's a very simple fix to this.

Let's say it will take 10 seconds to get something [from an API] and there's no way to shorten it. You can't reduce the number of API calls that need to be made [and] you can't restructure the conversation in a way where they don't have to be called at the same time.

You can prompt the user with, "one moment, please..."

This is a very simple thing you can do to tell your users that you heard what they said and are currently working on it. It reassures them that [their request] is being handled. It's just three words that let people know you're there for them and that you are indeed an assistant trying to be helpful.
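For developers, the pattern can be sketched as: start the slow call, wait briefly, and if it has not finished, say the filler line before delivering the result. The sketch below uses Python's `asyncio` and stand-in callables. `answer_with_filler`, `slow_call`, `speak`, and the one-second patience value are all assumptions for illustration, not a real Alexa or Google API.

```python
import asyncio


async def answer_with_filler(slow_call, speak, patience_seconds=1.0):
    """Run a slow call; if it takes too long, acknowledge the user first.

    slow_call: an async function with no arguments that returns the final text.
    speak: any callable that delivers one line of speech to the user.
    """
    task = asyncio.ensure_future(slow_call())
    # Wait a short while for the result before filling the silence.
    done, _pending = await asyncio.wait({task}, timeout=patience_seconds)
    if not done:
        speak("One moment, please...")
    speak(await task)


async def fake_refill_api():
    # Stand-in for a slow backend call.
    await asyncio.sleep(0.2)
    return "Your refill has been requested."


asyncio.run(answer_with_filler(fake_refill_api, print, patience_seconds=0.05))
```

If the call returns within the patience window, the filler line is skipped, so fast answers are not padded.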

5. Stock up on utterances

Sonia: I always pay attention to utterances.

I like to put as much attention on them as I do in creating the conversation because all those beautiful intents that I have created a script for are going nowhere if people can't even pull them out. So stock up on utterances — especially for voice. There's just so much variation in the way people say things.

When people text — especially with a virtual assistant on a website — they can often be very brief, making it easier to map what intent they're looking for. With voice, people tend to speak longer and more elaborately.

And so it's one of those funny things where even though we're trying to make Alexa statements shorter, the user response is longer than it would be in text, and so we need to consider that. So add as many utterances (that make sense) to your intent as possible.

A good place to go if you're starting out is utterancegenerator.com. I wouldn't recommend this as your only course of action because I think it's important to go through and drop the ones that don't make sense, but you can create a list of utterances based on the different words you have. This will satisfy your users because they can get where they need to go.
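The idea of building a list of utterances from the different words you have can be sketched as a simple combination of phrase parts. This is not how any particular generator tool works. The function and the sample phrases are hypothetical, and the output still needs a manual pass to drop combinations that don't make sense.

```python
from itertools import product


def generate_utterances(openers, verbs, objects):
    """Combine every opener, verb, and object into a candidate utterance."""
    return [
        # Skip empty parts so an empty opener does not leave a leading space.
        " ".join(part for part in combo if part)
        for combo in product(openers, verbs, objects)
    ]


candidates = generate_utterances(
    openers=["", "please"],
    verbs=["send", "email"],
    objects=["my ID card"],
)
for utterance in candidates:
    print(utterance)
```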

6. Add a variety of error messages (Bonus!)

Sonia: This goes with the point we previously discussed. There is so much room for error in what is heard vs. what is typed. The likelihood of an error message being needed is much higher [with voice]. [With error messages,] we want to be diverse in that we want to refrain from saying the same thing every time.

Using Alexa as an example, when she doesn't understand something, she has at least three variations:

Example 1: "Sorry, I didn't get that"

Example 2: "I didn't hear that, please try that again"

Example 3: "I didn't catch that, please say that again"

You can see it doesn't require that much innovation and diversity, as the last two examples above are almost the same. The only differences between the two are the words hear/catch and try/say, yet the two examples feel different.

When I hear the third example after the second one, I don't feel as if Alexa is repeating herself, so you don't need to be crazy innovative. You simply need to have a strong and lengthy list of different error messages for what you're hoping to handle.

Another example is when Alexa doesn't know the intent. It's just not programmed into her.

Let's say somebody is asking for a cheeseburger on an Alexa skill about Renaissance history. Alexa is not going to know what the user is asking for.

Example 1: "Sorry, I don't know that"

Example 2: "Sorry, I'm not sure"

Example 3: "Sorry, I don't know that one"

Example 4: "Hmmm, I don't know that"

The above are examples of ways to say, "I don't know what you're talking about." Keep these diverse and interesting. That's how we talk and converse as humans. We want to make sure we don't sound robotic when we're designing these voice experiences.
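A small sketch of how that variety might be handled in code: keep a list of messages and pick one at random, skipping whichever was said last so the same line is never repeated back to back. The class name and its behavior are illustrative assumptions, not a feature of any voice platform.

```python
import random


class RepromptPicker:
    """Pick a message at random without repeating the previous one."""

    def __init__(self, messages, rng=None):
        if len(messages) < 2:
            raise ValueError("Provide at least two messages to allow variety.")
        self._messages = list(messages)
        self._last = None
        self._rng = rng or random.Random()

    def next(self) -> str:
        # Exclude the last message so consecutive prompts always differ.
        choices = [m for m in self._messages if m != self._last]
        self._last = self._rng.choice(choices)
        return self._last


picker = RepromptPicker([
    "Sorry, I don't know that.",
    "Sorry, I'm not sure.",
    "Sorry, I don't know that one.",
    "Hmmm, I don't know that.",
])
print(picker.next())
print(picker.next())
```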

