Stage one: Build a blueprint

This stage is all about translating your vision into a tangible plan. If you want to build an agent that guides users through refund processing or answers FAQs without a human, that’s your vision. With the help of stakeholders, product owners, and natural language understanding (NLU) designers, you can build a blueprint: a tactical plan that marries potential interactions with the expertise necessary to bring them to fruition. Here’s how to put together your collaborative blueprint.

- Gather requirements: First and foremost, you’ll need to ground your design in concrete requirements. Take your time gathering each stakeholder’s input, and use it to set clear goals, metrics, and constraints. Existing training data is a great place to start. If you don’t have enough, consider synthesizing some with a language model.

- Create a brief: Distill your requirements into a concise brief, capturing the essence of the vision, objectives, target audience, and scope.

- Hold a briefing session: Designers and stakeholders should come together to align on the brief's contents, ensuring everyone is on the same page before diving into the design.

- Draft the design: Using Voiceflow, designers map out the conversation's initial flow, zeroing in on the ideal user journey.

- Conduct user testing: Put the prototype to the test. Find real-world users to help identify pain points and ambiguities. This feedback will help you further refine the experience.

- Iterate: The feedback you’ve received should guide your iterations, allowing you to enhance the prototype’s logic and the natural ebb and flow of the conversation.

- Get team and stakeholder signoff: Before the development phase begins, internal teams and stakeholders need to review the prototype. This ensures it aligns with the overall vision and is ready for the next stage.

Also, many designers opt to create static sample dialogs between the briefing and design stages. My advice? That’s a relic of the past. Modern conversation design tools (like Voiceflow) allow you to leap straight into interactive prototypes, streamlining the process and facilitating richer feedback and more agile iterations.

Stage two: Fill in the details

This phase bridges the gap between the vision you built with your blueprint and the experience you’ll execute IRL. It requires meticulous collaboration between designers and developers to craft each conversational turn, and the result needs to mirror the overarching objectives while leaving room for real-world complexities.

Here are the 8 steps you need to follow to make your initial vision a reality:

- Training and NLU design: The design process needs a strong data foundation, which informs the AI's understanding of user interactions. This is where NLU comes into play, by focusing on enhancing the AI's ability to discern user intent and context through intent discovery, utterance generation, and model testing.

- Long tail design - dialog and response design: After gleaning insights from the AI's training, designers craft the conversation's structure. Every twist, turn, and potential edge case is mapped out to offer a seamless user experience. This step involves two critical disciplines:

Dialog design: At this stage, the designer manages the logic and flow of the conversation, ensuring users are guided smoothly from start to finish.

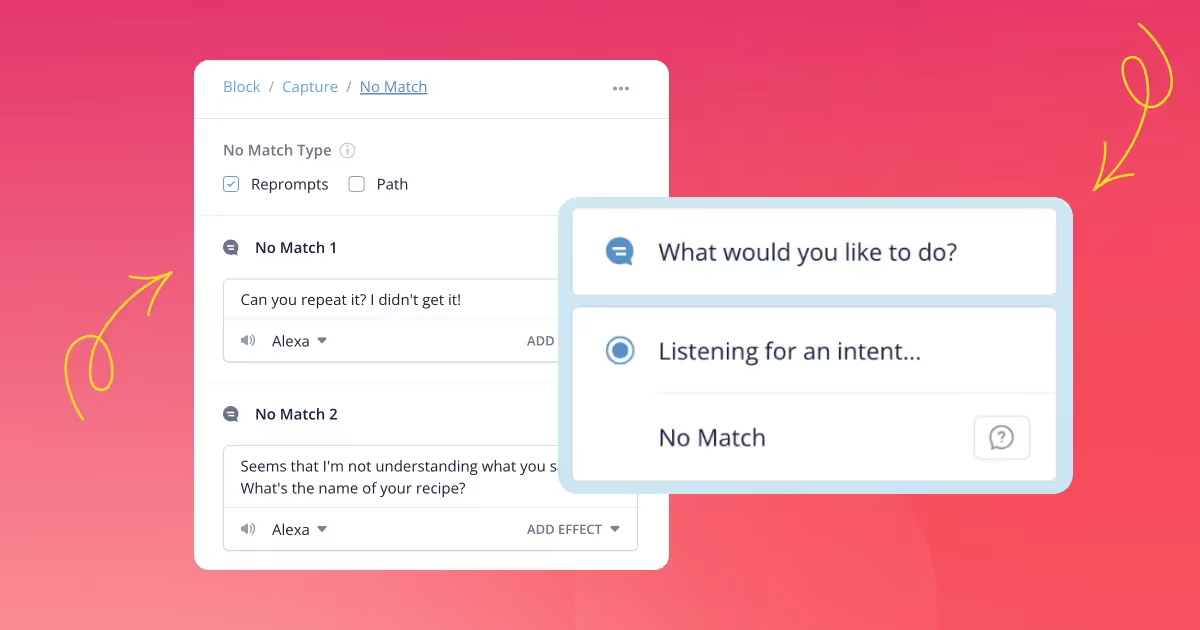

Response design: This discipline shapes the voice and tone of the AI. Here, designers craft responses that are not only informative but also align with the brand's persona and the platform's visual elements. Response designers also work closely with dialog designers in this stage to ensure the language used is clear and easy to understand.

- Error handling - response design: Designers also need to proactively create pathways for potential uncertainties, guiding users back to happy paths and ensuring the agent can handle unexpected turns in the conversation. Response designers play a key role in crafting these fallback responses that help glean the required information from users.

- Development: Engineers breathe life into the design, ensuring that the backend integrates seamlessly with the conversational flow.

- QA testing: Thorough testing identifies any technical or conversational challenges, paving the way for refinements that elevate user satisfaction.

- Refinement: Continuous improvement is key. Based on internal feedback and testing insights, the design undergoes further optimization to ensure it remains aligned with user needs.

- Final test: A comprehensive review, involving all stakeholders, ensures the design is primed for launch.

- Launch: Unveiling the design marks a beginning, not an end. Regular monitoring and iterative enhancements ensure the AI remains relevant, efficient, and user-centric.
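To make the error-handling step above more concrete, here is a minimal Python sketch of one common fallback pattern: accept an intent only when the model is confident enough, reprompt with progressively more guidance when it is not, and hand off after repeated failures. The threshold value, the reprompt wording, the human handoff, and the `next_response` function are all assumptions made for illustration; they are not part of Voiceflow or any specific NLU product.

```python
# Hypothetical values: tune the threshold against your own NLU model.
CONFIDENCE_THRESHOLD = 0.6

# Reprompts escalate from a simple retry to explicit guidance.
REPROMPTS = [
    "Sorry, I didn't quite get that. Could you rephrase?",
    "I can help with refunds or general questions. Which do you need?",
]
HANDOFF = "Let me connect you with a teammate who can help."


def next_response(intent, confidence, failed_attempts):
    """Return (response_text, updated_failed_attempts).

    intent: predicted intent name, or None if nothing matched.
    confidence: model confidence for that intent, between 0 and 1.
    failed_attempts: consecutive turns the agent has failed to understand.
    """
    # Happy path: a confident match resets the failure counter.
    if intent is not None and confidence >= CONFIDENCE_THRESHOLD:
        return f"Routing to the '{intent}' flow.", 0
    # Fallback path: reprompt with more guidance each time.
    if failed_attempts < len(REPROMPTS):
        return REPROMPTS[failed_attempts], failed_attempts + 1
    # Out of reprompts: stop looping and escalate.
    return HANDOFF, failed_attempts


if __name__ == "__main__":
    attempts = 0
    for intent, confidence in [(None, 0.1), ("faq", 0.3), ("refund", 0.9)]:
        text, attempts = next_response(intent, confidence, attempts)
        print(text)
```

Capping the number of reprompts keeps users from getting stuck in a loop of identical fallback messages, which also helps with the fallback and repeat-response metrics discussed later.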

Stage three: BAU (business as usual) monitoring

Once the design is live, it’s up to the designers to monitor how users interact with the agent, which will help them find weak points and understand areas of friction.

During this stage, each conversation is an opportunity to optimize for increased conversion or ROI. The team works iteratively, updating responses or refining conversation flows to address feedback before rolling out changes gradually. With each new insight, the cycle begins again, driving improvements that may inspire entirely different journeys.

The dialog and response designers will look to refine:

- Flows: Streamline paths, remove unused branches, add missing steps. Each iteration should move users through the journey more effortlessly.

- Responses: Rewrite responses based on accumulated knowledge and user feedback. The goal here is to keep the AI consistent and helpful.

Meanwhile, the NLU designer will look to refine:

- Understanding: Expand the agent’s comprehension through model retraining, adding new utterances, intents, and entities to reduce points of confusion or frustration.

- New opportunities: Sometimes BAU monitoring uncovers gaps that inspire entirely new experiences. Teams work with stakeholders on shaping requirements to design new solutions.

Measuring your assistant’s effectiveness

Measuring performance helps determine what’s working and what’s not. For conversational AI, cross-functional teams monitor metrics in natural language understanding, dialog design, and response quality to pinpoint weaknesses across components and interactions. By scrutinizing confidence scores, turns to complete, and fallback frequency, a team can address pain points piece by piece.

How to measure NLU design

Teams measure intent coverage, entity accuracy, confidence, and other NLP metrics to determine how to improve the AI's language comprehension. Here’s what we recommend looking at:

- Intent coverage: % of user inputs mapped to a known intent

- Entity accuracy: % of entities correctly extracted from user inputs

- Confidence: Level of confidence the model has in its predictions (higher is better)

- Standard NLP metrics: F1 score, accuracy, precision, recall, false positive rate, false negative rate
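As a rough illustration, the metrics above could be computed from a labeled evaluation set along these lines. The record format (true intent, predicted intent, confidence) and the sample data are hypothetical; a real NLU platform will export results in its own shape.

```python
# Hypothetical evaluation records: (true_intent, predicted_intent, confidence).
# A predicted intent of None means the input matched no known intent.
RECORDS = [
    ("refund", "refund", 0.92),
    ("refund", "faq", 0.55),
    ("faq", "faq", 0.81),
    ("faq", None, 0.20),
    ("refund", "refund", 0.88),
]


def intent_coverage(records):
    """Share of user inputs that were mapped to any known intent."""
    matched = sum(1 for _, predicted, _ in records if predicted is not None)
    return matched / len(records)


def precision_recall_f1(records, intent):
    """Precision, recall, and F1 for a single intent."""
    tp = sum(1 for t, p, _ in records if t == intent and p == intent)
    fp = sum(1 for t, p, _ in records if t != intent and p == intent)
    fn = sum(1 for t, p, _ in records if t == intent and p != intent)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mean_confidence(records):
    """Average confidence across all predictions."""
    return sum(c for _, _, c in records) / len(records)


if __name__ == "__main__":
    print("coverage:", intent_coverage(RECORDS))
    print("refund P/R/F1:", precision_recall_f1(RECORDS, "refund"))
    print("mean confidence:", mean_confidence(RECORDS))
```

Looking at precision and recall per intent, rather than one overall accuracy number, makes it easier to see which intents are being confused with each other.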

How to measure dialog design

Analyzing turns, dead ends, and backtracks highlights how to simplify conversation flows and smooth the user experience. Here’s what we tend to measure:

- Turns to complete: The number of turns needed for a user to complete their goal

- Dead ends: The number of times a conversation flow ends prematurely

- Backtracks: How often users have to go backwards in a flow to provide missing info

- Task completion: % of times users are able to finish a full conversation journey
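Here is a small sketch of how these dialog metrics might be aggregated from conversation logs. The `Conversation` record and its fields are assumptions for illustration, and the sketch simplifies by counting every conversation that did not complete as a dead end; your own logs may let you separate true dead ends from users who simply left.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    turns: int        # number of user turns in the conversation
    completed: bool   # whether the user finished the journey
    backtracks: int   # times the user went back to supply missing info


# Hypothetical sample logs.
LOGS = [
    Conversation(turns=4, completed=True, backtracks=0),
    Conversation(turns=7, completed=True, backtracks=2),
    Conversation(turns=3, completed=False, backtracks=1),
    Conversation(turns=5, completed=True, backtracks=0),
]


def dialog_metrics(logs):
    """Aggregate dialog design metrics over a non-empty list of logs."""
    completed = [c for c in logs if c.completed]
    avg_turns = (
        sum(c.turns for c in completed) / len(completed) if completed else None
    )
    return {
        "task_completion": len(completed) / len(logs),
        "avg_turns_to_complete": avg_turns,
        # Simplification: any unfinished conversation counts as a dead end.
        "dead_ends": len(logs) - len(completed),
        "backtracks_per_conversation": sum(c.backtracks for c in logs) / len(logs),
    }


if __name__ == "__main__":
    for name, value in dialog_metrics(LOGS).items():
        print(f"{name}: {value}")
```

Turns to complete is averaged over completed conversations only, so abandoned sessions don't make the flow look shorter than it really is.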

How to measure response design

Monitoring fallback use, repeats, length, and ratings identifies how to improve responses by rewriting or developing new content that better resonates with users. This is what we recommend measuring:

- Fallback count: How often the AI has to use a generic fallback response

- Repeat responses: Number of times the same response is used in a single conversation

- Response length: Average number of words in each AI response (aim for a natural length)

- User feedback: Ratings or written feedback on the quality, helpfulness, and effectiveness of responses
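The quantitative response metrics above can be approximated from the agent's side of a single transcript, as in the sketch below. The fallback text, the sample conversation, and the `response_metrics` function are hypothetical; user feedback is left out because it usually comes from a separate survey or rating system.

```python
from collections import Counter

# Hypothetical generic fallback message used by the agent.
FALLBACK = "Sorry, I didn't catch that."


def response_metrics(agent_responses, fallback_text=FALLBACK):
    """Compute response design metrics for one conversation."""
    if not agent_responses:
        return {"fallback_count": 0, "repeat_responses": 0, "avg_response_length": 0.0}
    counts = Counter(agent_responses)
    # Every occurrence after the first counts as a repeat.
    repeats = sum(n - 1 for n in counts.values() if n > 1)
    total_words = sum(len(r.split()) for r in agent_responses)
    return {
        "fallback_count": counts[fallback_text],
        "repeat_responses": repeats,
        "avg_response_length": total_words / len(agent_responses),
    }


if __name__ == "__main__":
    conversation = [
        "Hi! How can I help?",
        "Sorry, I didn't catch that.",
        "Sorry, I didn't catch that.",
        "Your refund is on its way.",
    ]
    print(response_metrics(conversation))
```

Matching fallbacks by exact text is the simplest approach; if your agent rotates between several fallback variants, you would want to tag fallback responses in the logs instead.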

CxD never ends, and that’s a good thing

It may go without saying, but the work of CxD is never really finished. Because the process is iterative, it continues for as long as your agent is available. By following the right steps, you can make it as painless as possible for everyone involved, most importantly your users, who just want to find the answer they’re looking for.
