Trilogy’s Atlas Core, the mother of AI agents

Customer support shouldn't feel like rocket science. But when your users are rocket scientists, the stakes change. “The users submitting tickets or querying support are smart. They use our client’s software at a high level of proficiency,” explains Ciprian, L3 Technical Support. “The challenge in offering advanced users AI support is that your agent must be knowledgeable enough to offer high-quality, contextual solutions to their issues.”

The Trilogy team also needed an AI platform that would enable them to collaborate effectively. They worked asynchronously 99% of the time and needed Voiceflow to help them deploy an AI-driven support solution they could iterate on quickly.

Here’s how they did it

- Created a core AI agent, then used it to scale: Twenty percent of the products Trilogy manages produce 80% of the support tickets. So Ciprian and the CS team opted to create what they call Atlas Core: an AI agent in Voiceflow that contains the foundational building blocks for several complex agents. Atlas Core is integrated with Trilogy’s help center, a knowledge base, and a core set of user support flows and functions. “We use that core to build the other 80% of standalone projects. This helps us scale AI agents quickly and add features to specific products while maintaining a complex core that we’re continually improving,” Ciprian says. This approach has helped the team standardize and scale projects quickly across interfaces, such as AI voice agents.

- Triaged tickets with a knowledge base: The Trilogy technical team also built a standalone ticket triaging and answering agent, called Atlas Ticket. Using the Voiceflow vector database system powered by Qdrant and Voiceflow's Knowledge Base API, the agent automatically analyzes user queries, recognizes which are L1 queries, and then answers them, drawing from a database of company-specific information like FAQs and product details. Integrating the AI agent with a knowledge base means simple queries no longer require human intervention and the LLM is less likely to hallucinate, all while helping users resolve their issues efficiently.

- Refined their knowledge base to continually improve responses: “The prompt can only get you so far. From there, it's about the data that's being fed into the LLM,” says Ciprian. “You can have the best prompt in the world, but if you have poorly written knowledge base articles, the AI agent will falter.” To make the most of their AI agent, Ciprian, Colin, and the CS team reviewed hundreds of AI customer interactions that were eventually routed to a human, then refined their knowledge base so that the LLM could better address user issues and retrieve information in future interactions.

- Built trust with hesitant customers: Many users were initially hesitant to engage with AI-driven support. To address this concern, the team chose to include the sources behind the agent’s answers (links to the website, help articles, or company information) to increase user trust in the agent’s accuracy. Eventually, most users realized their queries could be addressed by the agent.
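The triage-and-cite pattern described in the list above can be illustrated with a minimal Python sketch. It is not Trilogy's implementation and does not call Voiceflow or Qdrant: a simple word-overlap score stands in for vector search, and every name here (`Article`, `triage`, the `example.com` URLs, the `0.5` threshold) is a hypothetical chosen for illustration.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Article:
    """A knowledge base entry (hypothetical structure)."""
    title: str
    url: str
    text: str


@dataclass
class TriageResult:
    level: str                                   # "L1" = answer automatically, "escalate" = route to a human
    sources: list = field(default_factory=list)  # URLs shown to the user to build trust


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query, article):
    """Fraction of query words found in the article (stand-in for vector similarity)."""
    query_words = tokenize(query)
    if not query_words:
        return 0.0
    return len(query_words & tokenize(article.text)) / len(query_words)


def triage(query, articles, threshold=0.5):
    """Treat a query as L1 if at least one article matches well enough; otherwise escalate."""
    matches = [a for a in articles if overlap_score(query, a) >= threshold]
    if matches:
        return TriageResult("L1", [a.url for a in matches])
    return TriageResult("escalate")


# Hypothetical sample knowledge base
KNOWLEDGE_BASE = [
    Article("Reset password", "https://example.com/help/reset-password",
            "how to reset your password from the login page"),
    Article("Export data", "https://example.com/help/export",
            "export your data as csv from the reports tab"),
]

simple = triage("reset password", KNOWLEDGE_BASE)
hard = triage("server crashes during nightly batch job", KNOWLEDGE_BASE)
```

In this sketch, `simple` is classified as L1 and carries the URL of the matching article, while `hard` finds no supporting article and is escalated. A production agent would replace `overlap_score` with a real retrieval call, but the design point is the same: answer automatically only when the knowledge base supports the answer, and show the sources.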

“We no longer worry about prompts when extracting information. We improve our knowledge base—it’s doing the heavy lifting.” - Colin, VP of Customer Success at Trilogy

The impact of scaling Voiceflow across 90 products

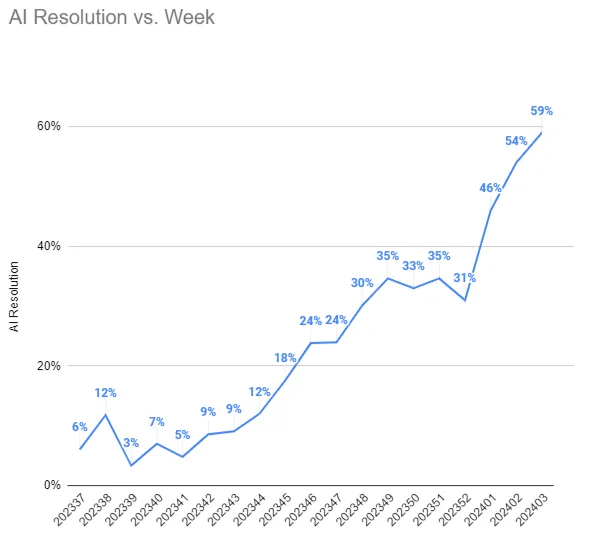

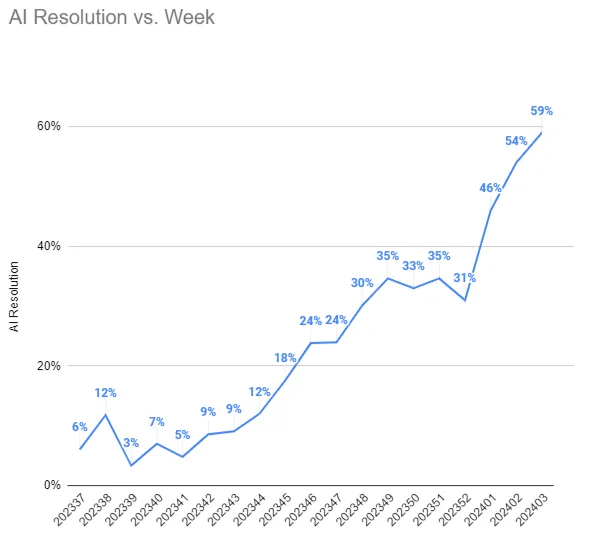

The effect on Trilogy’s support systems was immediate. Within the first week of deploying Voiceflow, they had a 35% AI resolution rate on support tickets. “The CS and technical teams looked at each other and realized Voiceflow was about to make a huge impact on our support capabilities,” Colin recalls. Since they launched AI agents, Trilogy has seen the biggest strides in four major areas.

“Of the 7,000 tickets in central support, 59% were solved completely by AI.” - Ciprian, L3 Technical Support at Trilogy

- AI resolves over half of all support tickets: After 12 weeks, 59% of tickets were resolved by AI without any human interaction. Today, 70% of tickets are resolved by AI.

- Time savings in reduced human intervention: “We used to spend 40 hours a week resolving tickets, on average 15-30 minutes per ticket. That research and effort was not scalable,” Colin says. Since launching AI support, Trilogy has reduced its support hours by 57%. Now, support representatives have more time to work on queries that require a human touch or complex research.

- Reduction in tedious research by using a knowledge base: Gone are the days of support staff needing to do endless Google searches and tedious research. Now that the CS team has built a robust knowledge base of product information, FAQs, and help articles, they can trust that the LLM will pull from trusted sources to resolve queries. “The AI agent has gotten so good that it fills in the blanks—providing more context and a better response than you’d expect,” says Ciprian.

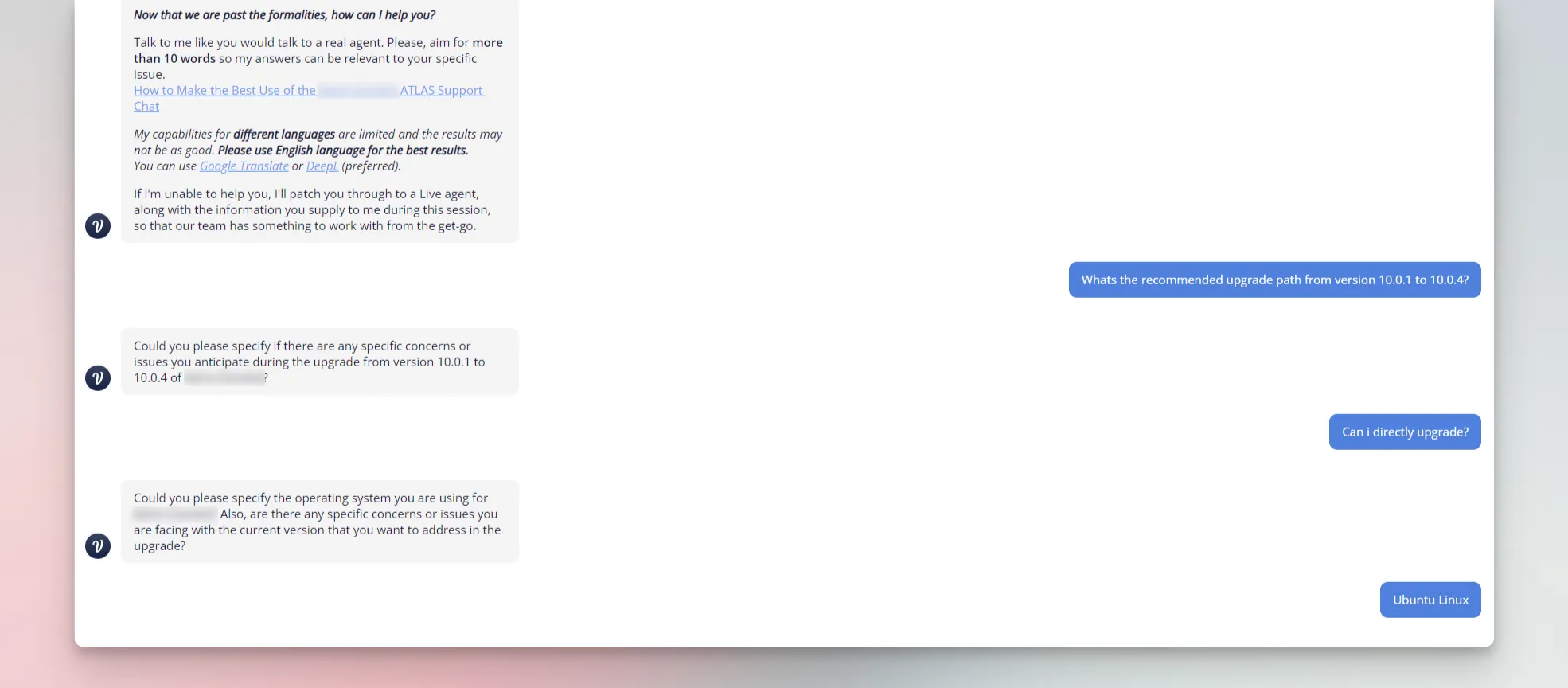

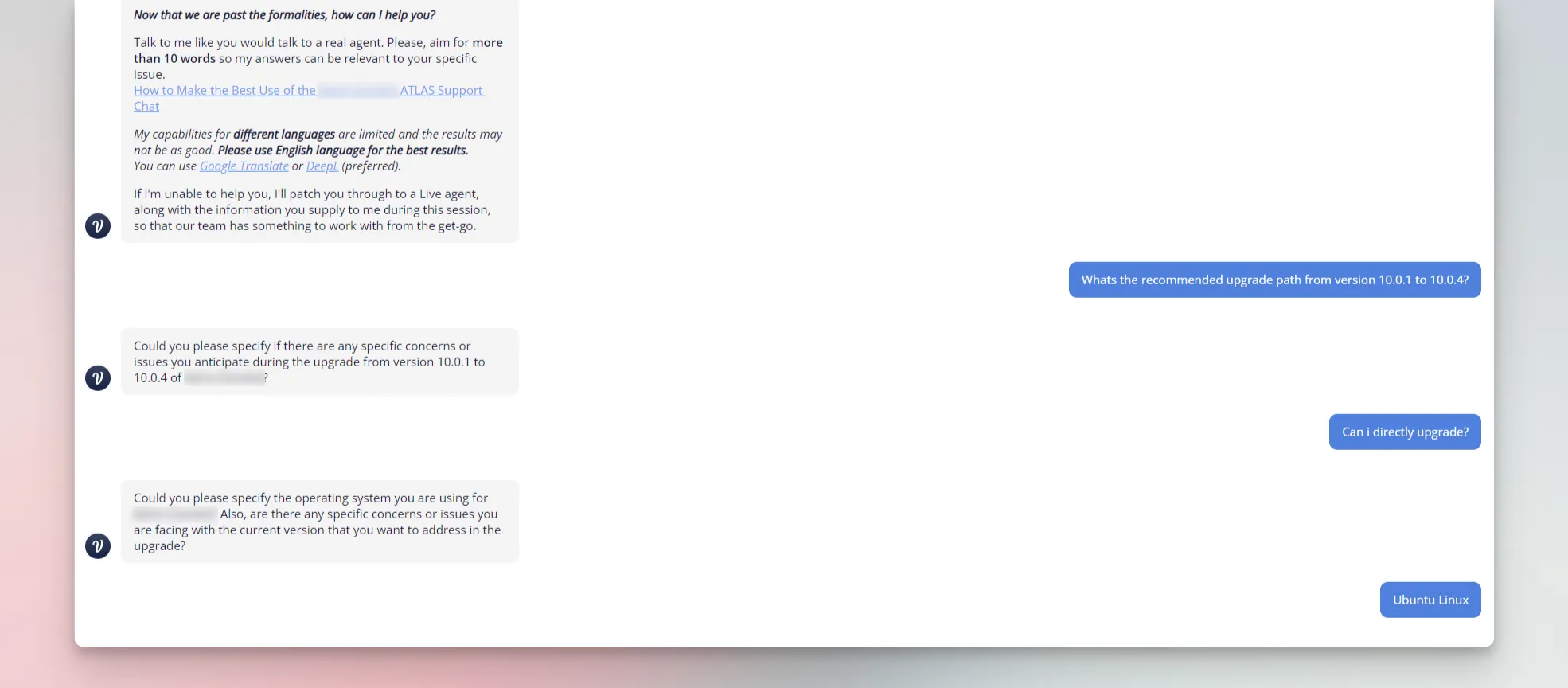

- Improving data collection to solve complex problems: Trilogy’s clients count on them to make operations work, so the pressure is high for the CS team to manage the influx of support tickets that come in when a client's product malfunctions. To help, the team expanded the support chat flow to lead users through contextual questions about user behavior, product data, or past issues, so the AI agent retrieves the data it needs to resolve complex queries. With this new flow, the AI agent collects more contextual information than ever before. And if a query does require human intervention, support representatives have access to the chat agent’s conversation log, which enables them to resolve issues quickly.
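To put the figures quoted above in concrete terms, here is a short back-of-the-envelope calculation in Python. It assumes the 59% resolution rate applies to the 7,000 tickets Ciprian mentions, and that the 57% reduction applies to the 40 weekly hours Colin cites; the article does not state either link explicitly, so treat the results as illustrative.

```python
# Figures quoted in the article
TICKETS = 7_000                # tickets in central support
AI_RESOLUTION_PERCENT = 59     # share solved completely by AI after 12 weeks
WEEKLY_HOURS_BEFORE = 40       # hours per week previously spent resolving tickets
HOURS_REDUCTION_PERCENT = 57   # reported reduction in support hours

# Assumption: the 59% applies to the 7,000-ticket figure
tickets_resolved_by_ai = TICKETS * AI_RESOLUTION_PERCENT // 100
tickets_left_for_humans = TICKETS - tickets_resolved_by_ai

# Assumption: the 57% reduction applies to the 40-hour weekly baseline
weekly_hours_after = WEEKLY_HOURS_BEFORE * (100 - HOURS_REDUCTION_PERCENT) / 100

print(f"Tickets resolved by AI: {tickets_resolved_by_ai}")
print(f"Tickets left for humans: {tickets_left_for_humans}")
print(f"Weekly support hours after AI: {weekly_hours_after:.1f}")
```

Under these assumptions, about 4,130 tickets were resolved by AI, 2,870 remained for human agents, and weekly support time dropped from 40 hours to roughly 17.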

Trilogy recommends collaboration and failing quickly for better bots

The clear visualizations of flows in the Voiceflow platform allowed the technical and CS teams to speak the same language as they designed customer experiences together. Both teams worked together on the Atlas Core to ensure that scaling AI agents to their 90 products would be seamless. “Without Voiceflow, collaborating on this many agents and projects would be near impossible. Building in Voiceflow allowed us to create a visual journey that anyone could understand, which made approvals and deployment much faster,” Ciprian explained.

Ciprian was also adamant that the Trilogy technical team learned valuable lessons when they moved quickly and learned directly from the user. “I learned more in 24 hours of a bot being live than in days of tweaking it to get things ‘perfect’. You can imagine 20% of what customers may throw at your agent, but only after you release into the wild will you discover what the user actually needs,” Ciprian says.

Colin adds, “Failing is inevitable. That’s why monitoring is so important—don’t leave your AI agents unattended. But those failures teach our teams where to build next, what to A/B test, and where our easy wins are. Fortunately, due to the guardrails we have in place, none of our failures have resulted in our agents going viral.”

What’s next for Trilogy?

Trilogy plans to use Voiceflow to continue to standardize and expand its AI capabilities. They plan to roll out Voiceflow for internal projects—for finance, marketing, and sales. For example, if Trilogy employees need to fill out an invoice, instead of searching for a form, they’ll share the information with the internal AI agent and it'll generate the required documents.

The technical team also expects another large jump in what its L2 bot will be able to do. The L1 and L2 agents will be connected, sharing data and sourcing information from several integrations and databases so they can serve users better.

Lastly, as Trilogy expands to serve more businesses’ operational needs, they’ll continue to standardize and scale AI agents across their new customers, ensuring all new businesses onboard into their AI systems right away.

As the technical and CS teams’ collaboration continues to go strong, I have no doubt they’ll continue to deliver on their AI-first vision.

Ready to transform your support channels with AI? Book a demo with our team.
