I’ve got 6 problems with the Klarna agent. Here’s how to fix them:

1. Generates verbose responses

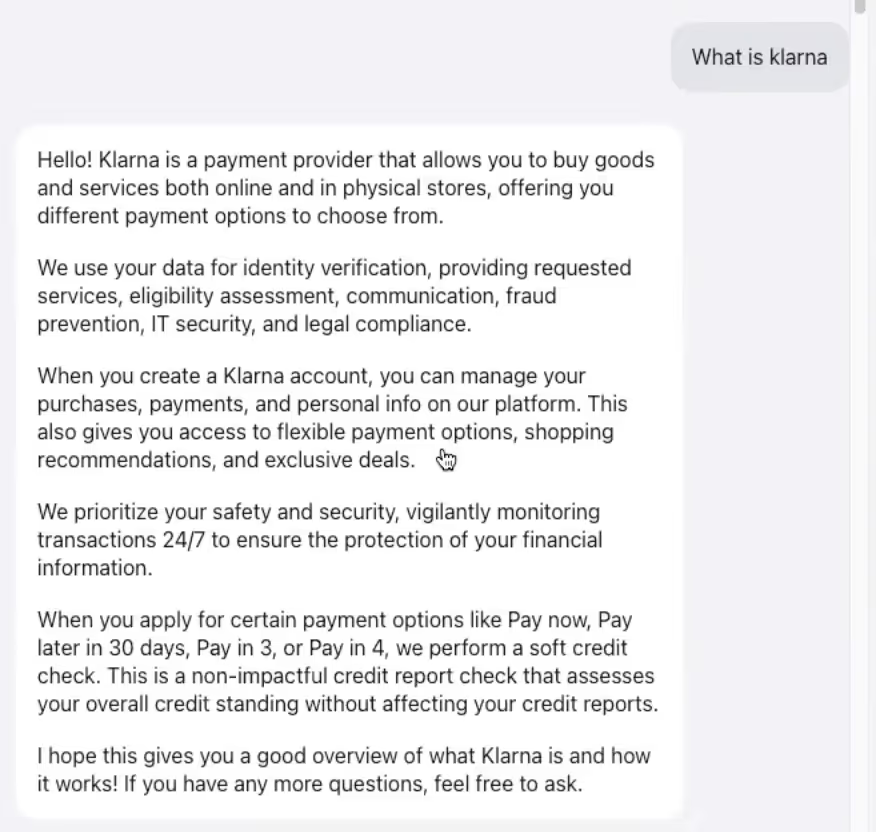

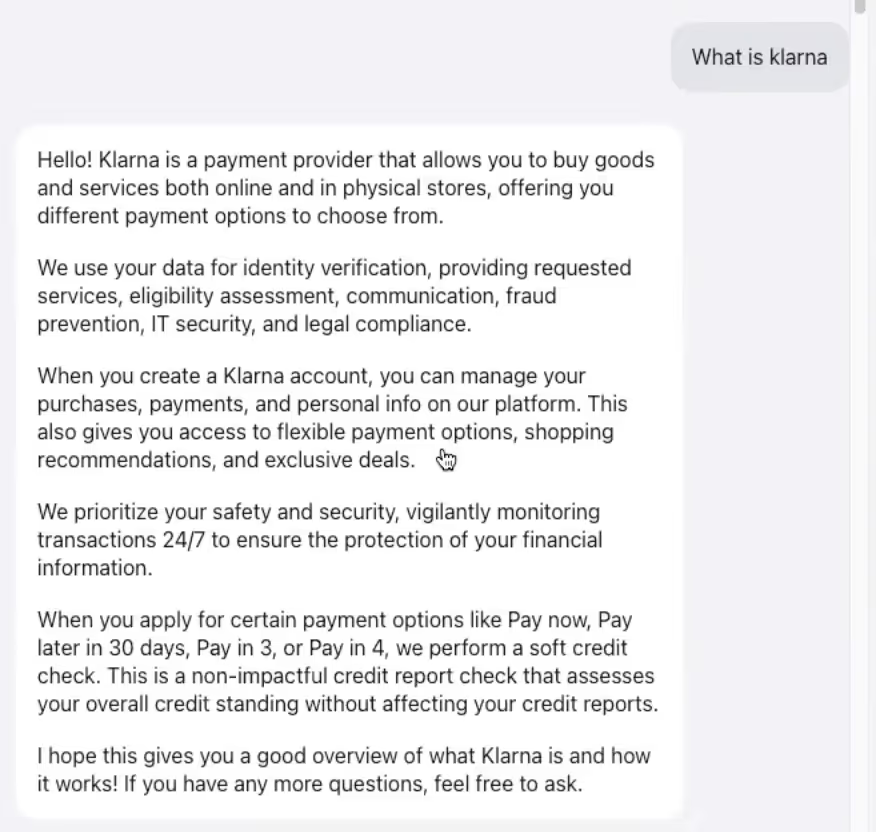

I started my tests by asking a simple question: What is Klarna? I got a response that was lengthy and filled the screen with text.

It is possible to answer this question without going into a brand story. In fact, you should be concise. To avoid taking up all this valuable real estate, explain your company in 2-3 sentences, follow with the top product features, and briefly note what sets you apart from competitors. That’s a conversation design best practice.

But this verbosity was consistent with the Klarna agent. No matter the question, from simple to complex, the response I received was always longer than necessary and took up most of the chat window. At this point, I was starting to understand Kevin’s humble adage.

Now, if you’re using an LLM paired with a knowledge base to address basic questions from users (which you should), then dealing with verbosity from your LLM just requires some clever prompting.

Here’s an example of what this prompt could look like:

As a chatbot support agent, provide a clear and concise response to the user’s question:

“{userQuestion}”

With the provided details:

“{chunks}”

Instructions:

- Summarize key points briefly, within three to four sentences.

- Use bullet points for clarity and numbered lists for step-by-step instructions.

- Start with a direct answer before using bullet points for additional details.

- Write in simple, clear language.

- Apply markdown syntax for formatting.

- Ensure accuracy with the core information given.

- Respond with "chunks" if essential details are missing.

- Include hyperlinks only when relevant, formatted as: "[Name of the page](link to the page)."

- Avoid phrases like 'information provided.'

IMPORTANT:

If ‘chunks’ do not contain the needed information to answer a question, respond with “NOANSWER”
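As a rough illustration of how a prompt like this might be wired into code, here is a minimal Python sketch. The function names (`build_prompt`, `handle_reply`), the shortened template, and the fallback message are assumptions for illustration, not part of any particular platform. Only the `{userQuestion}` and `{chunks}` placeholders and the `NOANSWER` sentinel come from the prompt above.

```python
# Hypothetical helpers: names, shortened template, and fallback wording are assumptions.
PROMPT_TEMPLATE = """As a chatbot support agent, provide a clear and concise response to the user's question:

"{userQuestion}"

With the provided details:

"{chunks}"

Instructions:
- Summarize key points briefly, within three to four sentences.
- Start with a direct answer before using bullet points for additional details.
- Write in simple, clear language.

IMPORTANT:
If 'chunks' do not contain the needed information to answer a question, respond with "NOANSWER"
"""

FALLBACK_MESSAGE = "I couldn't find that in our help center. Want me to connect you with a person?"


def build_prompt(user_question: str, chunks: list[str]) -> str:
    """Fill the template with the question and the retrieved knowledge base chunks."""
    return PROMPT_TEMPLATE.format(
        userQuestion=user_question.strip(),
        chunks="\n\n".join(chunks),
    )


def handle_reply(model_reply: str) -> str:
    """Swap the NOANSWER sentinel for a friendly fallback instead of showing it to the user."""
    if model_reply.strip().upper() == "NOANSWER":
        return FALLBACK_MESSAGE
    return model_reply.strip()
```

Checking for the sentinel in code, rather than trusting the model to apologize gracefully, keeps the "I don't know" path deterministic and easy to test.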

These instructions not only work well for an LLM, but also for static responses. Always remember, users will skim responses—so break up lengthy responses with numbered or bulleted lists where you can. You can also do things like:

- Provide buttons with common user responses

- Offer a maximum of three options for each conversation item

- If more options are necessary, group them into a “more options” button
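The three-option rule can be sketched in a few lines of Python. This is a minimal illustration, assuming buttons are plain text labels. The `layout_buttons` function and the `MAX_VISIBLE` constant are hypothetical names, and the dictionary it returns is just one way to represent an overflow menu.

```python
MAX_VISIBLE = 3  # assumption: cap taken from the "maximum of three options" guideline


def layout_buttons(options: list[str], more_label: str = "More options") -> dict:
    """Show at most MAX_VISIBLE buttons; group any overflow behind one extra button."""
    if len(options) <= MAX_VISIBLE:
        return {"buttons": list(options), "overflow": []}
    # Reserve the last visible slot for the "more options" button.
    visible = options[: MAX_VISIBLE - 1]
    return {
        "buttons": visible + [more_label],
        "overflow": options[MAX_VISIBLE - 1 :],
    }
```

In this sketch the "More options" button counts toward the limit of three, so the user never sees more than three buttons at once. If you would rather show three real options plus the overflow button, adjust the slicing accordingly.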

And remember, a conversation might not always be the best way to automate something. You can use other UI elements like forms, images, attachments, and carousels.

2. Unable to perform simple actions

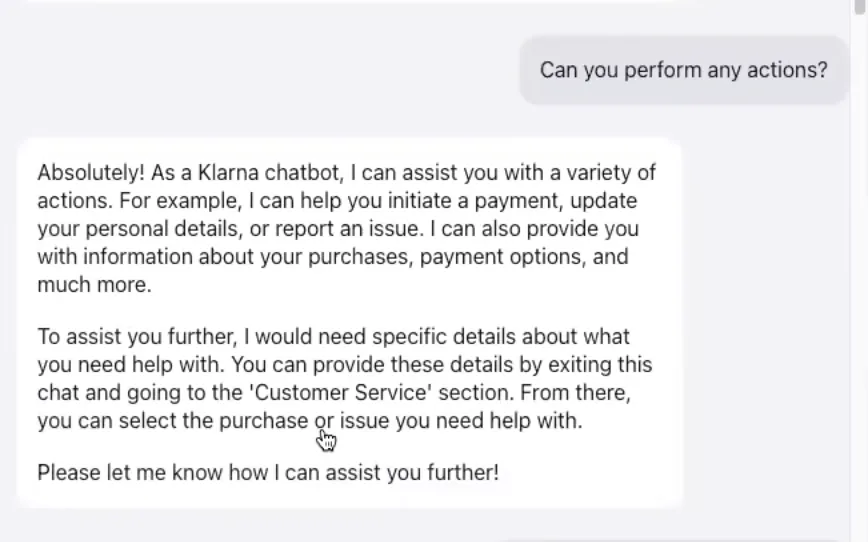

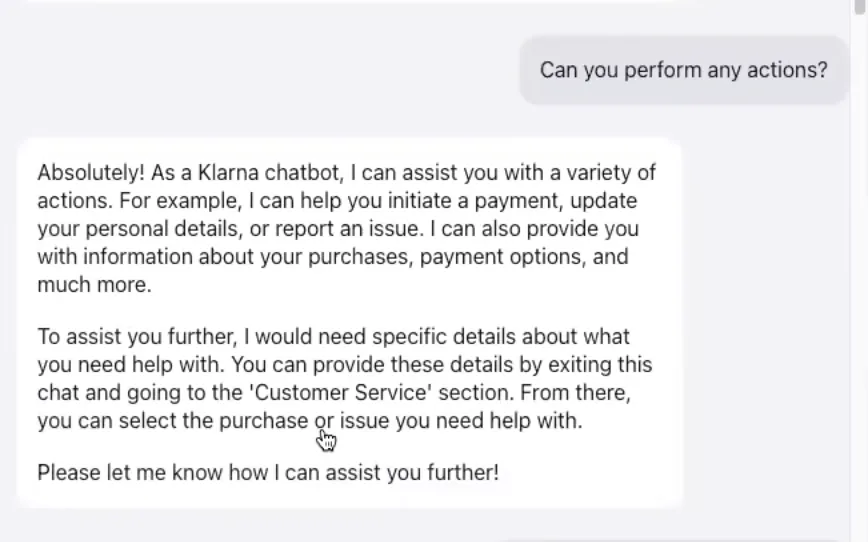

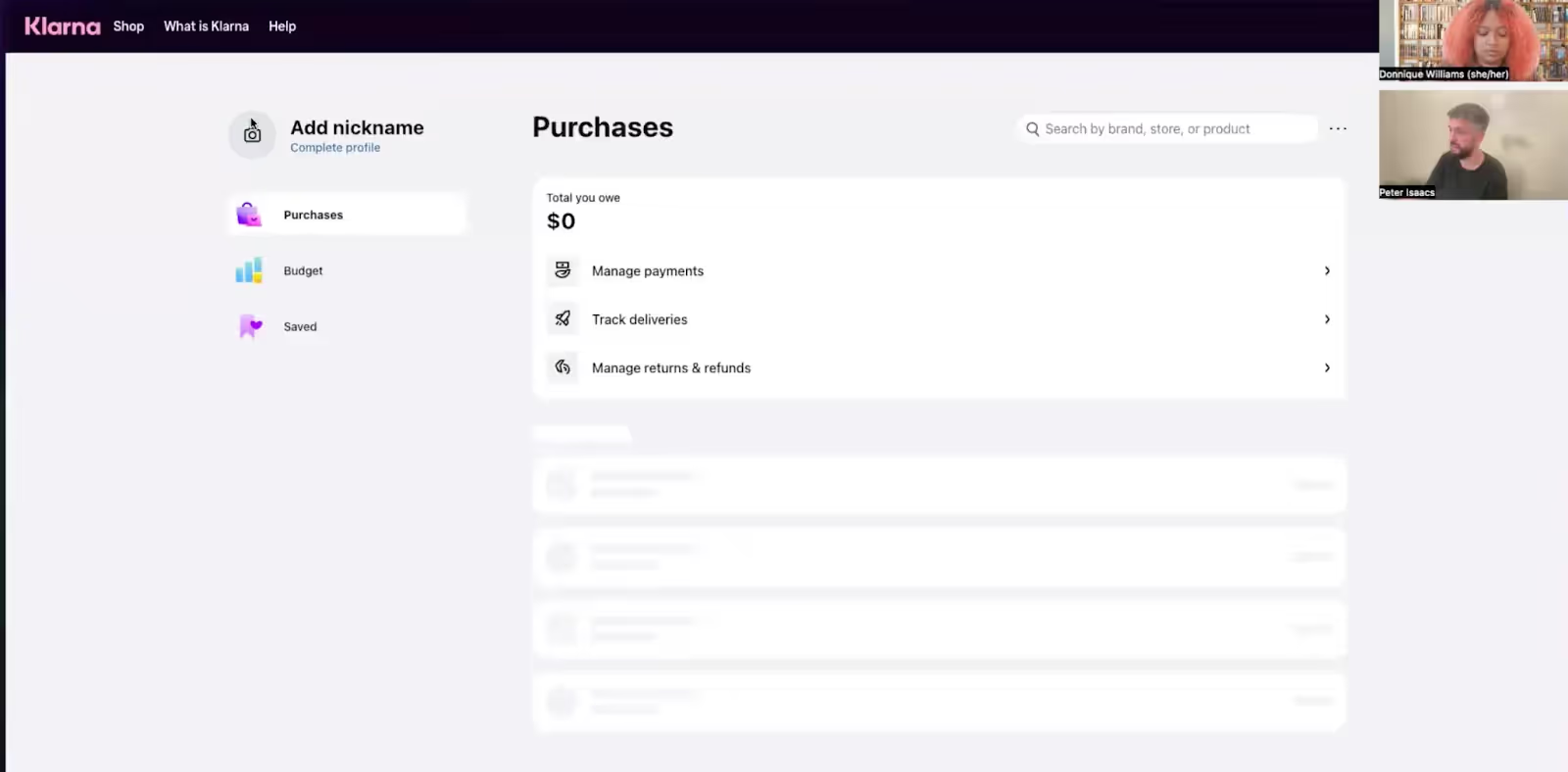

When prompted, the Klarna agent has a list of actions it says it can complete—updating personal details, changing payment information, or sharing information about my purchases. In my experience, it is unable to complete simple actions on behalf of the user.

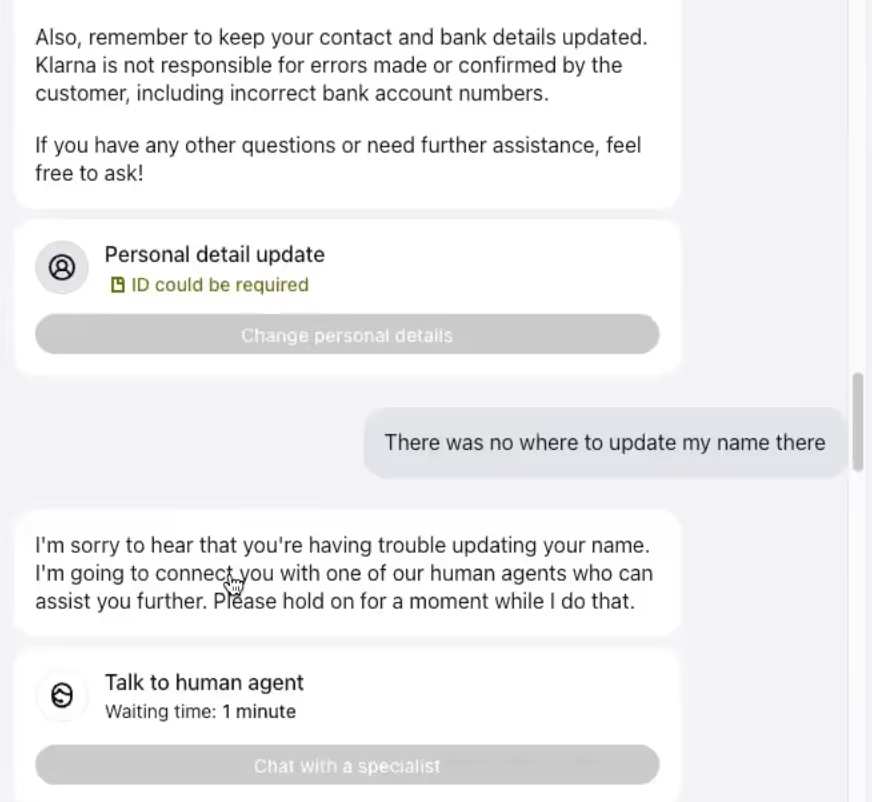

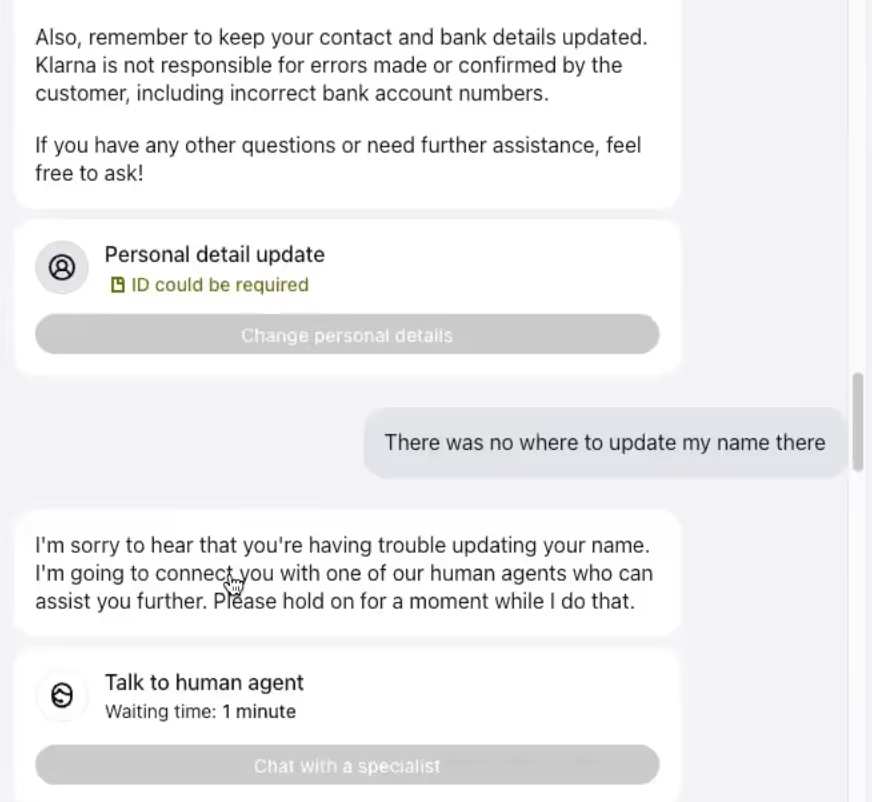

When I asked the agent to update the name on my profile, it offered me a button to update my personal information by navigating away from the chat. That link led nowhere.

When I asked the agent to tell me what past purchases I had made—an easy task, because I had none—it prompted me to leave the chat to find them myself. I asked the agent to update my payment details, and once again I was prompted to follow a list of instructions to complete the action myself, outside the chat window. When I asked the agent if I could give the details in the chat, it said no and pushed me to a live agent.

Over and over, I asked the Klarna agent to complete actions it had told me it could do, and every time it either asked me to do the action myself, pushed me to a live agent, or failed altogether.

To improve the performance right away, I would make it very clear to the user what the agent can and can’t do. Setting expectations is key. Then, for actions the agent can’t complete, I’d ensure I have clear instructions in a knowledge base that the agent can pull from. For actions the agent can complete, it’s all about setting up intents that capture user utterances and then route them into the business process you’re trying to automate.

If an agent can’t automate the thing a user is trying to do, then clearly tell users how to do it. And if the agent can automate it, do it.
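To make the split between "can automate" and "can’t automate" concrete, here is a minimal Python sketch of intent routing. Everything in it is an assumption for illustration: the intent names, the keyword lists, and the handler function are hypothetical, and a production agent would typically use a trained intent classifier or an LLM rather than keyword matching.

```python
from typing import Callable, Optional

# Hypothetical intents mapped to example phrases (a stand-in for a real classifier).
INTENT_KEYWORDS = {
    "update_name": ["change my name", "update my name"],
    "update_payment": ["payment details", "change my card"],
}


def update_name(utterance: str) -> str:
    # Placeholder for a real backend call that the agent can complete itself.
    return "Sure, what name should I put on your profile?"


# Actions the agent can automate get a handler; the rest fall back to instructions.
AUTOMATED: dict[str, Callable[[str], str]] = {"update_name": update_name}
KB_INSTRUCTIONS = {
    "update_payment": "I can't change payment details in chat, but I can walk you through it step by step.",
}


def detect_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the utterance, else None."""
    text = utterance.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return None


def route(utterance: str) -> str:
    """Automate when possible; otherwise be upfront and hand over clear instructions."""
    intent = detect_intent(utterance)
    if intent in AUTOMATED:
        return AUTOMATED[intent](utterance)
    if intent in KB_INSTRUCTIONS:
        return KB_INSTRUCTIONS[intent]
    return "I'm not able to help with that yet. Here's what I can do: update your name."
```

The point of the two dictionaries is that the agent's stated capabilities and its real capabilities come from the same place, so it never promises an action it has no handler for.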

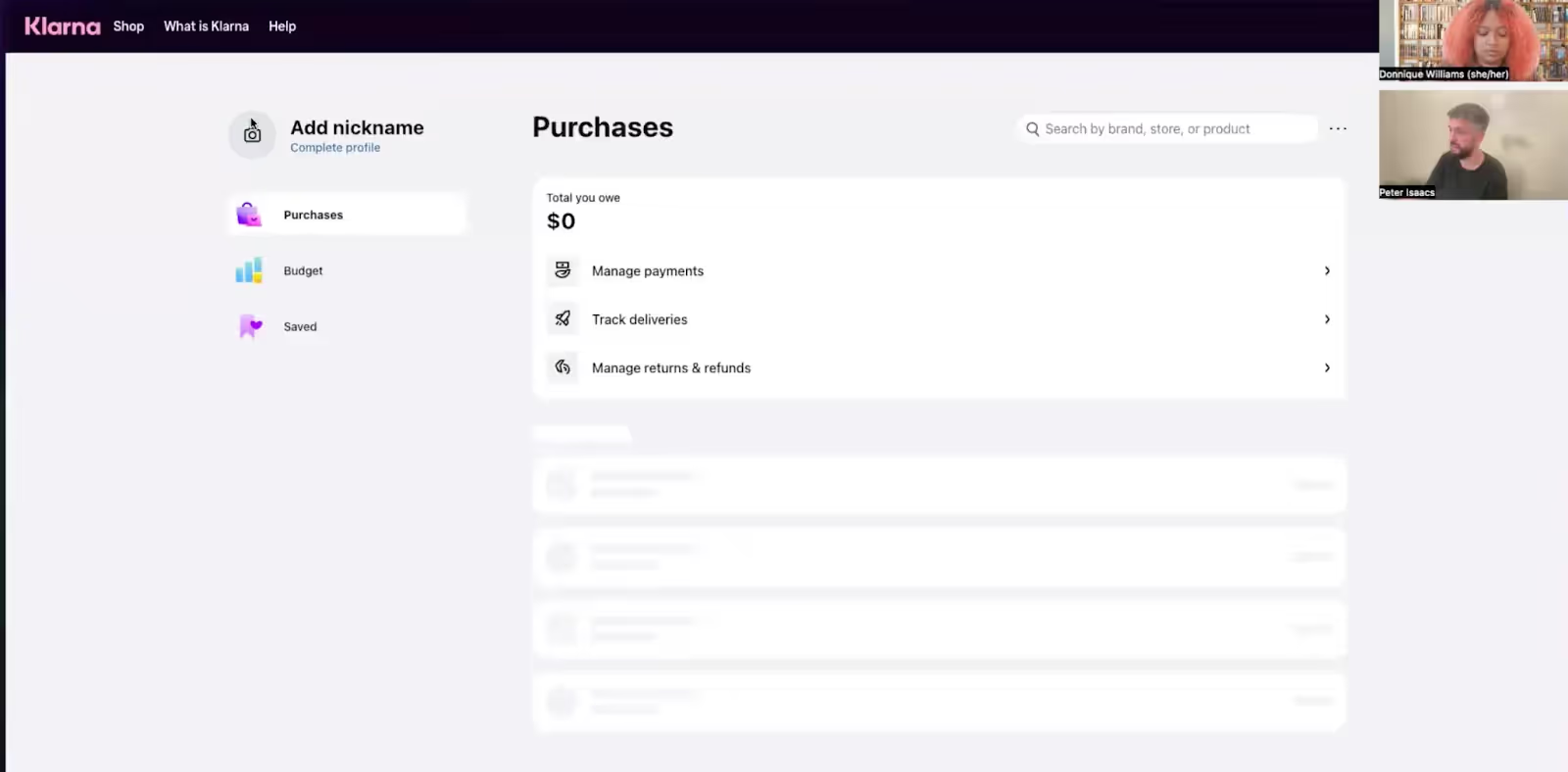

3. Asks users to exit support to complete actions

I will admit I’m not a Klarna customer—I started a blank account to run these tests—so this is not a scientific experiment. But if an agent claims it can do a collection of actions for me as a user, then I expect the agent to complete those actions. I don’t expect to be sent exploring my own profile with a laundry list of instructions.

Now, generating instructions for a customer to DIY actions is not inherently bad. But what Klarna gets wrong in this case is presenting the list of instructions in a static support window. When you select a button or go to a different page, that window disappears.

Imagine the experience from the user’s perspective. They must read those verbose instructions, exit the support window, remember those instructions well enough to navigate the site, complete the action, and navigate back to the support window. No one will remember those instructions after five clicks, let alone how to navigate back to support, which means they’ll be unable to complete the action they sought support for. The whole experience is just frustrating.

So, what’s the solution? If you must ask your user to complete the action themselves (although I’d argue there are a bunch of actions your agent can do), include a link that goes to where you want your user to go. (And make sure it works.) This is a more deterministic flow, but it ensures that the agent is more accurately supporting users.
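Here is a minimal Python sketch of what "include a link, and make sure it works" could look like in practice. The link table, the `example.com` URLs, and the function names are all placeholders for illustration and are not real Klarna URLs. The check shown is offline only and validates the shape of the link, not whether the page actually loads.

```python
from urllib.parse import urlparse

# Hypothetical deep links; the domain and paths are placeholders.
DEEP_LINKS = {
    "update_payment": "https://example.com/account/payment-methods",
    "view_purchases": "https://example.com/account/purchases",
}


def is_well_formed(url: str) -> bool:
    """Cheap offline check: the link must be absolute HTTPS with a host and a real path."""
    parts = urlparse(url)
    return parts.scheme == "https" and bool(parts.netloc) and parts.path not in ("", "/")


def link_for(action: str) -> str:
    """Return a markdown link for the action, or raise if no valid link is configured."""
    url = DEEP_LINKS.get(action)
    if url is None or not is_well_formed(url):
        raise ValueError(f"No working link configured for action: {action}")
    label = action.replace("_", " ").capitalize()
    return f"[{label}]({url})"
```

Failing loudly when a link is missing means a broken destination shows up in testing instead of in front of a customer. A fuller version would also request each URL as part of a scheduled check and flag any that stop responding.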

4. Hides the customer support chat

In my opinion, finding the customer support chat was a hassle every time. Here’s a clip of me struggling, which happened repeatedly as the agent directed me to complete actions elsewhere.

Instead, Klarna should host their customer support in the bottom corner of the page as a dynamic window that stays with the user as they navigate the site (and you should too).

Also, since they’re offering customer support already, I don’t recommend hiding it away. Adding unnecessary friction to the support process is counterintuitive. And when users are inevitably transferred to a live agent, they’ll take out those frustrations on them.

5. Employs a sickly sweet tone

Now, this may be because I am a self-proclaimed killjoy when it comes to agents’ personalities. But the inhuman, enthusiastic tone of this agent often undermines its intentions.

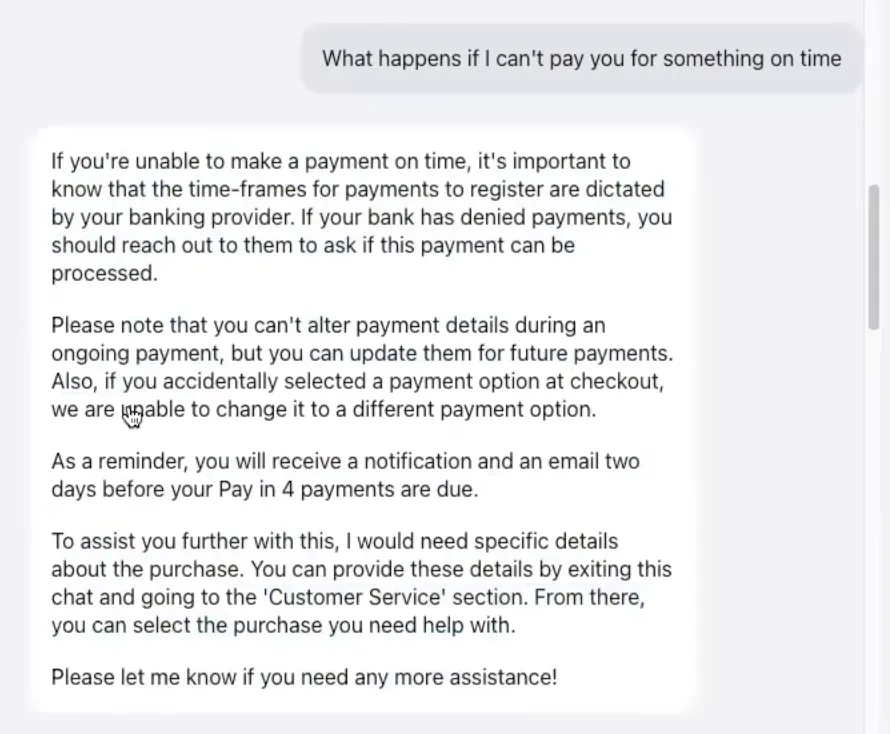

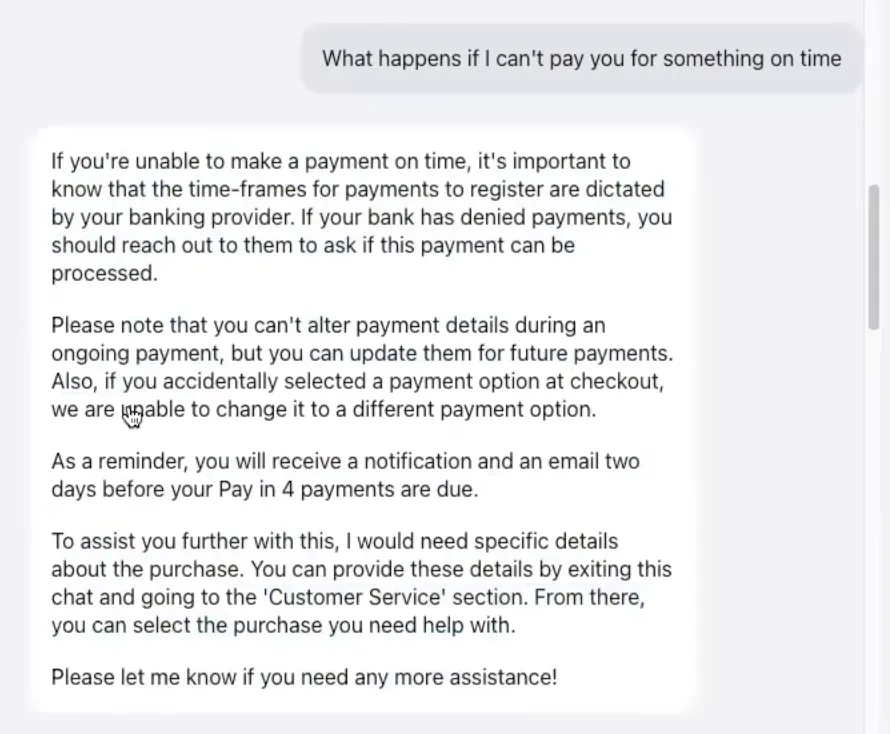

For example, I asked a common question for Klarna: What happens if I can’t pay on time? Its answers were run-of-the-mill boilerplate. That isn’t my problem with the response. My issue is that it lacked empathy: nothing in it addressed the potential hardship of a customer who needs to ask that question in the first place.

Conversation designers do a lot of things, and one of the most important is keeping the human qualities of conversation alive in AI agents. In the case of a live customer support agent or an in-person interaction, there’s usually acknowledgement and empathy in response to someone sharing their difficulty in making payments on time. I encourage designers and developers to include moments of empathy. It could be as simple as starting the response with “I’m sorry to hear you’re having trouble making payments.”

You can disagree with me. In fact, I welcome it (shoot me a message on LinkedIn). But I think moments like these really add up to a better user experience.

I’d also suggest removing static pleasantries that you drop at the end of every paragraph. That’s not how people talk.

6. Suffers from lengthy response times

After receiving an input, the Klarna agent generated responses after 15-20 seconds. That doesn’t sound like much, but imagine asking your barista for a chai latte and watching them stare at you for 20 seconds before responding—it’s bearable, but painfully awkward. And from a technical standpoint, it’s unacceptable.

I have a theory as to why the Klarna agent’s responses lag: I suspect the beta of OpenAI’s Assistants API is the culprit. For each response, the agent is accessing multiple tools at once, like knowledge retrieval, function calling, voice and tone tuning, and persistent threads. As a result, response time suffers.

If you’re planning to use the new OpenAI Assistants API, these current constraints are ones to consider. Setbacks are to be expected when an API is in beta, even with a company as innovative as OpenAI. In reality, this API is great for helping you launch an agent quickly, but not ideal for helping your agent perform actions quickly.

To avoid lengthy response times with your agent, it’s best to use a hybrid approach. Use a knowledge base for information retrieval and deterministic flows (along with LLMs) to guide users through actions.

Also, don’t use GPT-4 or Claude Opus for everything. It’s like driving a Ferrari two doors down to pick up milk. Sure, it’s cool, but totally unnecessary. Instead, you can use cheaper and faster models to give the same feeling of flexibility, while also using deterministic flows to get things done.
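One way to picture the hybrid approach is a small router that decides how each message gets handled. The sketch below is a minimal illustration under stated assumptions: the flow names, the trigger phrases, the tier labels, and the word-count threshold are all hypothetical, and a real system would likely use an intent classifier rather than string matching.

```python
from dataclasses import dataclass

# Hypothetical phrases that map to deterministic flows (no LLM needed to execute them).
FLOW_TRIGGERS = {
    "update my name": "update_name_flow",
    "change payment": "update_payment_flow",
}
COMPLEX_WORD_LIMIT = 25  # assumption: only long questions go to the larger model


@dataclass
class Route:
    handler: str  # "flow", "small_model", or "large_model"
    target: str   # flow name or model tier label


def choose_route(message: str) -> Route:
    """Prefer deterministic flows, then a fast model, and only then a large model."""
    text = message.lower()
    for phrase, flow in FLOW_TRIGGERS.items():
        if phrase in text:
            return Route("flow", flow)
    if len(text.split()) <= COMPLEX_WORD_LIMIT:
        return Route("small_model", "fast-cheap-tier")
    return Route("large_model", "frontier-tier")
```

The ordering is the design choice that matters here: actions go to a flow first, and the expensive model is the last resort rather than the default.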

You don’t need to be BFFs with Sam Altman to automate your customer support

If there’s one thing I want you to take away from this breakdown it’s this—you don’t need a special relationship with OpenAI to automate your customer support. With a knowledge base, LLMs, and an agent orchestration platform (like Voiceflow, obviously), you can consistently automate an impressive batch of your level 1 support tickets.

In fact, you can move beyond level 1 tickets. Developers at Trilogy are making it happen: their team has automated 60% of their customer support across multiple product lines in under 12 weeks. Meanwhile, our customer support agent, Tico, offers multi-channel support for 97% of tickets and completes complex actions on behalf of users. And those are real results.

I will say, for simple FAQs and level 1 inquiries, the Klarna bot excels. There is a team of incredible developers and designers who are working tirelessly to make the Klarna agent possible. And I don’t doubt that it will improve over time. This AI agent is an incredibly successful proof of concept (POC) with issues that can be fixed with testing and iteration, the most important steps to avoid a POC that doesn’t deliver results.

But given all the spectacle when that press release dropped, I expected to be wowed. Maybe next time.
