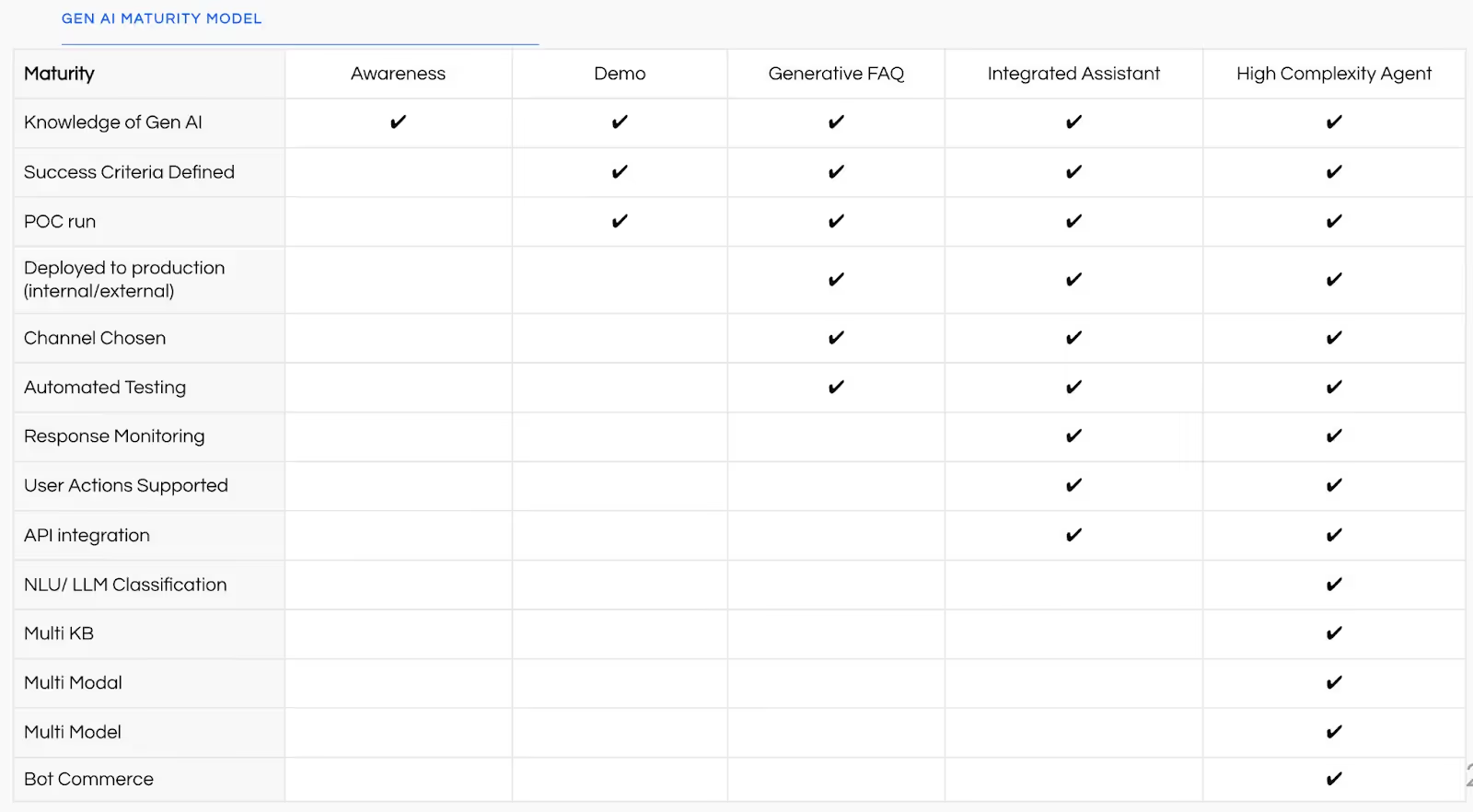

The five levels of AI maturity

As I see it, there are five levels of AI maturity. Let’s take a quick look at each, and then we’ll talk about why companies have trouble progressing from one stage to another.

Stage 1: Awareness

Teams in this stage know that generative AI tools like ChatGPT exist, but their AI knowledge stops there. Managers at this stage are probably not reading this article, so let’s move on.

Stage 2: Demo

Teams in this stage have successfully built some kind of demo GenAI application but haven’t deployed it. Typically, developers got curious and built a demo chatbot for a specific use case, but never got brave enough to test it on customers.

Stage 3: Generative FAQ

At this stage, the company has deployed a generative FAQ chatbot to production that can answer customer questions.

Stage 4: Integrated agent

Teams at this stage have deployed an agent that can help users take actions and complete multiple tasks. The agent provides user support, checks system status, and allows human escalation within its system.

Stage 5: High-complexity agent

At this stage, the team has created a complex agent that’s tailored to the customer experience and draws on multiple knowledge bases, models, and modalities.

Why most companies stall out at stage 2, the demo stage

It’s not hard to build an FAQ agent (in fact, many developers can build one in five minutes), but most companies, especially Fortune 500 and tech companies, end up stalling their GenAI agent efforts at the demo stage. Let’s figure out why.

They fall off the data cliff

FAQ bots need good data to function. Pre-existing FAQ answers and clean documents provide a strong foundation for a bot that gives customers relevant, helpful answers. Without good data, demo GenAI agents tend to fall off a cliff.

Too many companies fall off that cliff. Their data is disjointed, disorganized, and not actionable, which creates a hurdle that many companies never clear.

They can’t deploy or test with confidence

Some companies have great data but lack the resources to properly deploy and test their GenAI agent. Deploying a web chat requires a degree of expertise that some companies just can’t provide, and organizational silos slow the process down further.

There can also be unnecessary bureaucracy when developers rely on other teams to validate and test a virtual agent. Often, these teams lack the tools they need and the way forward isn’t clear. The result is a muddy process in which key testers tend to get stuck in the weeds.

Finally, some companies get cold feet before deploying an FAQ bot because they perceive security risks or simply lack confidence that they can keep it running well. In these cases, abandoning the project altogether can seem easier than having to face the unknown.

How to get to stage 3, the GenAI sweet spot

Even if most companies stall out at stage 2, that doesn’t mean they have to stay there.

If bad data is the problem, good data is the solution. Being intentional about how you format your data can completely change how your FAQ bot operates. If you’re stuck on how to improve your data, you may want to get in touch with a knowledgeable team that can help you organize and format it (like, oh I don’t know, Voiceflow, for example). You could also use a tool like a knowledge base to take your text data and turn it into a chatbot.

If you’re running into hurdles at the deployment stage, you might want to look into a pre-built, customizable widget that allows you to set up and publish your bot in a matter of minutes. You can also avoid collaboration hurdles by using a platform that lets your large language model, back end, front end, design, and product teams collaborate cross-functionally.

Finally, if apprehensions about testing and monitoring are keeping you from deploying, you may want to find a platform that provides a regression testing framework. With this in place, you’ll be able to validate answer quality easily and deploy with confidence.
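To make the idea of regression testing concrete, here is a minimal sketch of what such a check could look like. It is not any particular platform’s framework: `GoldenCase`, `run_regression`, and `fake_bot` are hypothetical names, and the "answer contains the required phrases" rule is a deliberately simple stand-in for whatever quality check you actually use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenCase:
    """One question plus the phrases a good answer must contain (assumed rule)."""
    question: str
    required_phrases: list[str]


def run_regression(answer_fn: Callable[[str], str], cases: list[GoldenCase]) -> list[str]:
    """Return the questions whose answers are missing a required phrase."""
    failures = []
    for case in cases:
        answer = answer_fn(case.question).lower()
        if not all(phrase.lower() in answer for phrase in case.required_phrases):
            failures.append(case.question)
    return failures


def fake_bot(question: str) -> str:
    """Hypothetical stand-in for a call to your deployed FAQ bot."""
    canned = {
        "What is your return policy?": "You can return any item within 30 days.",
        "Do you ship internationally?": "We currently ship to the US only.",
    }
    return canned.get(question, "Sorry, I don't know.")


# Illustrative golden set; in practice this would come from your real FAQ data.
GOLDEN_SET = [
    GoldenCase("What is your return policy?", ["30 days"]),
    GoldenCase("Do you ship internationally?", ["ship"]),
    GoldenCase("How do I reset my password?", ["reset link"]),
]

if __name__ == "__main__":
    failed = run_regression(fake_bot, GOLDEN_SET)
    print(f"{len(failed)} of {len(GOLDEN_SET)} cases failed: {failed}")
```

Running the same golden set after every change to your data or prompts shows you which answers regressed before customers see them.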

To stage 4 and beyond

Deploying a stage 3 agent to production is a huge achievement, but there’s also lots of room to grow afterward. You’ll find before long that while an FAQ bot can answer customer questions adequately, it can’t help customers take action, nor does it allow for integrations. It also can’t handle multi-turn flows, which will limit you over time.

If you can get your virtual agent to stage 4, you’ll enable it to integrate with existing systems and help users take actions. For example, in addition to answering product questions, it will also be able to help customers place and confirm orders. To get your agent to this stage, you’ll need to integrate it with existing systems, design the necessary multi-turn flows, and have a system in place to test and monitor the virtual agent’s performance.
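As a rough illustration of what a multi-turn flow with an integration can look like, here is a small sketch of the order example. Everything in it is an assumption made for illustration: `OrderFlow` is a hypothetical class, and `place_order` is a stub standing in for a call to your real order system.

```python
from dataclasses import dataclass, field


def place_order(order: dict) -> str:
    """Hypothetical stub; a real agent would call your existing order system here."""
    return f"Order confirmed: {order['quantity']} x {order['product']}."


@dataclass
class OrderFlow:
    """Tracks where the user is in the flow and what has been collected so far."""
    state: str = "ask_product"
    order: dict = field(default_factory=dict)

    def handle(self, user_message: str) -> str:
        text = user_message.strip()
        if self.state == "ask_product":
            self.order["product"] = text
            self.state = "ask_quantity"
            return f"How many units of {text} would you like?"
        if self.state == "ask_quantity":
            # Stay on this step until the user gives a usable quantity.
            if not text.isdecimal() or int(text) == 0:
                return "Please enter a whole number greater than zero."
            self.order["quantity"] = int(text)
            self.state = "confirm"
            return f"Place an order for {int(text)} x {self.order['product']}? (yes/no)"
        if self.state == "confirm":
            self.state = "done"
            if text.lower() == "yes":
                return place_order(self.order)
            return "Okay, I have cancelled the order."
        return "This conversation is finished."


if __name__ == "__main__":
    flow = OrderFlow()
    for message in ["blue widget", "two", "2", "yes"]:
        print(f"user: {message}")
        print(f"agent: {flow.handle(message)}")
```

The point of the sketch is that the agent has to remember state between turns and recover from bad input, which is exactly what a single-turn FAQ bot does not do.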

Most companies can serve customers very well by getting to stage 4, but you may have your sights set higher. If you get your virtual agent to stage 5, you’ll be able to provide a tailored customer experience. Voiceflow’s own chatbot, Tico, is an example of a stage 5 agent. It’s implemented on multiple channels, draws on many knowledge bases and models, and provides personalized answers to users. Stay tuned for future articles that dive further into how Tico works.

Building an agent this mature does require more time and resources. It can take days to build the initial version, and weeks to refine it. If your goal is to build a high-complexity agent, you’ll want to use a platform that will help you save time and avoid a tricky developer handoff. With the right tool, you’ll be able to design, build, and deploy your agent in the same place. Using a platform that has features like a knowledge base, one-click deployment, an extendable set of APIs, the right integrations, and prototyping and testing will make this process much easier.

Great GenAI agents are great at deflection

It can be easy to get consumed with up-leveling your GenAI agent. If you’re currently operating a passable FAQ bot, of course you’re thinking about what you can do to integrate it further.

My advice would be to not worry too much about what your bot can do from a technical perspective, and instead keep deflection in mind as a key metric. How well does your virtual agent serve customers so they don’t have to speak to a live agent? One of our clients at Voiceflow has achieved a 70% deflection rate just by implementing a customer support chatbot in their help center. That’s an incredible improvement and says much more about the customer experience than any other metric might.
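If you want to track deflection yourself, one common way to define it (an assumption here, since definitions vary between teams) is the share of conversations that end without being handed to a live agent. The function name and the numbers below are illustrative only and are not the client’s actual data.

```python
def deflection_rate(total_conversations: int, escalated_to_human: int) -> float:
    """Share of conversations resolved without a live agent (assumed definition)."""
    if total_conversations <= 0:
        raise ValueError("total_conversations must be positive")
    if not 0 <= escalated_to_human <= total_conversations:
        raise ValueError("escalated_to_human must be between 0 and total_conversations")
    return (total_conversations - escalated_to_human) / total_conversations


if __name__ == "__main__":
    # Illustrative numbers: 1,000 conversations, 300 of them escalated.
    rate = deflection_rate(total_conversations=1000, escalated_to_human=300)
    print(f"Deflection rate: {rate:.0%}")
```

Whatever definition you pick, keep it fixed over time so that changes in the number reflect changes in the agent rather than changes in the math.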

GenAI models will continue to mature and improve, so constantly trying to keep up might feel like running a race with no finish line in sight. If you can get your virtual agent out of the demo stage and onto your site, you’re already ahead of most companies. The rest is a matter of incremental improvement.
