Understanding your biggest design challenges

A common theme among teams using Voiceflow successfully is a strong understanding of their most complex design challenges. While these teams share many traits, consistent terminology and processes are not among them.

I’ll do my best to describe these nuanced design challenges in layman’s terms:

- Accounting for infinite scope: it’s impossible to account for every possible thing a user could ask your assistant.

- Creating contextual conversations: it’s hard to respond with contextually relevant information at all times.

Let’s take a closer look at how successful teams are tackling these two challenges in the hopes of better preparing you and your team for current and future projects.

Accounting for infinite scope

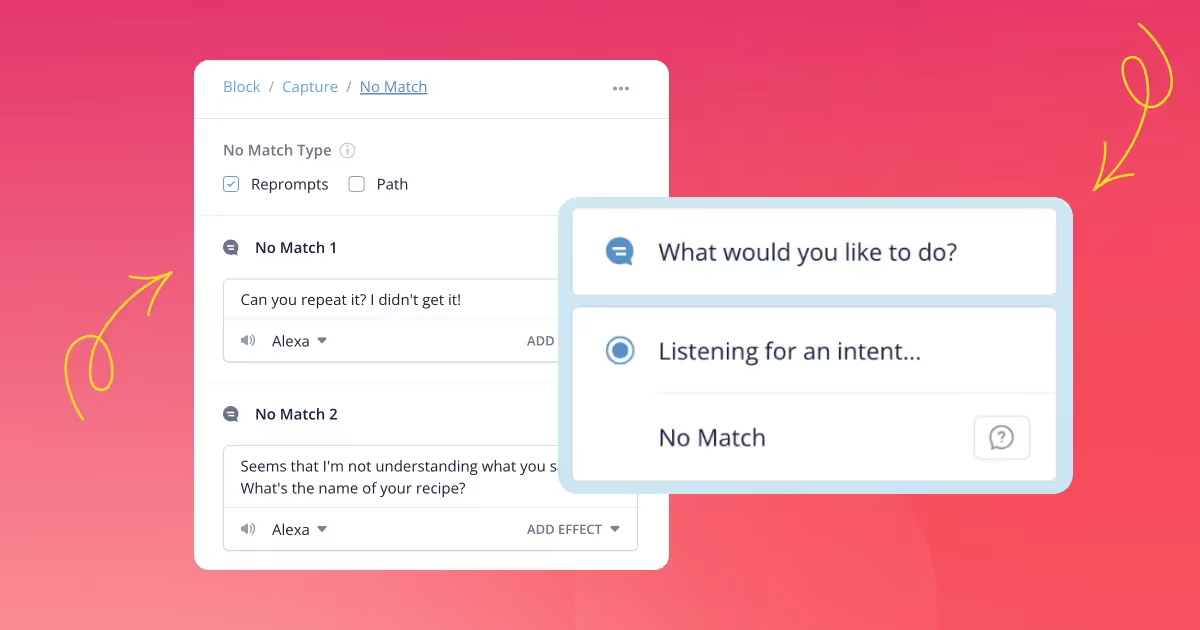

The most common challenge we see teams face when designing a conversational product today is that it’s impossible to account for every potential thing a user could say.

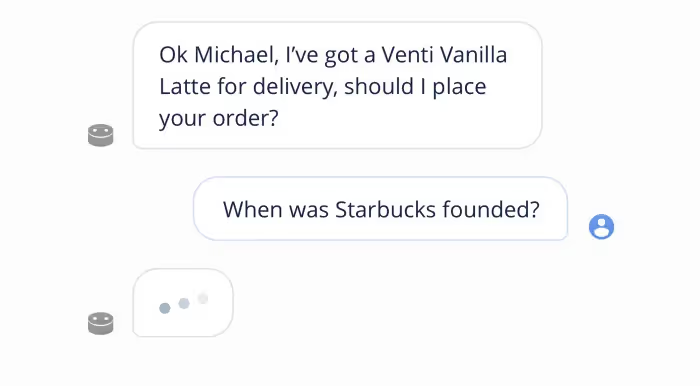

For example, if you were tasked with building the Starbucks Alexa Skill, it’s easy to determine what the user is likely to say (“I want to order xyz”), but impossible to identify everything the user could say.

In other words, building a great conversational product sometimes feels like fighting an endless battle with the unknown. As designers, we want our conversations to feel as natural and non-linear as possible. At the same time, we must recognize that there needs to be a limit to what our assistant can do, given the current technical realities of Conversational AI. Cramming additional intents into our interaction model isn’t necessarily the solution, so what is?

Unlike in visual design, we can’t bind users to the physical X and Y dimensions of their device’s viewport. Instead, we need to create our own ‘invisible viewport’ that acts as the scope of our conversation.
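One way to picture the ‘invisible viewport’ in code is a router that recognizes only a fixed set of intents and sends everything else to a fallback that steers the user back into scope. This is a minimal Python sketch, not Voiceflow’s implementation. The intent names and fallback wording are hypothetical.

```python
# Hypothetical intents that define this assistant's "invisible viewport".
IN_SCOPE_INTENTS = {"order_drink", "check_order_status", "cancel_order"}

# Hypothetical fallback wording that reminds the user what is in scope.
FALLBACK_PROMPT = (
    "Sorry, I can't help with that yet. I can order a drink, "
    "check on an order, or cancel an order. What would you like to do?"
)


def route(intent: str) -> str:
    """Return the handler name for an in-scope intent, otherwise 'fallback'."""
    if intent in IN_SCOPE_INTENTS:
        return intent
    return "fallback"


def respond(intent: str) -> str:
    """Produce a placeholder response; out-of-scope requests get the fallback prompt."""
    handler = route(intent)
    if handler == "fallback":
        return FALLBACK_PROMPT
    return f"Handling {handler}."


print(route("order_drink"))  # order_drink
print(route("tell_a_joke"))  # fallback
```

With this approach, growing the set of in-scope intents becomes a deliberate decision driven by testing, rather than an attempt to anticipate everything a user might say.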

Let’s look at how forward-thinking teams are solving this problem.

1. The 80/20 rule

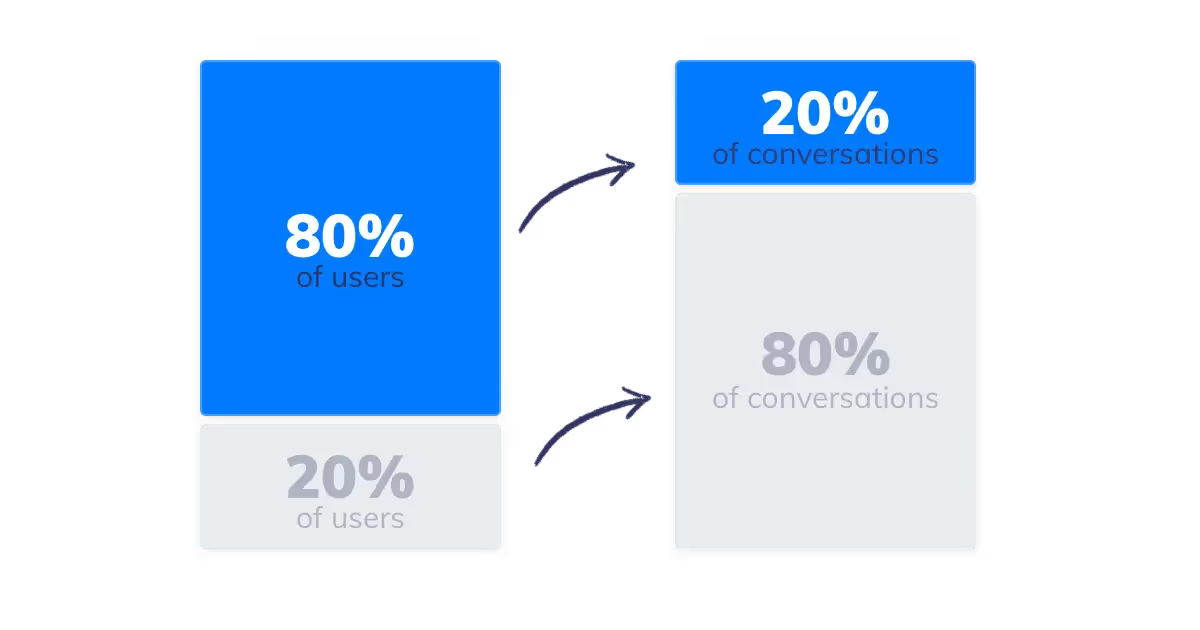

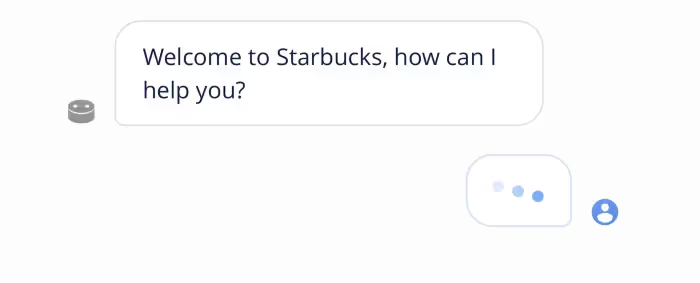

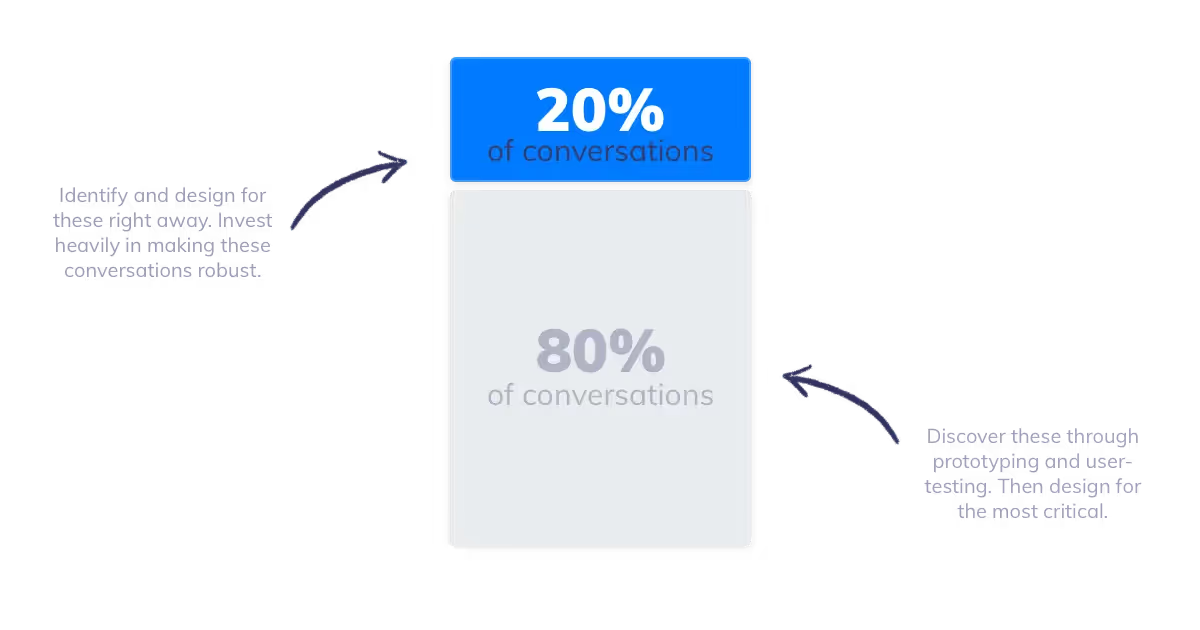

The 80/20 rule, or the Pareto principle (for the scholars out there), is a super practical framework for combating the infinite scope problem.

When designing conversations, teams use this rule to prioritize work. They first focus on the most common conversational journeys (the roughly 20% of conversations that serve about 80% of users), then discover and design for the rest through prototyping and user testing.
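If your prototype sessions are logged as the path each conversation took, a short Pareto analysis can show which paths carry most of the traffic. The sketch below assumes a hypothetical log format, with one path label per session. It picks the most frequent paths until they cover 80% of sessions.

```python
from collections import Counter


def pareto_paths(session_paths: list[str], coverage: float = 0.8) -> list[str]:
    """Return the fewest most-frequent paths that together cover `coverage` of sessions."""
    counts = Counter(session_paths)
    target = coverage * sum(counts.values())
    selected: list[str] = []
    covered = 0
    # most_common() yields (path, count) pairs from most to least frequent.
    for path, count in counts.most_common():
        if covered >= target:
            break
        selected.append(path)
        covered += count
    return selected


# Hypothetical log: one path label per prototype session.
sessions = (
    ["order_drink"] * 6
    + ["check_status"] * 2
    + ["cancel_order"]
    + ["store_hours"]
)
print(pareto_paths(sessions))  # ['order_drink', 'check_status']
```

The remaining paths (here, cancellations and store hours) are the long tail that further prototyping and testing can fill in.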

2. Identify your 80/20 quickly through prototyping & user-testing

Successful teams identify their 80/20 as quickly as possible. Using Voiceflow, they design and test prototypes powered by production-ready code. They get prototypes into the hands of stakeholders and users early and often, track user sessions, and iterate on their designs constantly. This early feedback and iteration helps teams define which conversational paths will account for the bulk of traffic, so they can focus their efforts on making that 20% a great experience.

Conversely, unsuccessful teams often spend months in Microsoft Office products like Word, Excel, and Visio. They design conversations that turn out never to occur once a Voiceflow prototype has been built and shared. When these teams start user testing, they quickly understand which sections of their work to prioritize, and they often discover how much of their previous months’ work was redundant.

In short, put your designs to the test as soon as possible. Teams are continually surprised by how users interact with their prototypes, and they use these discoveries to build better conversational products.

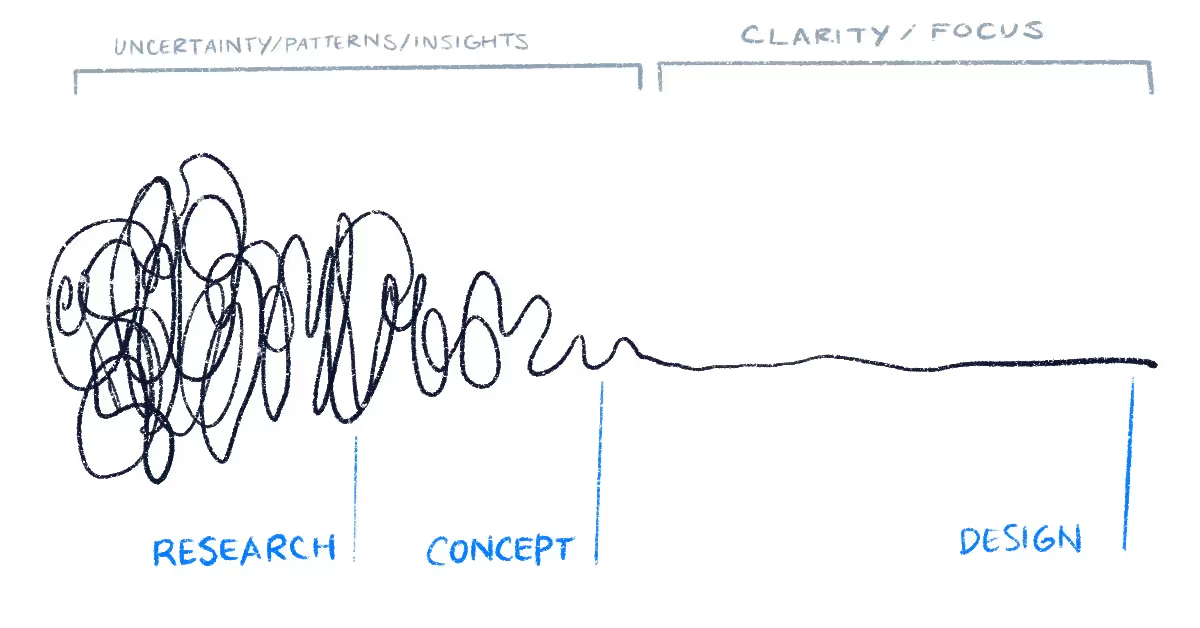

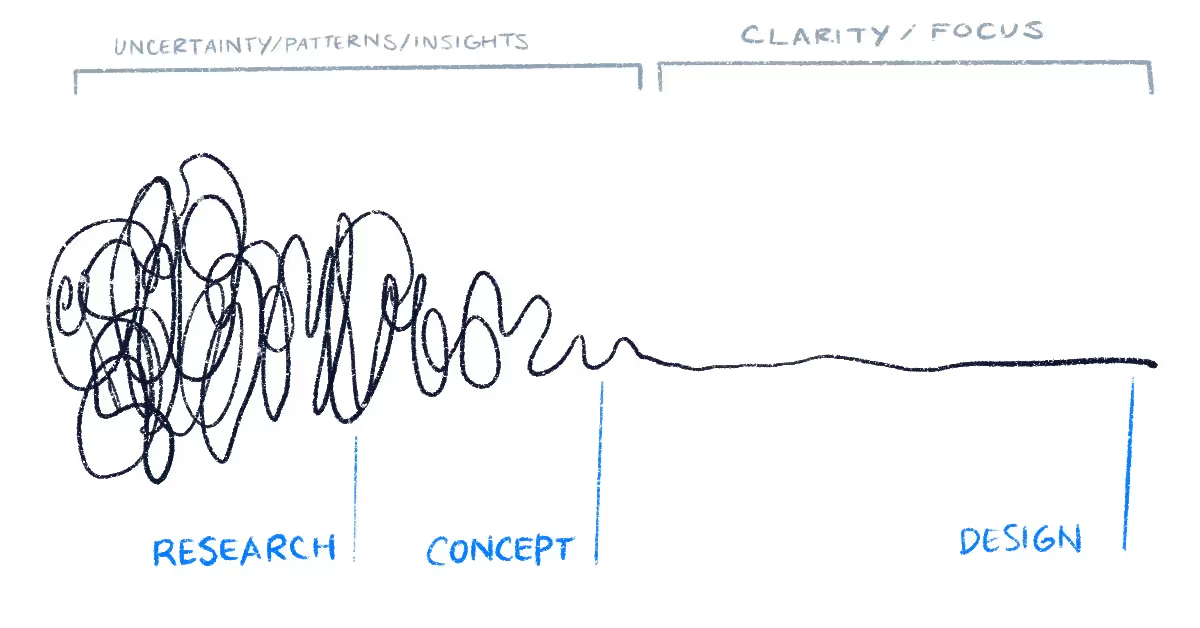

Successful teams have a design process that looks something like the one pictured above.

You’ve probably seen this image before, likely displayed in epic fashion in another design blog or on a conference slide deck. Still, I think the idea it conveys remains true for conversational product development:

The journey of researching, uncovering insights, generating creative concepts, iteration of prototypes and eventually concluding in one single designed solution. It is intended to convey the feeling of the journey. Beginning on the left with mess and uncertainty and ending on the right in a single point of focus: the design.

Successful teams embrace the uncertainty that a conversational interface poses by testing their designs early, under the scrutiny of live usage from stakeholders and unbiased users.

See a real-world example here of how AIG successfully designs, prototypes, and tests its IVR experience using Voiceflow.

Creating contextual conversations

Let’s now assume that we’ve successfully identified our 80/20 and, in doing so, accounted for all desired happy and unhappy conversational paths. In more technical terms, we could say we’ve designed the ‘perfect interaction model’. Stopping here would result in an adequate experience, and users’ goals would be met most of the time. However, teams are recognizing that to design a truly great conversation, the assistant must become contextually aware.

Designing contextual experiences is hard. Once again, the number of unknowns is staggering, and common Conversational AI solutions aren’t yet adept enough to solve this problem for us.

After talking to forward-thinking teams about this subject, we’ve identified three distinct types of context that designers account for (whether they know it or not). I’ve given them the unofficial names Session Context, Historical Context, and Environmental Context, defined below:

1. Session context

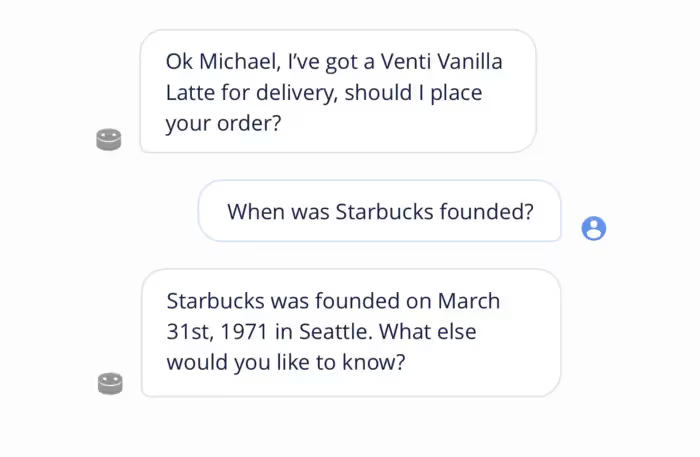

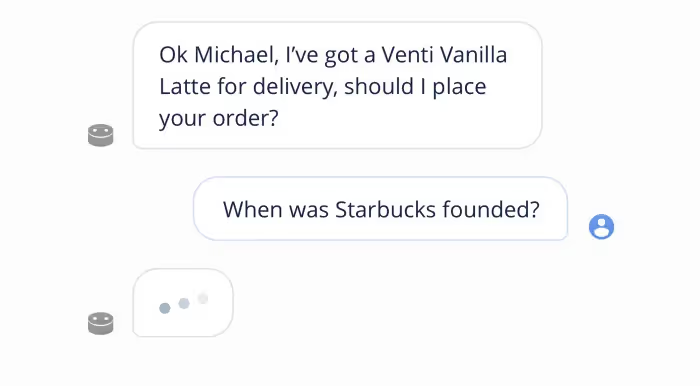

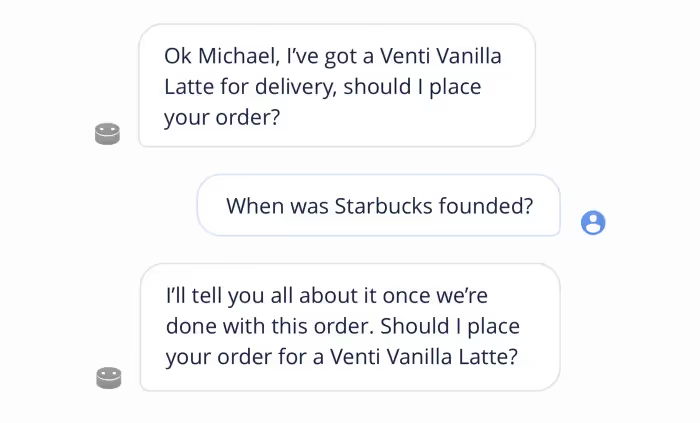

Session context refers to things that happen within a single session of your conversation that can change the way we want our system to respond. For example, let’s assume we’re confirming our user’s order, and the following occurs:

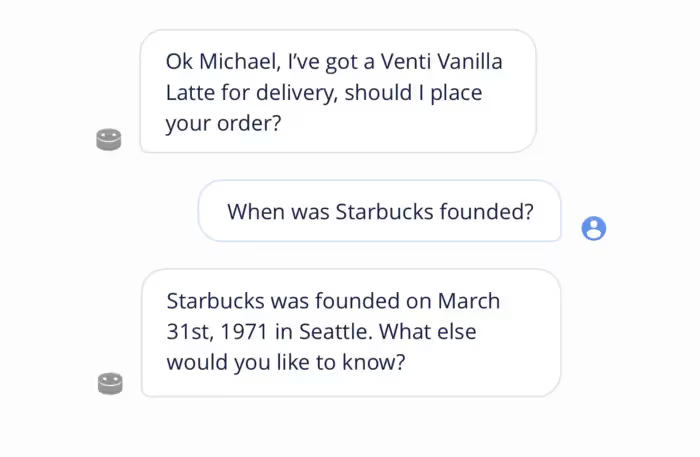

How frustrating! Our assistant was hoping for a simple yes or no response, but instead the user triggered an entirely different intent. This exact example might not occur in practice, but the concept holds: we as designers must respond to the user in a way that fits the context of the session. For example, without accounting for session context, we might have responded like this:

But if we had accounted for session context, we might have responded like this:
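To make session context concrete, here is a minimal sketch of one possible approach. While an order confirmation is pending, an unexpected intent is acknowledged and the assistant returns to the open yes/no question instead of abandoning it. The `Session` class, intent names, and responses are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Session:
    """Hypothetical state that lives only for the current session."""
    pending_order: Optional[str] = None  # order awaiting a yes/no confirmation
    intents: List[str] = field(default_factory=list)  # intents seen this session


def handle(session: Session, intent: str) -> str:
    session.intents.append(intent)
    if session.pending_order is not None:
        order = session.pending_order
        if intent == "yes":
            session.pending_order = None
            return f"Great, your {order} is ordered."
        if intent == "no":
            session.pending_order = None
            return f"Okay, I won't order the {order}."
        # Unexpected intent during a confirmation: keep the question open
        # and steer the user back to it instead of dropping the order.
        return f"Before we get to that, should I order your {order}? Please say yes or no."
    return f"Handling {intent}."


session = Session(pending_order="large latte")
print(handle(session, "store_hours"))
print(handle(session, "yes"))
```

Re-asking is only one option. A team might instead answer the side question first and then resume the confirmation, depending on what user testing shows.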

2. Historical context

Historical context refers to information from previous sessions that allow us as designers to better tailor the next experience for our users.

Using the same example, failing to use historical context might look like this during our user’s next session:

While successfully using historical context might look like this:

In this example, using historical context could be as simple as greeting the user differently based on their number of sessions, or as complex as comparing their previous orders to recommend a new beverage. Regardless of the goal, we see successful teams designing with historical context in mind.
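Both ideas from this example can be sketched in a few lines. A returning user gets a different greeting, and their order history is compared against the menu to suggest something new. This sketch treats anyone with previous orders as a returning user. The data shapes, menu, and wording are hypothetical assumptions for illustration.

```python
from collections import Counter
from typing import List


def greet(previous_orders: List[str], menu: List[str]) -> str:
    """Tailor the opening line using orders from earlier sessions (hypothetical data)."""
    if not previous_orders:
        # No history yet: treat this as a first-time user.
        return "Welcome! What can I get you?"
    favorite, _ = Counter(previous_orders).most_common(1)[0]
    tried = set(previous_orders)
    # First menu item the user has never ordered, if any.
    new_item = next((item for item in menu if item not in tried), None)
    if new_item is None:
        return f"Welcome back! Your usual {favorite}?"
    return f"Welcome back! Your usual {favorite}, or would you like to try a {new_item}?"


menu = ["latte", "mocha", "cold brew"]
print(greet([], menu))
print(greet(["latte", "latte", "mocha"], menu))
```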

3. Environmental context

Environmental context refers to external factors, beyond what the user says, that we account for when deciding how our system should respond.

For example, if a user asks to order a coffee, there are many pieces of environmental context that our system could benefit from knowing:

- Where is the user? (At home, out of the house, on their way to work?)

- What time of day is it?

- What’s the weather like? (Is it raining? Should we recommend delivery? Is it really hot out? Should we recommend an iced beverage?)

- What device did they use to make this request? (Alexa, mobile app, in-car assistant?)

We can see from these examples that environmental context has nothing to do with what the user says. Instead, it looks at the environment they said it in, along with any other external signals that could help us as designers deliver a more contextual experience.
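Environmental signals can be treated as inputs that sit alongside the user’s intent. The sketch below maps a few hypothetical signals (local hour, temperature, rain, location, and device) to response adjustments. The field names, thresholds, and adjustment labels are all assumptions, not rules from any particular platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Environment:
    """Hypothetical signals collected outside of what the user says."""
    hour: int             # local hour, 0-23
    temperature_c: float
    raining: bool
    location: str         # e.g. "home", "commuting"
    device: str           # e.g. "smart_speaker", "mobile_app", "in_car"


def adjustments(env: Environment) -> List[str]:
    """Map environmental signals to response tweaks; thresholds are illustrative."""
    tweaks: List[str] = []
    if env.raining:
        tweaks.append("offer_delivery")
    if env.temperature_c >= 28:
        tweaks.append("suggest_iced_drink")
    if env.hour < 11:
        tweaks.append("suggest_breakfast")
    if env.location == "commuting":
        tweaks.append("suggest_pickup_on_route")
    if env.device == "in_car":
        # Keep spoken responses short when the user is driving.
        tweaks.append("keep_response_short")
    return tweaks


print(adjustments(Environment(8, 30.0, True, "home", "smart_speaker")))
# ['offer_delivery', 'suggest_iced_drink', 'suggest_breakfast']
```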

Interested in adding these different types of context to your Voiceflow projects? Check out our latest blog post here.

Key takeaways

- Apply the 80/20 rule to determine focus. Make the 20% as robust as possible. Discover your 80% through prototyping and user-testing, then prioritize accordingly.

- Get your prototypes into the hands of others quickly and often. Learn from session history and iterate accordingly.

- When designing, think about how you can use session, historical and environmental context to make a truly great conversational product.

Understanding your biggest design challenges

A theme we see from teams using Voiceflow successfully is a strong understanding of their most complex design challenges. While the most successful teams share many common traits, sharing consistent terminology and processes is not one of them.

I’ll try my best to generalize these nuanced design challenges in laymen's terms:

- Accounting for infinite scope — It’s impossible to account for every possible thing a users could ask your assistant.

- Creating contextual conversations— It’s hard to respond with contextually relevant information at all times.

Let’s take a closer look at how successful teams are tackling these two challenges in the hopes of better preparing you and your team for current and future projects.

Accounting for infinite scope

The most common challenge we see teams face when designing a conversational product today is that it’s impossible to account for every potential thing a users could say.

For example, If you were tasked with building the Starbucks Alexa Skill, it’s easy to determine what the user is likely to say- “I want to order xyz”, but impossible to identify everything the user could say.

In other words, building a great conversational product sometimes feels like fighting an endless battle with the unknown. As designers, we want to make our conversations feel as natural and non-linear as possible. At the same time we also must recognize that there needs to be a limit to what our assistant can do given the current technical realities of Conversational AI. Cramming additional intents into our interaction model isn’t necessarily the solution, so what is?

Unlike visual design, we don’t have the ability to bind users to the physical X and Y dimensions of their devices viewport, and instead need to create our own ‘invisible viewport’ that acts as the scope of our conversation.

Let’s look at how forward thinking teams are solving this problem.

1. The 80/20 rule

The 80/20 rule, or the Pareto principle (for the scholars out there) is a super practical framework for combating the infinite scope problem.

When designing conversations, teams are using this rule as a way of prioritizing work, by first focusing on the most common conversational journeys (80% of users = 20% of conversations), and then through prototyping and user-testing discovering and designing for the rest.

2. Identify your 80/20 quickly through prototyping & user-testing

Successful teams identify their 80/20 as quickly as possible. Using Voiceflow they quickly design and test prototypes powered by production ready code. These successful teams get prototypes into the hands of stakeholders and users early, and often. They track users sessions and iterate their designs constantly. This early user feedback and iteration helps teams quickly define which conversational paths will account for the bulk of traffic so they can focus their efforts on making this 20% a great experience.

Conversely, unsuccessful teams often spend months using Microsoft Office products like Word, Excel and Visio to design conversations that never occur once a Voiceflow prototype has been designed and shared. When these teams start user testing, they quickly understand which sections of their work to prioritize, and often highlight the redundancies of their previous month’s work.

In short, put your designs to the test as soon as possible. Teams are continually surprised at how users are interacting with their prototypes- and from these discoveries build better conversational products.

Successful teams have a design process that looks something like this:

You’ve probably seen the above image before. Likely displayed in epic fashion inside another design blog or on a conference slide deck- but I think the following idea remains true for conversational product development:

The journey of researching, uncovering insights, generating creative concepts, iteration of prototypes and eventually concluding in one single designed solution. It is intended to convey the feeling of the journey. Beginning on the left with mess and uncertainty and ending on the right in a single point of focus: the design.

Successful teams embrace the uncertainty that a conversational interface poses by testing their designs early, under the scrutiny of live usage from stakeholders and un-bias users.

See a real-world example here on how AIG successfully designs, prototypes and test their IVR experience using Voiceflow.

Creating contextual conversations

Let’s now operate under the assumption that we’ve successfully identified our 80/20 and in doing so we’ve accounting for all desired happy and un-happy conversational paths. In more technical terms, we could say we’ve designed the ‘perfect interaction model’. Stopping here would result in an adequate experience and users goals would be met most of the time. However, we’re seeing teams understand that to design a truly great conversation- the assistant must become contextually aware.

Designing contextual experiences are hard, as once again- the number of unknowns is staggering, and common Conversational AI solutions aren’t adept enough yet to solve this problem for us.

After talking to forward thinking teams about this subject, we’ve identified three distinct types of context that we see designers accounting for (whether they know it or not). I’ve given them the unofficial names: Session Context, Historical Context and Environmental Context as defined below:

- Session context

Session context refers to things that happen within a single session of your conversation that can change the way we want our system to respond. For example, lets assume we’re confirming our users order, and the following occurs:

How frustrating! Our assistant was hoping for a simple yes, or no response but instead, the user triggered an entirely different intent. This exact example might not be one we’d find in practice, however the concept remains true- depending on the context of the session we as designers must respond to the user contextually. For example, without accounting for session context we might have responded like this:

But if we had accounted for session context we might have responded like this:

2. Historical context:

Historical context refers to information from previous sessions that allow us as designers to better tailor the next experience for our users.

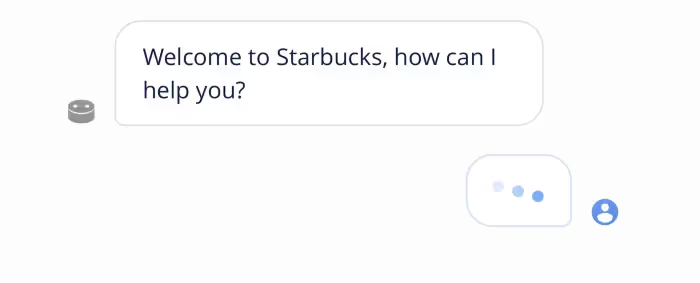

Using the same example, failing to use historical context might look like this during our users next session:

While successfully using historical context might look like this:

In this example using historical context could be as simple as greeting the user differently based on their number of sessions, or as complex as comparing their previous orders to recommend a new beverage. Regardless of the goal, we see successful teams designing with historical context in mind.

3. Environmental context

Environmental context is when we account for external factors outside of what the user says, in order to contextually decide how our system should respond.

For example, if a user asks to order a coffee, there are many pieces of environmental context that our system could benefit from knowing:

- Where is the user (At home, out of the house, on their way to work?)

- What time of day is it?

- What’s the weather outside (Is it raining- should we recommend delivery? Is it really hot out- should we recommend an iced beverage?)

- What device did they use to make this request (Alexa, mobile app, In-car assistant?)

We can see from these examples that environmental context has nothing to do with what the users says, but rather looks at the environment they said it in, along with any other extraneous indicators that could allow us as designers to deliver a more contextual experience.

Interested in adding these different types of context to your Voiceflow projects? Check out our latest blog post here.

Key takeaways

- Apply the 80/20 rule to determine focus. Make the 20% as robust as possible. Discover your 80% through prototyping and user-testing, then prioritize accordingly.

- Get your prototypes in the hands of other quickly and often. Learn from session history and iterate accordingly.

- When designing, think about how you can use session, historical and environmental context to make a truly great conversational product.