Many of these tasks were also zero-shot rather than few-shot, and few-shot prompting is the technique that provides the formatting and response stability production applications look for. Over the course of 2023, further research on prompt sensitivity showed dramatic performance differences across models from small tweaks to prompt formatting [2].

LLM Drift for Conversational AI — Intent Classification

Although ChatGPT and other LLMs changed the narrative around building AI agents, intent classification remains an important problem for routing user conversations and structuring agent actions.

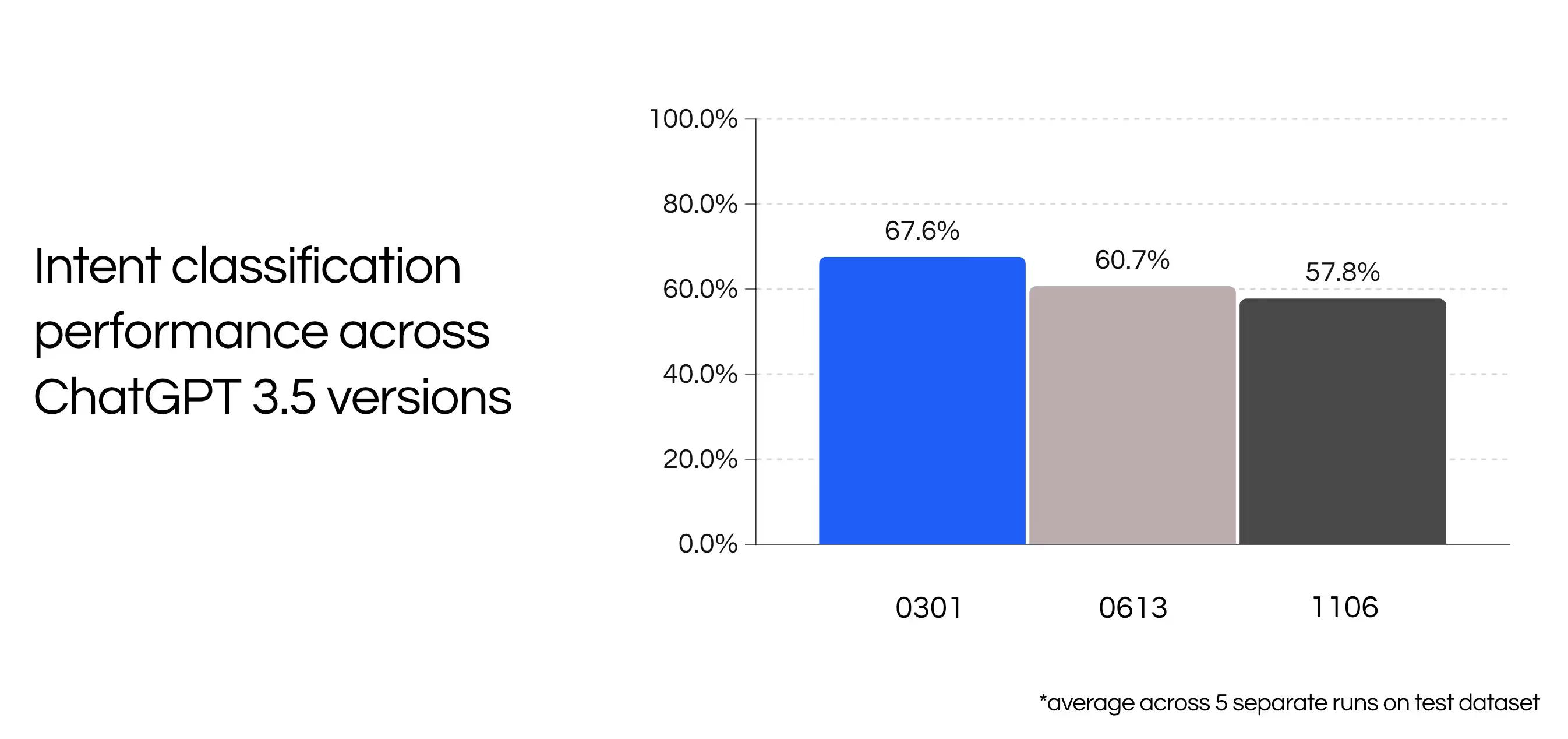

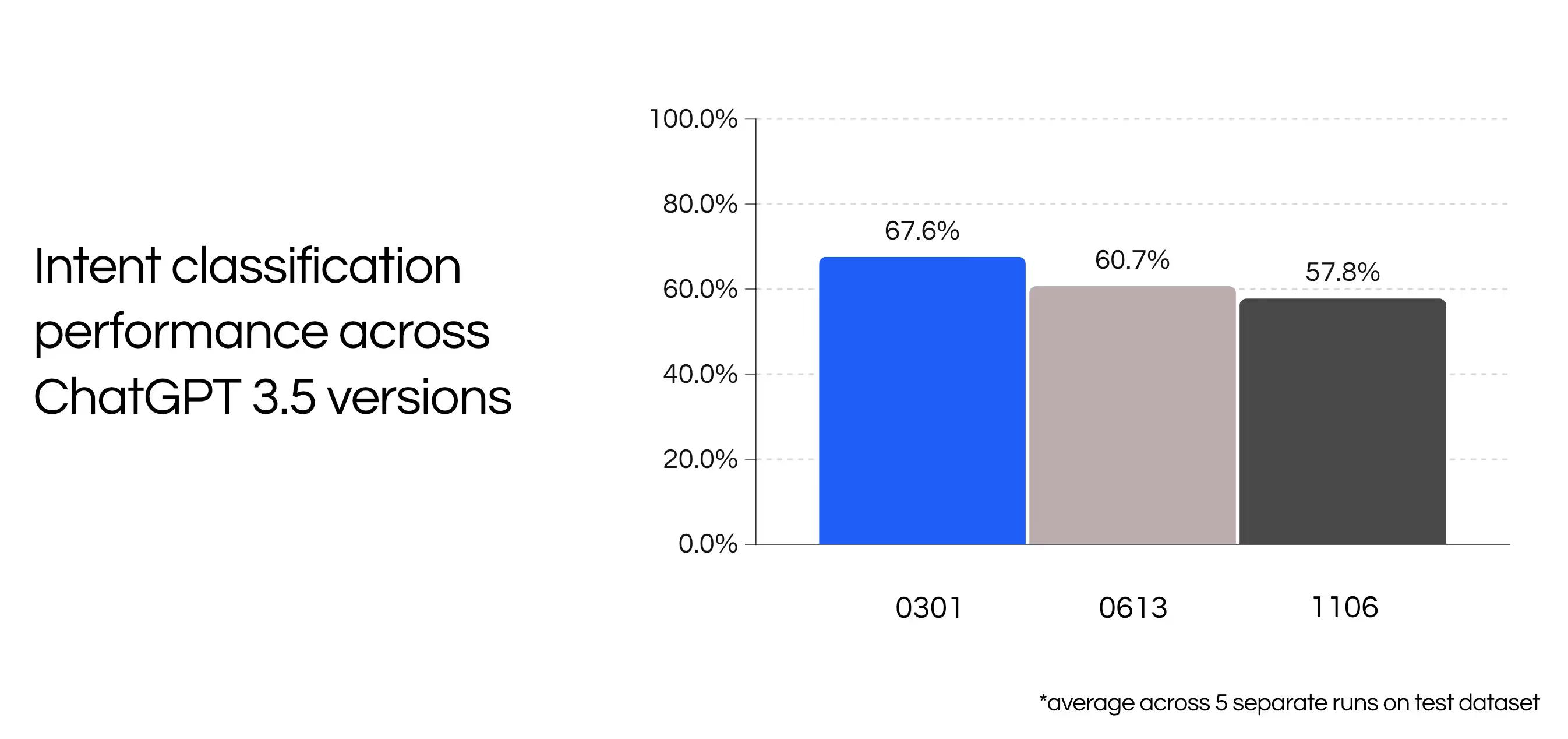

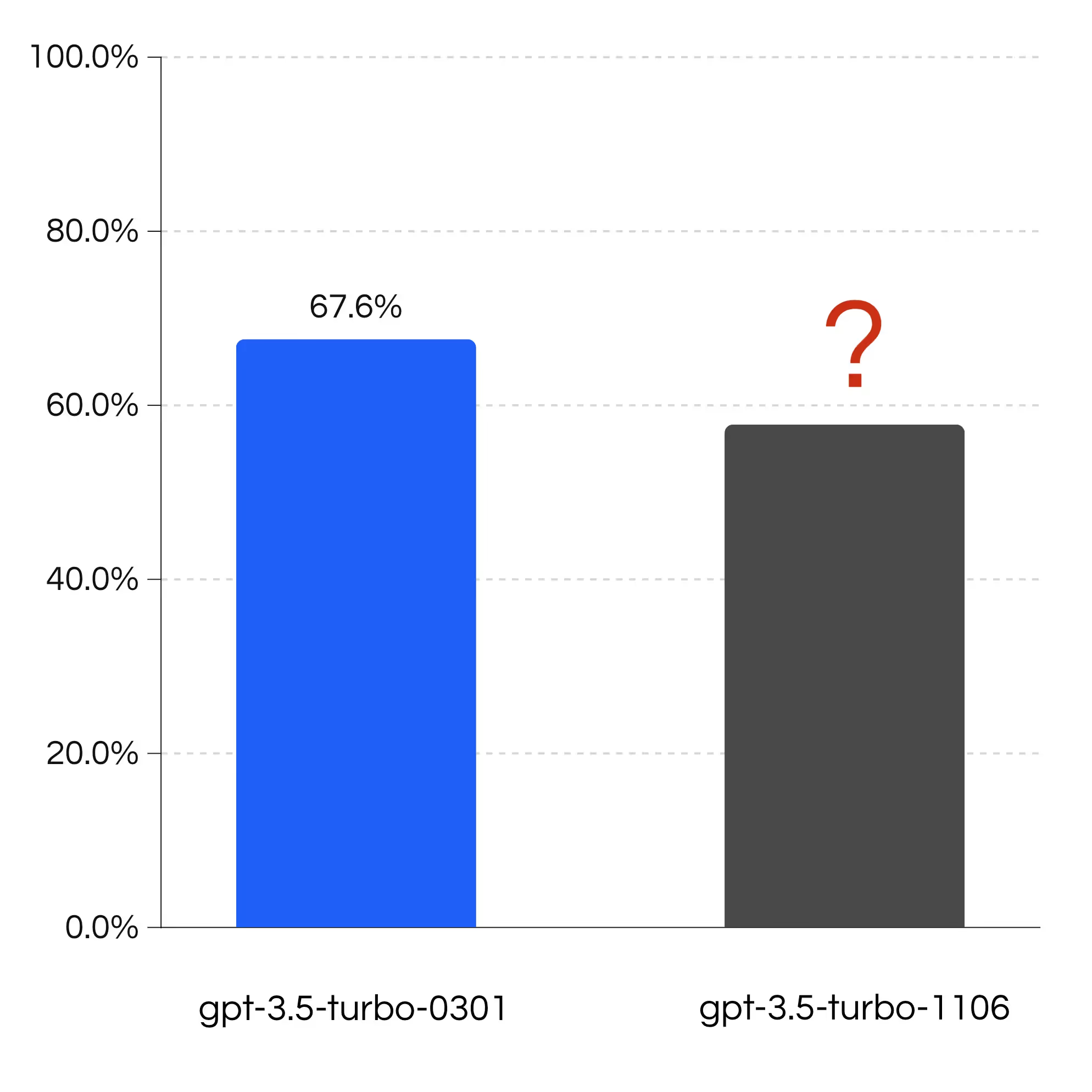

In late 2023, we were working with a customer who used LLMs for intent classification, and we noticed that gpt-3.5-turbo-0301 was approaching end of life. We upgraded the customer's base model to gpt-3.5-turbo-1106, and performance dropped dramatically.

After realizing the impact of the change (and reverting it), we ran our benchmarks retroactively and measured a severe degradation of ~10%* on the newer model.
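As a rough illustration of how a drop like this can be quantified, the sketch below scores each run by plain accuracy, then summarizes repeated runs with a mean and sample standard deviation (the note marked * reports 5 runs; with temperature 0.1, outputs can vary slightly between runs). The function names are hypothetical, and expressing the degradation in percentage points is an assumption about how the ~10% figure was computed.

```python
from statistics import mean, stdev


def accuracy(predictions, labels):
    """Fraction of examples whose predicted intent matches the gold intent."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(p == g for p, g in zip(predictions, labels))
    return correct / len(labels)


def summarize_runs(run_accuracies):
    """Mean and sample standard deviation of accuracy across repeated runs."""
    return mean(run_accuracies), stdev(run_accuracies)


def degradation(old_runs, new_runs):
    """Drop in mean accuracy, in percentage points, when moving old -> new model.

    Assumption: the degradation is an absolute difference, not a relative one.
    """
    old_mean, _ = summarize_runs(old_runs)
    new_mean, _ = summarize_runs(new_runs)
    return (old_mean - new_mean) * 100
```

Reporting the standard deviation next to the mean is what lets a drop of several points be read as a real regression rather than run-to-run noise.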

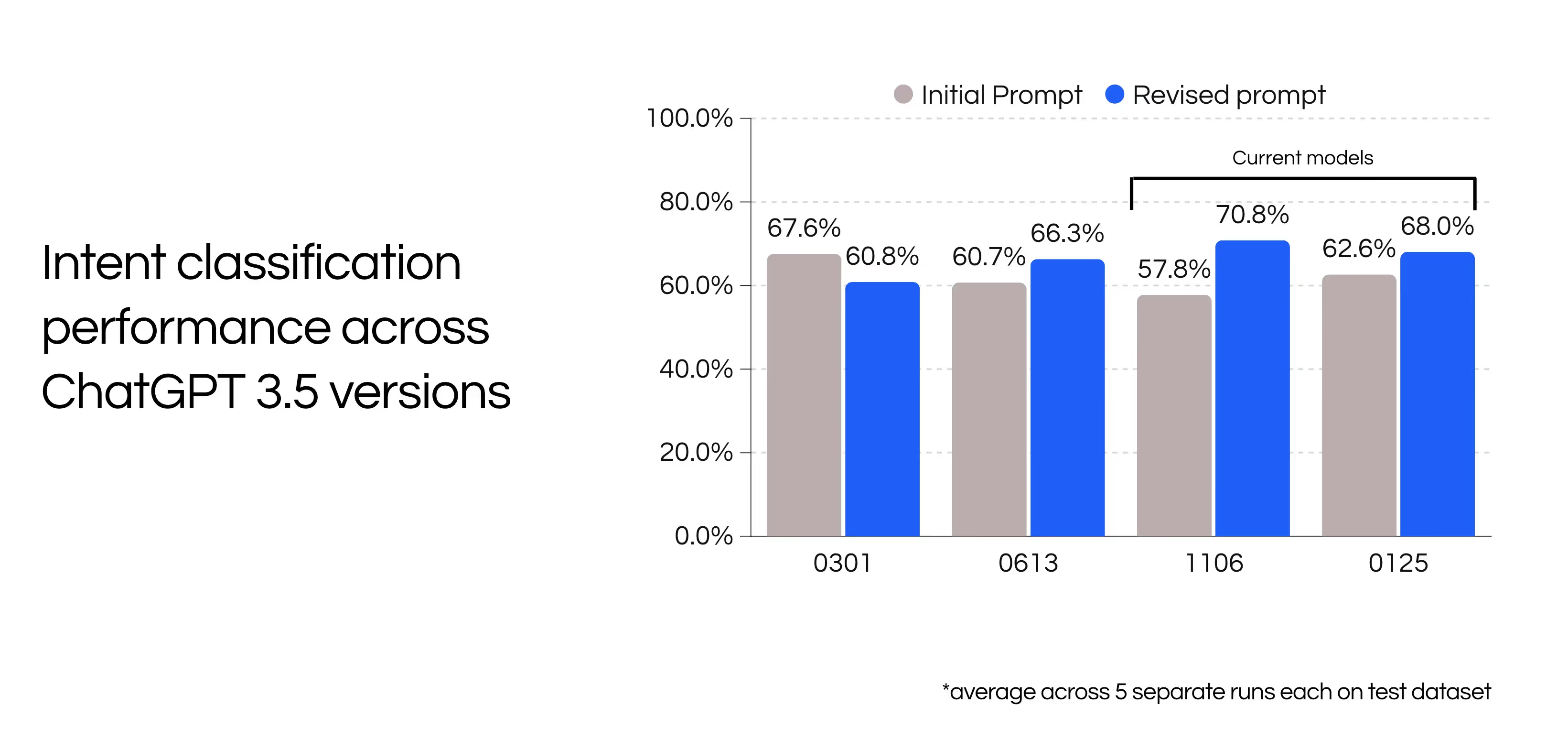

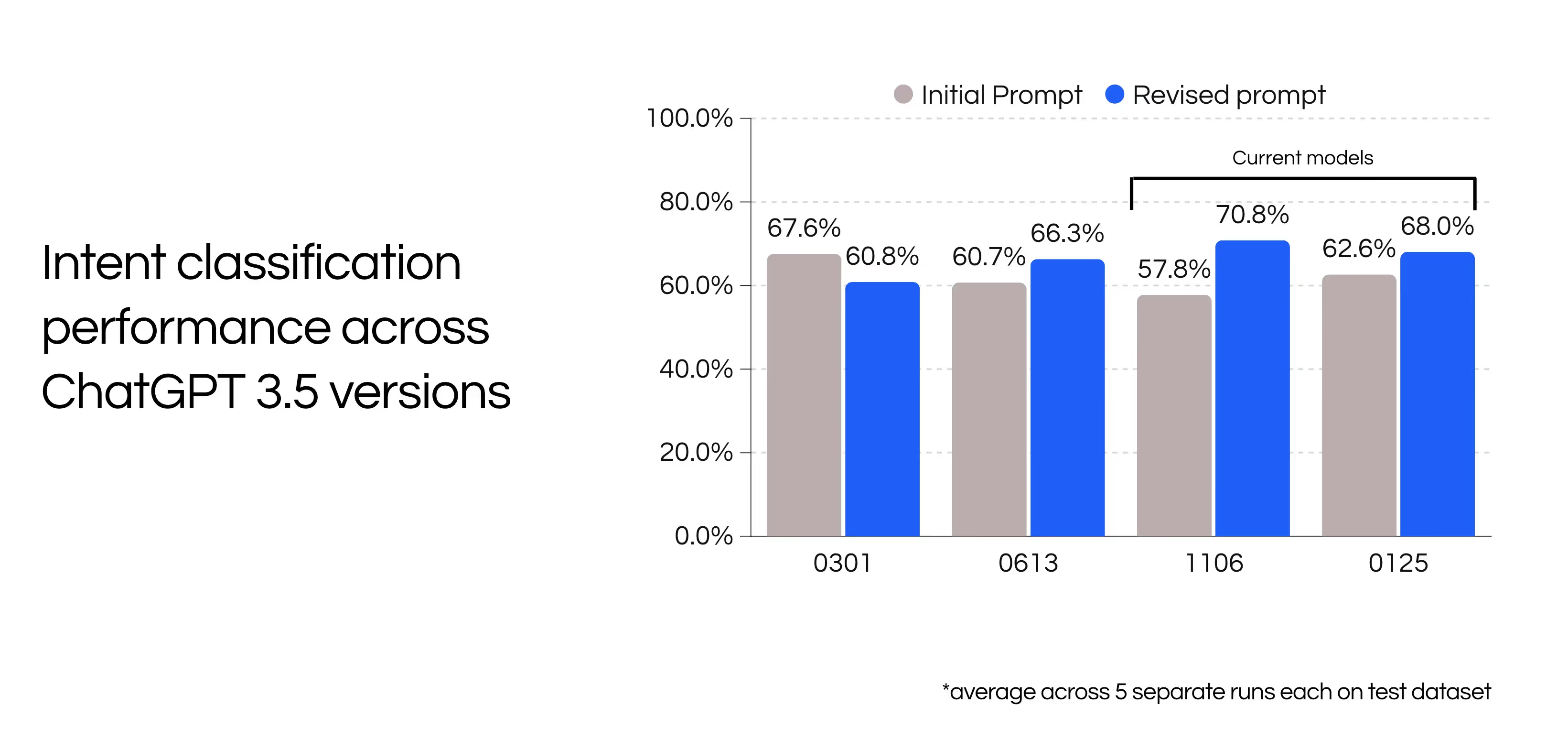

With this degradation in mind, we decided to tweak the original prompt slightly to bring performance back to our initially benchmarked accuracy. At face value, the changes seem minor, but they make a measurable impact.

Methodology

To improve our prompts and reduce version discrepancies, we iterated on one portion of our real-world dataset (the validation set) to raise validation accuracy and find a prompt that brought *gpt-3.5-turbo-1106*'s accuracy into an acceptable range.

After iterating, we ran the final prompt on our evaluation set** exactly once and confirmed that results were much better for gpt-3.5-turbo-1106*** (and, a few months later, for gpt-3.5-turbo-0125 for curiosity's sake).
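The validation/evaluation protocol can be sketched as follows, assuming a simple random split (the post does not say how the portions were chosen) and a caller-supplied, hypothetical `score(prompt, examples)` function that queries the model and returns accuracy. Prompt iteration only ever looks at the validation set; the evaluation set is scored a single time at the end, which keeps the final number from being tuned to the data it reports on.

```python
import random


def split_dataset(examples, n_validation, seed=0):
    """Shuffle a copy once and split it into a validation set (used while
    iterating on prompts) and a held-out evaluation set (scored once)."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_validation], shuffled[n_validation:]


def select_prompt(candidate_prompts, validation, score):
    """Return the candidate prompt with the highest validation accuracy.

    `score(prompt, examples)` is a hypothetical caller-supplied function that
    runs the model on `examples` with `prompt` and returns accuracy.
    """
    return max(candidate_prompts, key=lambda p: score(p, validation))


# Usage sketch (all names hypothetical):
# validation, evaluation = split_dataset(all_examples, n_validation=400)
# best = select_prompt(candidate_prompts, validation, score)
# final_accuracy = score(best, evaluation)  # run once, after iteration stops
```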

Prompt changes

Our final prompt had three main changes:

- We changed the opening line to stress the importance of the task.

- We changed the label list from descriptions and intents to descriptions and actions.

- We added a one-shot example to improve classification of the "None" intent (messages that match no defined intent).
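To make the three changes concrete, here is a hypothetical prompt builder with one flag per change. The actual prompt text is not reproduced here, so every string below is a placeholder, and reading "descriptions and actions" as relabeling each entry's tag from `Intent` to `Action` is our interpretation. Flags like these also make ablations easy to run, since each change can be switched off independently.

```python
def build_prompt(utterance, intents, *, emphasize=True, use_actions=True,
                 one_shot_none=True):
    """Assemble an intent-classification prompt.

    `intents` maps an intent name to its description. All wording is a
    hypothetical placeholder, not the prompt used in the study.
    """
    lines = []
    if emphasize:
        # Change 1: an opening line that stresses the task's importance.
        lines.append("It is very important that you classify the user's message correctly.")
    else:
        lines.append("Classify the user's message into one of the intents below.")

    # Change 2 (our interpretation): tag each description with "Action"
    # instead of "Intent".
    label_word = "Action" if use_actions else "Intent"
    for name, description in intents.items():
        lines.append(f"Description: {description}\n{label_word}: {name}")

    if one_shot_none:
        # Change 3: one worked example that maps to the fallback "None" label.
        lines.append("Example:\nMessage: what's the weather on Mars?\n"
                     f"{label_word}: None")

    # The model is expected to complete the final label line.
    lines.append(f"Message: {utterance}\n{label_word}:")
    return "\n\n".join(lines)
```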

To isolate the contribution of each change, we ran a small ablation study.

Ablations

Our goal with the ablations was to better understand how much each change affected performance.

The ablations generally improved performance on later models, with effects ranging from -10.66% to 13.08%. Bolded results show the best-performing ablation for each model. Interestingly, the individual changes had a larger impact on the later models. However, seemingly small changes produced significant swings, underscoring how brittle prompts are.
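A minimal sketch of how ablation results can be turned into per-model deltas and a best variant (the bolded cell) is below. It assumes accuracies are stored per model and per prompt variant, and that deltas are absolute percentage-point differences from the baseline prompt; the post does not state whether the -10.66% to 13.08% range is absolute or relative. The function names are hypothetical.

```python
def ablation_deltas(results, baseline="baseline"):
    """Convert raw accuracies into percentage-point deltas vs. the baseline.

    `results` maps model -> {variant_name: accuracy in [0, 1]}.
    Returns model -> {variant_name: delta in percentage points, 2 decimals}.
    """
    deltas = {}
    for model, scores in results.items():
        base = scores[baseline]
        deltas[model] = {
            variant: round((acc - base) * 100, 2)
            for variant, acc in scores.items()
            if variant != baseline
        }
    return deltas


def best_variant(deltas):
    """Name of the variant with the largest delta for each model."""
    return {model: max(d, key=d.get) for model, d in deltas.items()}
```

Computing deltas per model, rather than pooling models together, is what exposes the pattern described above: the same change can help one version and hurt another.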

Final results

With all three prompt modifications, intent classification performed much better on newer models. Accuracy decreased for the earliest gpt-3.5-turbo versions but increased across the most recent ones.

Conclusion

LLM versions can make a large difference, even with a few-shot approach! Having good, non-benchmark datasets to validate performance is important, even when onboarding new use cases. In the future, we'll discuss some of our techniques for internal prompt optimization!

The Voiceflow research section covers industry-relevant and applicable research on ML and LLM work in the conversational AI space.

Notes

* Standard deviation across 5 runs was between 0.3% and 0.7% accuracy.

** Our validation set contained ~400 examples and our evaluation set ~200.

*** Our request parameters:

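```python
import openai  # legacy openai<1.0 SDK, which provides openai.ChatCompletion

# `request` is the full classification prompt; `model_version` is the model
# under test, e.g. "gpt-3.5-turbo-0301" or "gpt-3.5-turbo-1106".
messages = [{"role": "user", "content": request}]

response = openai.ChatCompletion.create(
    model=model_version,
    messages=messages,
    temperature=0.1,  # low temperature for near-deterministic outputs
    max_tokens=100,   # enough room for a short intent label
)
```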
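For readers on the current openai Python SDK (version 1.0 and later), where `openai.ChatCompletion.create` was replaced by `client.chat.completions.create`, the same request might look like the sketch below. The `classify` helper is hypothetical; it takes the client as a parameter so it can be exercised without network access.

```python
def classify(client, model_version, request):
    """Send one classification request with the parameters listed in the notes.

    `client` is expected to be an `openai.OpenAI` instance (openai>=1.0 SDK).
    """
    response = client.chat.completions.create(
        model=model_version,  # a dated snapshot, e.g. "gpt-3.5-turbo-1106"
        messages=[{"role": "user", "content": request}],
        temperature=0.1,
        max_tokens=100,
    )
    # The predicted label is the text of the first choice, minus whitespace.
    return response.choices[0].message.content.strip()
```

With a real client this would be called as `classify(OpenAI(), "gpt-3.5-turbo-1106", prompt)` after `from openai import OpenAI`. Passing a dated snapshot name rather than the floating `gpt-3.5-turbo` alias keeps results tied to a specific model version, which is what version-to-version comparisons like these depend on.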

Citations:

[1] L. Chen, M. Zaharia, and J. Zou. How is ChatGPT's behavior changing over time? arXiv preprint arXiv:2307.09009, 2023.

[2] M. Sclar, Y. Choi, Y. Tsvetkov, and A. Suhr. Quantifying language models' sensitivity to spurious features in prompt design or: How I learned to start worrying about prompt formatting. arXiv preprint arXiv:2310.11324, 2023.

Cite this Work

```bibtex
@article{IntentClassifcationChatGPTVersions,
  author       = {Linkov, Denys},
  title        = {How much do ChatGPT versions affect real world performance?},
  year         = {2024},
  month        = {03},
  howpublished = {\url{https://voiceflow.com}},
  url          = {https://www.voiceflow.com/blog/how-much-do-chatgpt-versions-affect-real-world-performance}
}
```
