Chapter 2: An integrated prototype

It was April and we had an internal engineering onsite. One of the demos was the VFNLU, now running in one of our Voiceflow dev environments. Below is the recording of the demo.

The VFNLU was no longer an academic exercise, but a feasible product.

Chapter 3: Feature completeness and Multilingual models

After the April excitement, the reality of preparing the models for production set in. Code was refactored, added and changed to fit our existing systems. We also retrained the NLU several times, incorporating new techniques that boosted performance and adding multilingual support. Our ML platform setup let us A/B test these models, which was handy for doing comparisons. Summer turned into fall, and we began load testing.

Chapter 4: Real time constraints

“This is too slow”

Our ML platform had been built with realtime in mind, with latencies in the high hundreds of milliseconds for most requests. We built it on a queue system, but that technology was not designed for requests that should consistently return within 150 ms. Even though the NLU model itself was very fast (under 20 ms), the end-to-end latency of the system was quite high, especially in the long tail of requests.

We had to redesign how our NLU handles requests, which started a three-month code refactor. It was unexpected, but it was required to achieve the latency that our users expected.
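To make the "long tail" point concrete, here is a minimal sketch of how a latency budget like the 150 ms one above can be checked against measured request times. It is an illustration only: the function names and the sample numbers are hypothetical and are not taken from our platform. It uses a simple nearest-rank percentile, so a system can look healthy at the median while still failing at p99.

```python
import math


def percentile(samples_ms, pct):
    """Nearest-rank percentile of a list of latencies in milliseconds."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    # Nearest-rank: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def within_budget(samples_ms, budget_ms=150.0, pct=99.0):
    """True if the chosen tail percentile fits inside the latency budget."""
    return percentile(samples_ms, pct) <= budget_ms


# Hypothetical measurements: most requests are fast, a few are very slow.
samples = [20.0] * 95 + [400.0] * 5

median = percentile(samples, 50)   # the typical request
tail = percentile(samples, 99)     # the long tail
ok = within_budget(samples)        # does p99 fit in 150 ms?
```

With these made-up samples the median is comfortably inside the budget while the 99th percentile is not, which is the kind of gap that averages hide.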

Chapter 5: Beta testing

In April 2023, almost a year after the initial VFNLU demo, we started rolling out the VFNLU to our free users. It was time to test how well the VFNLU performed in the real world and what bugs we could find. We also began migrating some early customers to help them avoid some of the limitations of our previous OEM NLU implementation. We implemented a feature flag that directed a percentage of users to train and run inference on our NLU. Eventually we completed a full rollout across all our free users, which helped us find some last-minute bugs and deficiencies in the systems.
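A percentage-based feature flag of this kind can be sketched as follows. This is not our actual implementation, just one common way to do it: hash each user ID into a stable bucket from 0 to 99 and enable the new NLU for buckets below the rollout percentage. The function names and the salt string are assumptions made for the example.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "vfnlu-rollout") -> int:
    """Map a user ID to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def use_new_nlu(user_id: str, rollout_percent: int) -> bool:
    """True if this user should train and run inference on the new NLU."""
    return rollout_bucket(user_id) < rollout_percent


# Hypothetical usage: route roughly a quarter of users to the new NLU.
enabled = [uid for uid in (f"user-{i}" for i in range(1000)) if use_new_nlu(uid, 25)]
```

Because the bucket depends only on the user ID and the salt, a user stays on the same side of the flag between requests, and raising the percentage only ever adds users to the rollout.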

Chapter 6: The VFNLU

Today we release the VFNLU. After 18 months of work, the VFNLU is ready, outperforming the most popular NLUs on open source and proprietary benchmarks. We’ve released an open source testing repo so you can test the performance yourself.

With 30+ languages supported and low training time, you can both power and prototype your next conversational assistant.

NLUs in an LLM world

In December 2022, ChatGPT came up in almost every conversation about conversational AI. It was a powerful new model that could solve many tasks, handle many languages and respond to almost any user request. We held an internal hackathon before the winter holidays to add a number of Gen AI features to Voiceflow.

Outside of the Gen AI features, we kept working on the VFNLU. There were a couple of reasons for this:

- All our Enterprise Customers were continuing to use NLUs to power their assistants

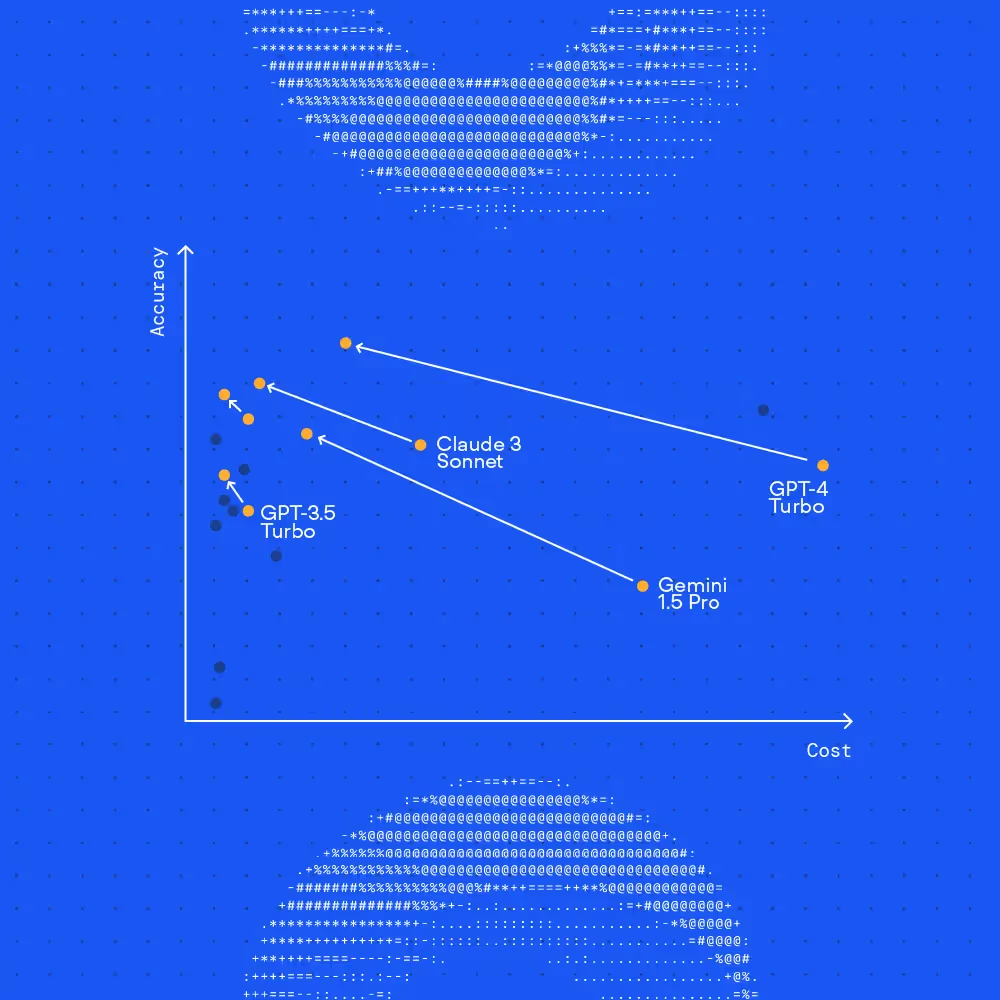

- NLUs are 100-1000x cheaper than LLMs and perform better on large projects

- NLUs don’t hallucinate. They might respond with the wrong intent, but it will be clear when there’s a false positive.

With this in mind, each technology has its place in the conversational AI world. Our goal as a platform is to allow people to experiment and build with both.
