What is Automatic Speech Recognition? An Overview of ASR Technology

Automatic Speech Recognition (ASR) technology has become an integral part of our digital landscape, powering everything from virtual assistants to real-time captioning services. Over the past decade, ASR systems have spread from voice search into contact centers, cars, hospitals, and restaurants.

As ASR technology approaches human-level accuracy, we're witnessing an explosion of applications leveraging this capability to make audio and video content more accessible and actionable. For organizations and developers looking to implement speech recognition solutions, understanding the fundamentals of this technology is essential for making informed decisions about integration and deployment.

These advancements have been made possible by significant breakthroughs in deep learning and artificial intelligence, transforming what was once an experimental technology into a reliable tool that millions of people use every day.

What Is Automatic Speech Recognition (ASR)?

Automatic Speech Recognition, commonly known as ASR, is the technology that enables machines to convert spoken language into written text. Unlike Text-to-Speech (TTS), which transforms text into voice, ASR does the opposite: it captures human speech and transcribes it into text that computers can process and understand.

A Brief History

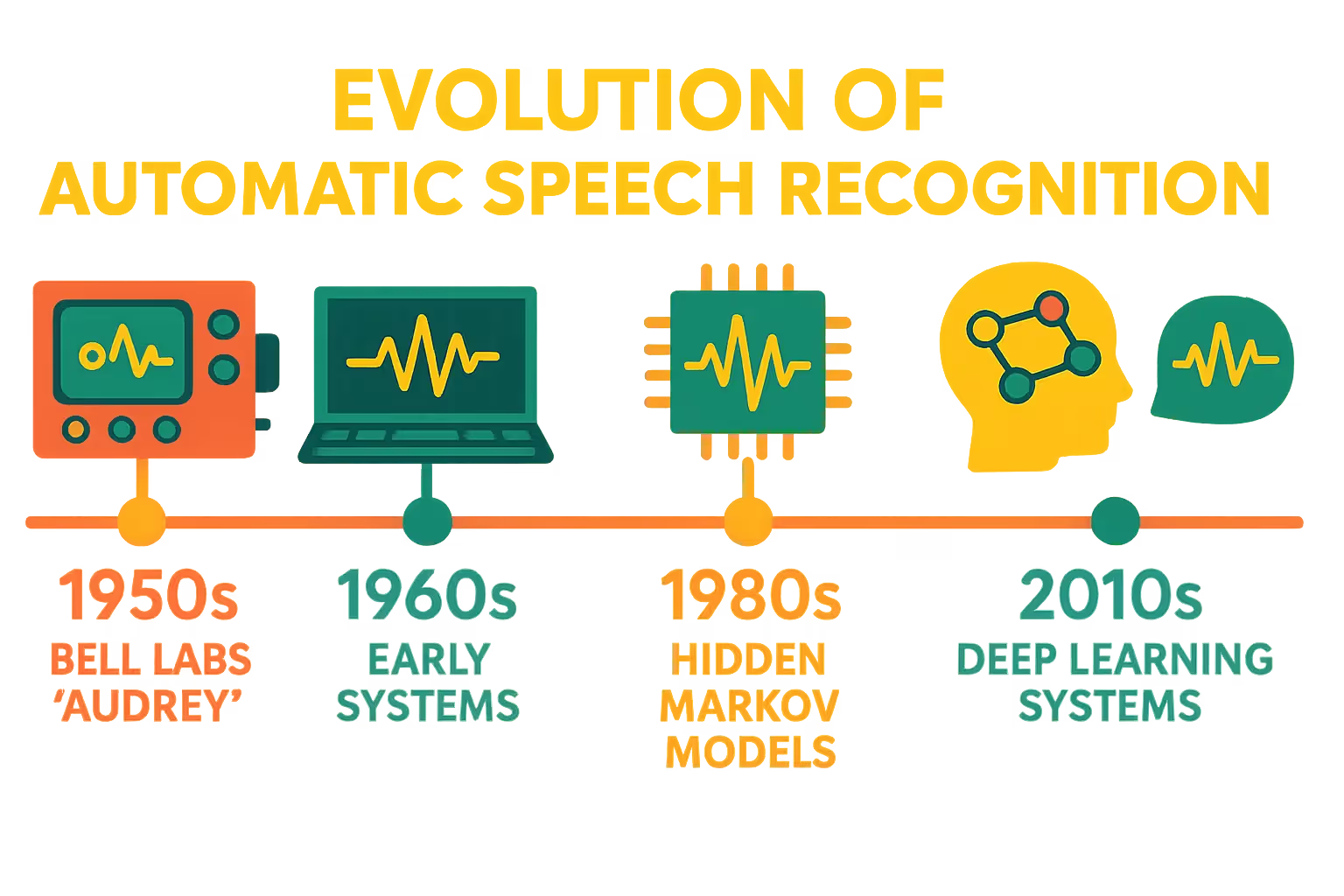

ASR technology has a rich history dating back to 1952 when Bell Labs created "Audrey," a rudimentary system capable of recognizing spoken digits. Over the following decades, the technology evolved gradually, with early systems only able to transcribe basic spoken words like "hello."

The evolution of ASR technology can be traced through several key periods:

- Early Experiments (1950s-1970s): First-generation systems like "Audrey" and IBM's "Shoebox" could recognize very limited vocabularies.

- Hidden Markov Models Era (1980s-2010s): For most of this period, ASR was dominated by classical machine learning approaches built on Hidden Markov Models (HMMs). These systems were the industry standard for many years, but their accuracy eventually plateaued.

- Deep Learning Revolution (2014-Present): The field experienced a renaissance in 2014 when Baidu published its groundbreaking paper, "Deep Speech: Scaling up end-to-end speech recognition." This research demonstrated the power of applying deep learning techniques to speech recognition, pushing accuracy beyond previous limitations and closer to human-level performance.

Alongside these technical advancements, the accessibility of ASR technology has improved dramatically. What once required lengthy, expensive enterprise contracts can now be accessed through simple APIs, democratizing access for developers, startups, and large corporations alike.

How ASR Works

Today, there are two primary approaches to automatic speech recognition: the traditional hybrid approach and the end-to-end deep learning approach. Understanding how these systems work provides valuable insight into their capabilities and limitations.

Traditional Hybrid Approach

The traditional hybrid approach has dominated the field for the past fifteen years and remains widely used despite its limitations. This approach combines three key components:

- Lexicon Model: Describes how words are pronounced phonetically, requiring custom phoneme sets for each language created by expert phoneticians.

- Acoustic Model (AM): Models the acoustic patterns of speech, predicting which sound or phoneme is being spoken at each speech segment. In classical systems these models typically pair Hidden Markov Models (HMMs) with Gaussian Mixture Models (GMMs); later hybrid systems replace the GMMs with neural networks.

- Language Model (LM): Models the statistics of language, learning which sequences of words are most likely to be spoken and predicting which words will follow from current words with associated probabilities.

The process begins with forced alignment: the text transcription of an audio segment is aligned to the audio to determine when specific words occur in the speech, and this aligned data is used to train the acoustic model. At recognition time, the system uses the lexicon, acoustic, and language models together through a decoding process to produce a transcript.

While effective, this approach has several drawbacks. Each model must be trained independently, making the process time- and labor-intensive. The requirement for force-aligned data and custom phonetic sets creates accessibility barriers and often requires significant human expertise to achieve optimal results.
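The way a decoder weighs acoustic evidence against language statistics can be sketched in a few lines. The example below is a simplified illustration, not how a production decoder is built: it skips the lexicon, rescores a short list of candidate transcripts instead of searching over all possible word sequences, and every number in it (the bigram probabilities, the acoustic scores, the `UNSEEN_PROB` fallback) is made up for demonstration.

```python
import math

# Hypothetical bigram probabilities P(word | previous word).
# "<s>" marks the start of a sentence. All values are invented for this sketch.
BIGRAM_PROBS = {
    ("<s>", "recognize"): 0.02,
    ("recognize", "speech"): 0.30,
    ("<s>", "wreck"): 0.005,
    ("wreck", "a"): 0.10,
    ("a", "nice"): 0.05,
    ("nice", "beach"): 0.02,
}
UNSEEN_PROB = 1e-6  # assumed fallback probability for word pairs not in the table


def lm_log_prob(words):
    """Sum the log-probabilities of each word given the word before it."""
    total = 0.0
    for prev, word in zip(["<s>"] + words, words):
        total += math.log(BIGRAM_PROBS.get((prev, word), UNSEEN_PROB))
    return total


def decode(candidates, lm_weight=1.0):
    """Pick the candidate with the best combined acoustic + language score.

    candidates: list of (words, acoustic_log_score) pairs.
    """
    def combined_score(candidate):
        words, acoustic_log_score = candidate
        return acoustic_log_score + lm_weight * lm_log_prob(words)

    return max(candidates, key=combined_score)[0]


# Two transcripts that sound alike; the acoustic scores are nearly tied.
candidates = [
    (["wreck", "a", "nice", "beach"], -10.0),
    (["recognize", "speech"], -10.5),
]

print(decode(candidates))                 # language model breaks the tie
print(decode(candidates, lm_weight=0.0))  # acoustic score alone decides
```

With the language model switched off (`lm_weight=0.0`), the slightly better acoustic score wins; with it on, the more plausible word sequence wins. Tuning that weight is one of the manual steps that makes hybrid systems labor-intensive.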

End-to-End Deep Learning Approach

The end-to-end deep learning approach represents a newer paradigm in ASR technology. With this approach, systems can directly map a sequence of input acoustic features into a sequence of words without requiring force-aligned data or separate lexicon models.

Popular architectures in this category include:

- Connectionist Temporal Classification (CTC): A training objective that lets a neural network learn to map an input sequence to a shorter output sequence without frame-level alignments, by considering every valid alignment between the two.

- Listen, Attend and Spell (LAS): An attention-based sequence-to-sequence model that "listens" to the input sequence, "attends" to different parts of the sequence, and "spells" out the output sequence.

- Recurrent Neural Network Transducers (RNN-T): Extend CTC with a prediction network that conditions each output on the tokens already emitted, which makes them well suited to streaming speech recognition.

According to NVIDIA, "Modern ASR systems leverage neural networks to convert speech directly to text without intermediate phonetic representations", enabling significantly higher accuracy than traditional approaches.

These end-to-end systems offer several advantages over traditional approaches. They're easier to train, require less human labor, and generally achieve higher accuracy. Additionally, the deep learning research community continuously improves these models, pushing accuracy levels closer to human performance with each iteration.
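To make the CTC idea more concrete, here is a minimal sketch of greedy CTC decoding, the simplest way to turn a trained CTC model's per-frame outputs into text. The alphabet, the `_` blank symbol, and the probabilities are assumptions chosen for illustration; real systems use larger vocabularies and often beam search instead of the greedy rule shown here.

```python
from itertools import groupby

BLANK = "_"  # assumed blank symbol for this sketch


def ctc_greedy_decode(frame_probs, alphabet):
    """Decode per-frame probabilities into text with the greedy CTC rule.

    frame_probs: one list of probabilities per audio frame, aligned with alphabet.
    alphabet:    list of symbols, including the blank.
    """
    # 1. Take the most likely symbol in each frame.
    best_path = [
        alphabet[max(range(len(probs)), key=probs.__getitem__)]
        for probs in frame_probs
    ]
    # 2. Merge runs of the same symbol into one.
    merged = [symbol for symbol, _ in groupby(best_path)]
    # 3. Drop blanks; a blank between two identical symbols keeps them separate.
    return "".join(symbol for symbol in merged if symbol != BLANK)


alphabet = [BLANK, "a", "b"]
frame_probs = [
    [0.10, 0.80, 0.10],  # a
    [0.20, 0.70, 0.10],  # a (repeat, merged with the previous frame)
    [0.90, 0.05, 0.05],  # blank
    [0.10, 0.60, 0.30],  # a (kept as a second "a" because of the blank)
    [0.10, 0.20, 0.70],  # b
    [0.20, 0.10, 0.70],  # b (repeat, merged)
]

print(ctc_greedy_decode(frame_probs, alphabet))  # prints "aab"
```

Six input frames collapse to three output characters, which is how a CTC model bridges the length mismatch between audio frames and text without needing force-aligned training data.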

Key Terms and Features

Understanding ASR technology requires familiarity with several key terms and features:

- Acoustic Model: Takes audio waveforms as input and predicts which sounds, characters, or words are present in the waveform.

- Language Model: Helps guide and correct the acoustic model's predictions based on linguistic patterns and probabilities.

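- Word Error Rate (WER): The industry standard measurement for ASR accuracy, calculated as the number of word substitutions, deletions, and insertions needed to turn the machine transcription into a human reference transcription, divided by the number of words in the reference.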

- Speaker Diarization: The process of determining "who spoke when" in an audio recording with multiple speakers, often referred to as speaker labeling.

- Custom Vocabulary: Also known as Word Boost, this feature enhances accuracy for specific keywords or phrases when transcribing audio.

- Sentiment Analysis: The identification of emotional tone (positive, negative, or neutral) in speech segments.

As AssemblyAI explains, these features work together to create robust ASR systems capable of processing diverse speech patterns in various acoustic environments.
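Of the terms above, Word Error Rate is the one most worth being able to compute yourself when comparing providers. The sketch below uses a standard word-level edit distance; the lowercasing and whitespace splitting are simplifying assumptions, and real evaluations usually apply more careful text normalization (punctuation, numbers, contractions) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


human = "the cat sat on the mat"
machine = "the cat sat on a mat"
print(f"WER: {word_error_rate(human, machine):.3f}")  # one substitution in six words
```

Because insertions count as errors, WER can exceed 1.0 when the machine transcript is much longer than the reference, so it is best read as an error rate and not as "100% minus accuracy."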

Common ASR Use Cases

The advancement of ASR technology has led to widespread adoption across numerous industries:

Telephony and Contact Centers

Call tracking systems, cloud phone solutions, and contact centers rely on accurate transcriptions for quality assurance and analytics. Modern ASR systems enable conversation intelligence features, call analytics, speaker diarization, and more, helping businesses improve customer service and extract valuable insights from voice interactions.

Video Platforms

Real-time and asynchronous video captioning has become an industry standard for accessibility. Video editing platforms and content creators use ASR for content categorization, search functionality, and content moderation, making video content more discoverable and accessible.

Media Monitoring

Broadcast TV, podcasts, radio, and other media outlets use Speech-to-Text APIs to quickly and accurately detect brand mentions and topic references, enabling better advertising targeting and content analysis.

Virtual Meetings

Meeting platforms like Zoom, Google Meet, and WebEx integrate ASR technology to provide accurate transcriptions and enable analysis of meeting content, driving key insights and action items from conversations.

Healthcare

Medical professionals use ASR for clinical documentation, reducing administrative burden and allowing more time for patient care. Speech recognition systems can transcribe patient-doctor conversations, helping to create more accurate medical records.

Education

Educational institutions leverage ASR for lecture transcription, making content more accessible to students with hearing impairments and enabling searchable archives of educational material.

Building With ASR

Organizations implementing ASR technology should consider several factors when selecting a solution:

- Accuracy: How well does the system perform across different accents, dialects, and acoustic environments?

- Additional Features: What complementary capabilities are offered, such as speaker diarization, sentiment analysis, or custom vocabulary?

- Support: What level of technical support and documentation is available?

- Pricing and Transparency: Are costs predictable and well-documented?

- Data Security: How is audio data handled, stored, and protected?

- Innovation Pace: Does the provider regularly update their models with the latest research advancements?

Real-world implementations demonstrate the transformative potential of ASR technology. Contact center automation service providers use ASR to power smart transcription and speed up quality assurance processes. Data analysis platforms integrate ASR to reduce time spent analyzing research data. These examples highlight how ASR serves as a foundational component for AI systems processing spoken language.

Challenges of ASR Today

Despite significant progress, ASR technology faces several ongoing challenges:

Accuracy Limitations

While modern ASR systems have made remarkable strides, matching human accuracy in every condition remains elusive. The nuances of human speech, including dialects, slang, and variations in pitch, create edge cases that even the best deep learning models struggle to handle consistently.

Custom Model Misconceptions

Some organizations believe custom speech models will solve accuracy problems. However, outside of very specific use cases (like children's speech), custom models are often less accurate, harder to train, and more expensive than a good end-to-end deep learning model.

Privacy Concerns

Data privacy is another significant challenge. Many ASR providers use customer data to train models without explicit permission. Continuous cloud storage of audio and transcription data also presents potential security risks, especially when that data contains personally identifiable information.

Conclusion & Next Steps

As ASR technology continues to evolve, we can expect greater integration into our everyday lives and more widespread industry applications. Advancements in self-supervised learning systems promise to make ASR models even more accurate and affordable, expanding their use and acceptance.

For organizations considering implementing ASR technology, the key is to clearly define requirements, evaluate providers based on accuracy and features relevant to your use case, and consider the long-term implications for data privacy and security.

The future of ASR looks promising, with ongoing research pushing the boundaries of what's possible. As these systems become more sophisticated, they'll continue to transform how we interact with technology and process spoken information, making our digital world more accessible and our interactions more natural.
