GPT-5 Is Here: What You Need To Know [2026]

OpenAI unveiled GPT-5 on August 7, 2025, presenting it as the long-awaited successor to GPT-4.

The launch was not flawless. A slide packed with confusing benchmarks quickly drew criticism and even became a meme before Sam Altman acknowledged it as a ‘mega chart screw up.’

The misstep, however, did not overshadow the larger story. OpenAI positions GPT-5 as its first model to merge speed and intelligence into a single system. Earlier releases such as GPT-4o, o1, and o3 delivered steady progress but often forced users to choose between quick answers and deeper reasoning. GPT-5 aims to remove that trade-off by adapting on the fly, offering rapid responses to simple queries and more deliberate reasoning for complex ones.

Despite this, GPT-5’s arrival has been met with a mix of excitement and criticism from developers and users alike. This guide provides a detailed breakdown of GPT-5, including its core capabilities, performance, and the nuanced community reception.

What Features Does GPT-5 Include?

GPT-5 is not a single model but a system of different models working together through a real-time “router”. The system automatically selects the best model for a given task, balancing speed and complexity. This includes a fast, default model for routine queries and a more powerful "Thinking" or "Pro" variant for complex, multi-step problems.

What Is the Multi-Model “Routing” System?

GPT-5’s multi-model routing system dynamically allocates compute power, ensuring that a simple question doesn't waste resources while a complex query receives the necessary depth of analysis. The system is composed of several variants:

- GPT-5: The flagship model, a true reasoning engine designed for deep analysis and complex workflows.

- GPT-5 Mini: A faster, lower-cost option with solid reasoning capabilities, ideal for quick, well-defined tasks.

- GPT-5 Nano: An ultra-fast, ultra-low-latency model optimized for real-time and embedded applications where speed is the top priority.

Some of GPT-5’s advancements include:

- Unified Multimodality: GPT-5 can handle multiple data types simultaneously, processing and generating content from text, code, and images in a single interaction. It can, for example, generate a full web application from a simple prompt or analyze a financial chart and then write a detailed report on its trends.

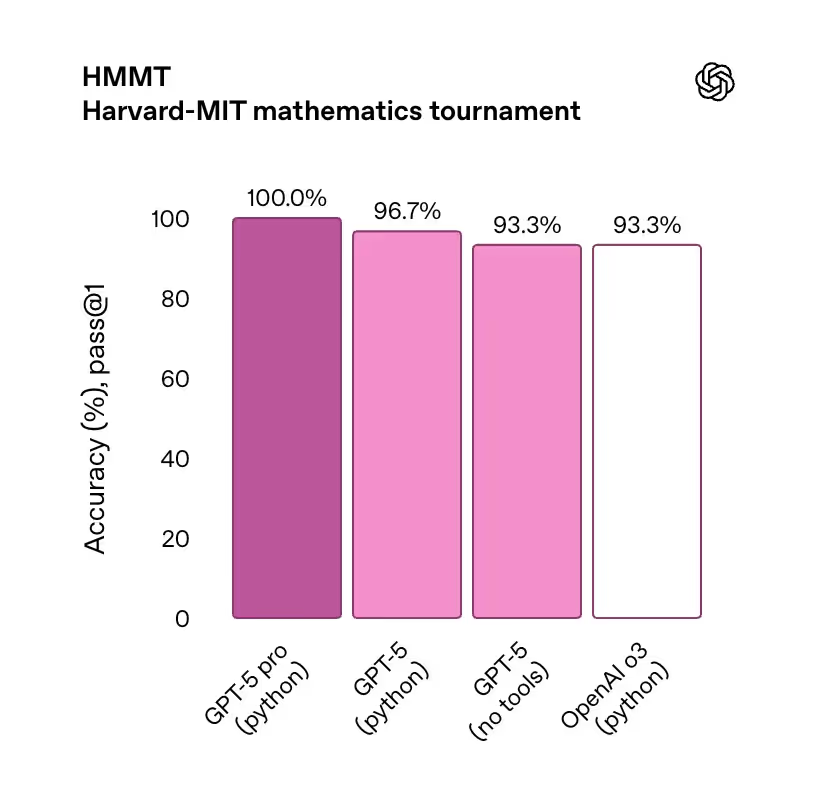

- Enhanced Reasoning: It has shown significant improvements in its ability to perform multi-step logical inference. It scored over 90% on advanced math competitions like the AIME 2025 and HMMT and over 85% on the GPQA Diamond, which consists of PhD-level science questions.

- Superior Coding Performance: OpenAI touts GPT-5 as its "strongest coding model to date," noting its efficiency in using fewer tokens and tool calls to achieve higher accuracy. It has set new state-of-the-art benchmarks on tests like SWE-bench Verified and Aider Polyglot.

- Safety and Hallucination Reduction: The model was trained with a new "safe completions" method aimed at reducing hallucinations. While it doesn't eliminate errors entirely, it is designed to provide more helpful responses, even when the prompt is sensitive or unclear.

GPT-5 vs. GPT-4.5: What Are the Differences?

GPT-4.5 was a short-lived but important model in OpenAI's history. Codenamed "Orion," it was an intermediate model released on February 27, 2025, and subsequently retired with the launch of GPT-5. The core difference between the two lies in their fundamental approach to intelligence:

- GPT-4.5's "Intuition": GPT-4.5 was primarily trained with unsupervised learning to excel at conversational tasks. Its strength was in its natural, fluid, and succinct responses, relying on pattern recognition rather than step-by-step reasoning. This made it feel more "human" for casual chats and creative writing but less reliable for logic-heavy tasks.

- GPT-5's "Reasoning": GPT-5 returns to reasoning and scales it up significantly. Its architecture, with the built-in router, explicitly distinguishes between fast, intuitive responses and deliberate, "chain-of-thought" reasoning. This makes it far more powerful for complex problem-solving in coding, mathematics, and science, where a structured approach is essential.

While GPT-4.5 garnered praise for its conversational style and reduced hallucination rates compared to GPT-4o, its performance on complex reasoning benchmarks was significantly lower than that of GPT-5.

How Much Does GPT-5 Cost?

Access to GPT-5 is tiered based on user type and subscription plan. For developers, it is available via the OpenAI API in three distinct models: gpt-5, gpt-5-mini, and gpt-5-nano, each optimized for different price and performance requirements. The costs are based on token usage.
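To make token-based billing concrete, here is a minimal sketch of a per-request cost estimate. The per-million-token prices are assumptions for illustration and may be out of date, so check OpenAI's pricing page before relying on them. The names `Price`, `PRICES`, and `estimate_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_million: float   # USD per 1M input tokens (assumed value)
    output_per_million: float  # USD per 1M output tokens (assumed value)


# Assumed prices for illustration only; verify against current official pricing.
PRICES = {
    "gpt-5": Price(1.25, 10.00),
    "gpt-5-mini": Price(0.25, 2.00),
    "gpt-5-nano": Price(0.05, 0.40),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request."""
    price = PRICES[model]
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000
```

Running the same token counts through all three entries gives a quick sense of how much a smaller model could save on a high-volume workload.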

For ChatGPT users, the cost is bundled into the monthly fee. The Plus subscription remains at $20 per month and provides access to GPT-5. The most powerful variant, referred to as "GPT-5 Pro" in the user interface, is reserved for higher-priced tiers such as ChatGPT Pro.

GPT-5’s Context Window and Capabilities

The context window is a critical feature, and GPT-5's implementation has been a major point of discussion within the community. The API offers the largest context window, with a maximum of 400,000 tokens per request (272,000 input + 128,000 output). In the ChatGPT interface, the context window depends on the user's subscription: Free Tier users get 8,000 tokens, Plus users get 32,000 tokens, and Pro and Enterprise users have a 128,000-token window.
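As a rough sketch, the limits quoted above can be turned into a pre-flight check before sending a request. The limits come from the figures in this article; the function names and the four-characters-per-token heuristic are assumptions, and a real tokenizer would give more accurate counts.

```python
# API limits as quoted in the article.
MAX_INPUT_TOKENS = 272_000
MAX_OUTPUT_TOKENS = 128_000

# ChatGPT context windows by plan, as quoted in the article.
CHATGPT_WINDOWS = {"free": 8_000, "plus": 32_000, "pro": 128_000}


def rough_token_count(text: str) -> int:
    """Crude estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def fits_api_window(input_tokens: int, requested_output_tokens: int) -> bool:
    """Check a request against the input and output limits separately."""
    return (0 <= input_tokens <= MAX_INPUT_TOKENS
            and 0 <= requested_output_tokens <= MAX_OUTPUT_TOKENS)


def fits_chatgpt_plan(plan: str, conversation_tokens: int) -> bool:
    """Check whether a conversation fits the window for a ChatGPT plan."""
    return conversation_tokens <= CHATGPT_WINDOWS[plan]
```

The input and output limits are checked separately because the 400,000-token total is split between the two rather than shared freely.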

Beyond its core features, GPT-5's capabilities have been tested and verified across a range of applications:

- Advanced Coding: It can generate full-stack applications from high-level prompts, debug complex codebases, and act as a proficient development partner.

- Multimodal Reasoning: It can perform complex tasks that require both visual and textual understanding, such as analyzing a financial chart and then writing a detailed report on its trends.

- Function Calling: The model's improved function calling is especially powerful for business applications, where it can execute domain-specific tasks with high accuracy in fields like telecom and retail.

- Academic and Scientific Problem-Solving: It can solve advanced math and science problems and reason through complex logic puzzles.
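To illustrate the function-calling pattern mentioned above, the sketch below shows the application side: the model asks for a tool by name with JSON arguments, and your code runs it. The shape of the `call` dictionary, the `get_order_status` tool, and the in-memory order data are all hypothetical. No real API is called here.

```python
import json
from typing import Any, Callable


def get_order_status(order_id: str) -> dict[str, Any]:
    """Hypothetical retail tool; a real system would query an order database."""
    orders = {"A100": "shipped", "A101": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}


# Registry of tools the model is allowed to request.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "get_order_status": get_order_status,
}


def dispatch_tool_call(call: dict[str, Any]) -> dict[str, Any]:
    """Run one requested tool call and return an error dict instead of raising."""
    name = call.get("name")
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    try:
        args = json.loads(call.get("arguments", "{}"))
        return TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        return {"error": f"bad arguments: {exc}"}
```

Returning an error dictionary rather than raising lets the application hand the failure back to the model so it can correct its arguments and retry.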

Pros and Cons of GPT-5: A Balanced Review

The release of GPT-5 has generated a wide range of opinions, with users, developers, and journalists all weighing in on its strengths and weaknesses. The consensus is far from unified, with reactions often dependent on a user's specific needs and workflow.

Pros of GPT-5

For those in technical fields, the improvements are undeniable. As reported by Wired, GPT-5 is "a flagship language model," with performance gains that show up in everyday use. The CEO of Cursor, a coding platform, declared on a live stream that GPT-5 is the smartest model his team has ever seen. Its ability to generate full-stack applications and debug complex codebases has been a significant win for developers.

In addition, the new unified system has been praised for simplifying the user experience. Instead of asking users to choose manually between model variants, the system dynamically routes queries. CNET found that while the improvements were "subtle," GPT-5's outputs were "a little sharper, a little more polished and a little more helpful." This is particularly noticeable in data analysis and visual tasks, where GPT-5's presentations are more organized and insightful.

Cons of GPT-5

For many users, particularly those who rely on the model for creative writing or casual conversation, the change in tone was a major source of frustration. The new model, with its emphasis on safety and accuracy, was widely described on Reddit as "robotic" and less spontaneous. As reported by the Economic Times, OpenAI had to quickly update GPT-5's personality to make it "warmer and friendlier" after users complained of its "cold, corporate tone."

Tech critic Ed Zitron was particularly harsh, pointing to the removal of model choices and the new, seemingly arbitrary rate limits for "thinking" messages and arguing that "meaningful functionality… is being completely removed for ChatGPT Plus and Team subscribers." Similarly, despite OpenAI's claims, some critics, including PCMag's Ruben Circelli, argue that GPT-5 is an "insignificant update." He wrote that while it has some upgrades, it "doesn't solve the problems that actually matter" and that he has not "noticed a significant improvement" in areas like hallucination reduction.

Frequently Asked Questions About GPT-5

Is GPT-5 actually better than 4o or is it a downgrade? My creative writing is worse and it feels more robotic.

Benchmark results suggest that GPT-5 is a significant improvement on complex reasoning, problem-solving, and coding, but some users have reported that it feels less creative or “human” than earlier models. The "right" model depends on your use case.

Why is GPT-5 so slow? My wait times are way longer now for simple questions.

GPT-5’s new architecture is designed to "think" longer for complex queries, which can lead to increased latency. In live contact center interactions, speed is non-negotiable. The solution is to use a system that intelligently routes simple questions to a faster, lower-cost model and only engages the deeper GPT-5 reasoning for hard problems.
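An application-level version of that routing idea could look something like the sketch below. It is a deliberately simple heuristic, not OpenAI's router: the keyword list and word-count thresholds are assumptions you would tune against real traffic, and `choose_model` is a hypothetical name.

```python
import re

# Phrases that suggest a query needs deeper reasoning (assumed list).
REASONING_HINTS = ("why", "explain", "compare", "debug",
                   "step by step", "troubleshoot")


def choose_model(query: str) -> str:
    """Pick a model name based on rough signals of query complexity."""
    text = query.lower()
    words = re.findall(r"\w+", text)
    if any(hint in text for hint in REASONING_HINTS) or len(words) > 60:
        return "gpt-5"        # hard or open-ended problems
    if len(words) > 12:
        return "gpt-5-mini"   # well-defined but longer requests
    return "gpt-5-nano"       # short, routine questions
```

In a live contact center, a router like this would sit in front of the model call so that short, routine questions never wait on the slower reasoning path.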

Why does it keep ignoring my custom instructions and context? It feels like it has a terrible memory.

This is a common pain point. While GPT-5 has an expanded context window, user-level issues can still occur. A well-designed agent with a clear workflow and guardrails can enforce context and instructions, ensuring the model stays on track.

Is it true that GPT-5 hallucinates more than older models? I'm getting weird, made-up answers.

This is a widely reported frustration. While internal tests show a significant reduction in hallucination rates, real-world performance can vary. Building a robust agent includes implementing a retrieval-augmented generation (RAG) system that grounds the AI’s responses in your company’s verified knowledge base, minimizing the risk of incorrect or fabricated answers.
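The retrieval step of a RAG system can be sketched in a few lines. This toy version ranks passages by shared words, whereas production systems typically use embeddings and a vector store. The knowledge base contents and every function name here are hypothetical.

```python
import re
from typing import Optional

# Hypothetical verified knowledge base.
KNOWLEDGE_BASE = [
    "Refunds are issued within 5 business days of an approved return.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority phone support.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k passages that share at least one word with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]


def build_grounded_prompt(query: str, documents: list[str]) -> Optional[str]:
    """Build a prompt restricted to retrieved passages, or None if nothing matches."""
    passages = retrieve(query, documents)
    if not passages:
        return None  # caller should escalate or answer "I don't know"
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below. "
            "If the answer is not there, say you do not know.\n"
            f"Context:\n{context}\n\nQuestion: {query}")
```

Returning `None` when nothing matches is the important design choice: the agent declines or escalates rather than letting the model improvise an answer. Word overlap also matches on common words like "is", which is one reason real systems use stronger retrieval.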

They're forcing us to use GPT-5 and removed the old models. What's a good alternative for the things it's bad at?

You’re not stuck with a single vendor. GPT-5 is strong at structured reasoning and tool use, but some users find it too “cold” in tone, too verbose, or less creative than earlier models. If that’s the pain point, there are real alternatives:

- Anthropic Claude 3.5: Often praised for being more human-sounding and conversational. Great at writing with personality, summarizing long docs, and handling nuanced, softer language. Many contact center teams use Claude for empathetic responses.

- Mistral / Mixtral models: Open-weight models that you can run privately. They’re less polished but useful if compliance, cost, or self-hosting is a concern. Good for organizations that don’t want to be locked into OpenAI’s router.

What are the best use cases for GPT-5 in a contact center? Does it actually help with tasks like summarization and sentiment analysis?

Absolutely. Contact centers are where GPT-5’s mix of speed and reasoning really shines. The obvious win is summarization. After a long back-and-forth with a customer, GPT-5 can produce a clean, accurate recap that’s ready for CRM notes or escalation teams. The model’s improved honesty also means fewer “hallucinated” details compared to older versions, which matters when you’re logging compliance-sensitive information.

A quick tip: For a seamless GPT-5 experience in your contact center, consider a platform like Voiceflow. It allows you to integrate GPT-5 with your existing systems and provides the robust tools needed to build, test, and deploy AI agents at scale, all without needing to write a single line of code.

How do you deal with the increased message limits on GPT-5? It feels like I'm wasting my prompts when I have to ask for a fix.

That’s a common frustration. GPT-5 gives you much higher context and message limits, which is great for long conversations, but it also means a single “fix this one thing” request can chew through more tokens than you’d like. The trick is to be specific and incremental. Instead of sending the whole transcript back every time, frame your follow-up as “based on the last answer, change X to Y” so the model only has to reprocess the delta.

Another tactic is to set rules up front. For example, tell GPT-5: “Always keep responses concise, and only rewrite the section I point out.” This way, the model limits itself rather than giving you a fresh essay each time. And if you’re building on top of GPT-5 for production use, you can wrap it in a prompt-management layer that automatically trims context, summarizes history, or re-anchors the conversation so you’re not paying to resend tokens you don’t need. In short, lean on structure and guardrails to get the efficiency benefits of the bigger window without feeling like you’re burning prompts on small tweaks.
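A minimal sketch of the context-trimming part of such a layer is shown below. It assumes messages are dictionaries with `role` and `content` keys and uses a crude four-characters-per-token estimate; both are assumptions, and `trim_history` is a hypothetical helper.

```python
def rough_tokens(text: str) -> int:
    """Crude estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep system messages plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(rough_tokens(m["content"]) for m in system)

    kept = []
    # Walk backwards so the newest turns are kept first.
    for message in reversed(rest):
        cost = rough_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))
```

System messages are always kept so your up-front rules survive trimming, while the oldest turns are dropped first. A fuller version might summarize the dropped turns instead of discarding them.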
