One of the most impactful choices you can make is which LLM (AI model) you use with the Prompt step, the Set step (when setting a variable using a prompt), or the Agent step. You can set the model a step uses in that step’s settings.

Deciding which LLM to use

Voiceflow supports various LLMs, each of which has pros and cons and is priced differently. For example, GPT-4o mini is a smaller, more cost-effective model than GPT-4o, but it lacks some of GPT-4o’s capabilities.

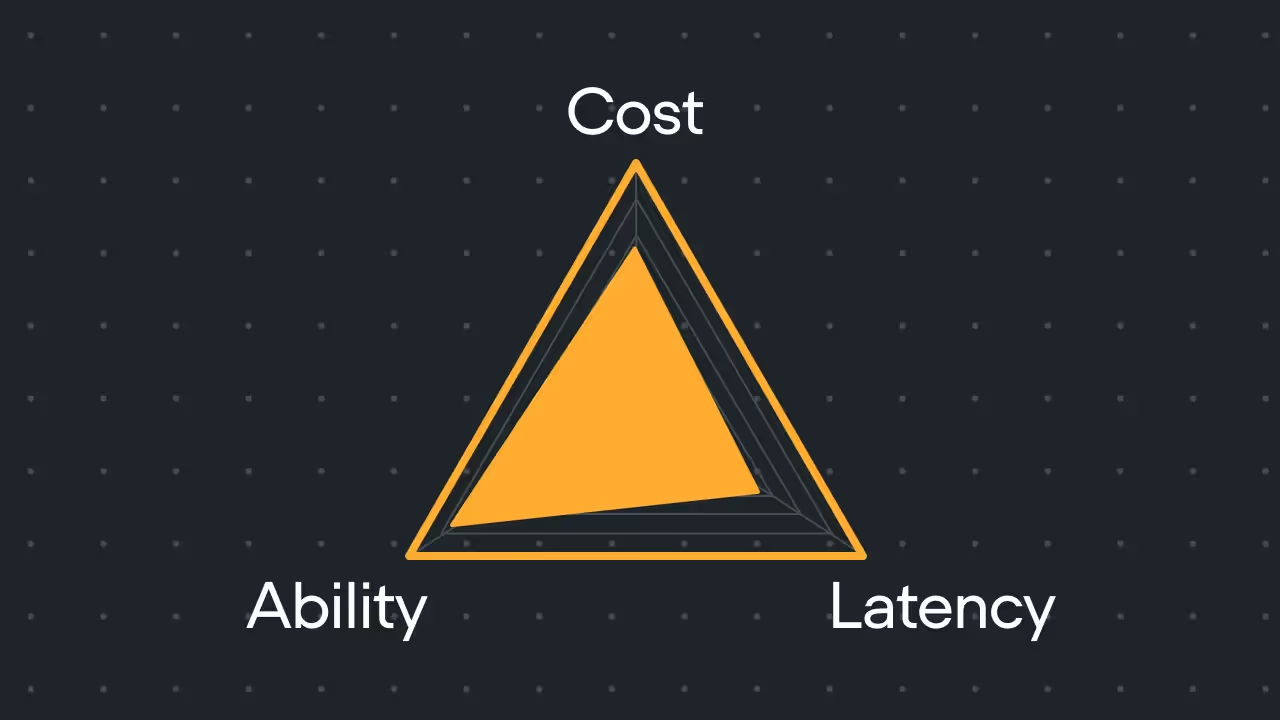

When selecting which LLM to use, you’ll need to balance cost, ability, and latency (especially when building for voice). In general, more capable models cost more, and many respond more slowly. Smaller, more lightweight models generate responses faster and cost less, but are less capable.

While each model has its own pros and cons, we can group them into three main buckets.

- Smaller, cheaper models such as GPT-4o mini and Claude 3.5 Haiku are good for classifying data, responding to basic queries with minimal latency, and content moderation.

- More capable models, like GPT-4o and Claude 3.5 Sonnet, are a better fit for use-cases where the model needs to reason, handle nuanced instructions, generate step-by-step instructions based on data from the Knowledge Base, or handle similar tasks.

- Heavier models, like GPT-4 and Claude 3.5 Opus, are the most capable but most expensive models available on Voiceflow. They can be a good fit for use-cases that need to prioritize answering difficult questions accurately or that require advanced reasoning.
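
The three buckets above can be written down as a simple lookup, which may help keep model choices intentional when you review the steps in an agent. This is a minimal sketch and not a Voiceflow API: the tier names, the task labels, and the `suggest_tier` and `suggest_models` helpers are assumptions made for illustration. Only the model names come from the list above.

```python
# Hypothetical lookup tables; tier names and task labels are illustrative only.
MODEL_TIERS = {
    "small": ["GPT-4o mini", "Claude 3.5 Haiku"],
    "capable": ["GPT-4o", "Claude 3.5 Sonnet"],
    "heavy": ["GPT-4", "Claude 3.5 Opus"],
}

TASK_TO_TIER = {
    "classification": "small",
    "basic_query": "small",
    "content_moderation": "small",
    "nuanced_instructions": "capable",
    "knowledge_base_steps": "capable",
    "advanced_reasoning": "heavy",
}


def suggest_tier(task: str) -> str:
    """Return the suggested tier for a task label.

    Unknown tasks fall back to the middle tier. That default is an
    assumption of this sketch, not a Voiceflow recommendation.
    """
    return TASK_TO_TIER.get(task, "capable")


def suggest_models(task: str) -> list[str]:
    """Return the example models listed for the task's suggested tier."""
    return MODEL_TIERS[suggest_tier(task)]


if __name__ == "__main__":
    for task in ("classification", "knowledge_base_steps", "advanced_reasoning"):
        print(task, "->", suggest_tier(task), suggest_models(task))
```

A table like this is only a starting point. The right model for a given step still depends on testing it with your own prompts.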

Although some legacy models are available on Voiceflow, such as GPT-3.5 Turbo, we don’t generally recommend building agents using them.

Experimenting with models

While optimizing your agent, you might find a step that uses a model poorly suited to its task. For example, you might be using a heavy model such as Claude 3.5 Opus for basic classification in the Set step, where a model like GPT-4o mini would be a better fit. When changing the model a step uses, we recommend always doing the following:

- Test extensively. Each model performs differently, and while some models might be on the same “tier” or have similar names, they may perform very differently in the real world. We recommend making only a few tweaks at a time, testing them extensively inside Voiceflow’s editor, and once you’re confident about their performance, publishing your project to production.

- Monitor your agent’s transcripts. After publishing changes that involve a model change, monitor your agent’s transcripts (accessible through the speech bubble icon in the Voiceflow CMS) and verify that performance hasn’t degraded as a result of your change.

- Continue to iterate. If a model change is successful and reduces your credit usage, try identifying similar prompts that could also be switched to that model. Then, test those changes, launch them, and monitor your agent’s transcripts.
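
One way to make the "test extensively" step more repeatable is to keep a small set of inputs with known-good answers and compare how the current and candidate models score on it. The sketch below assumes a classification-style prompt with a single expected label per input. `run_prompt` is a placeholder for however you obtain a model's output (for example, outputs recorded from test runs in the editor); it is not a Voiceflow function, and the `tolerance` threshold is an arbitrary illustrative value.

```python
from typing import Callable, Iterable, Tuple


def accuracy(run_prompt: Callable[[str], str],
             cases: Iterable[Tuple[str, str]]) -> float:
    """Return the fraction of cases where the output matches the expected label.

    Matching ignores case and surrounding whitespace.
    """
    cases = list(cases)
    if not cases:
        raise ValueError("at least one test case is required")
    correct = sum(
        1 for text, expected in cases
        if run_prompt(text).strip().lower() == expected.strip().lower()
    )
    return correct / len(cases)


def safe_to_switch(current_acc: float, candidate_acc: float,
                   tolerance: float = 0.02) -> bool:
    """Return True if the candidate is no more than `tolerance` worse."""
    return candidate_acc >= current_acc - tolerance


if __name__ == "__main__":
    # Stand-in "models" for demonstration only; replace with real outputs.
    cases = [("Where is my order?", "shipping"), ("I want a refund", "billing")]
    current = lambda text: "billing" if "refund" in text else "shipping"
    candidate = lambda text: "shipping"

    current_acc = accuracy(current, cases)
    candidate_acc = accuracy(candidate, cases)
    print(current_acc, candidate_acc, safe_to_switch(current_acc, candidate_acc))
```

A check like this complements, rather than replaces, reviewing real transcripts after you publish.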

While it can be tempting to quickly update all of the models your agent uses to lower-cost options, we don’t recommend doing this. Different models are better fits for different use-cases. Choosing a model should always be an intentional decision.
