Generative AI: What is it and How Does it Work?

Generative Artificial Intelligence (AI) has emerged as one of the most transformative technologies of our time. Unlike traditional AI systems that primarily analyze existing data, generative AI creates new content that resembles the data it was trained on. This revolutionary capability is rapidly reshaping industries, creative processes, and how humans interact with technology.

At its core, generative AI refers to machine learning models designed to generate novel content such as text, images, audio, video, code, and other forms of data. From writing essays and composing music to designing graphics and generating synthetic data for scientific research, generative AI is expanding the boundaries of what machines can accomplish.

The recent explosion in generative AI applications has been driven by significant advances in neural network architectures, computational resources, and massive datasets. Systems such as OpenAI's ChatGPT and DALL-E, along with Stability AI's Stable Diffusion, have demonstrated capabilities that seemed impossible just a few years ago.

What Is Generative AI?

Generative AI can be defined as a subset of artificial intelligence that uses machine learning models to create new content based on patterns learned from training data. As MIT researchers explain, "Generative AI can be thought of as a machine-learning model that is trained to create new data, rather than making a prediction about a specific dataset."

These systems don't simply recall or recombine existing examples—they learn the underlying distribution of the data and can generate entirely new instances that never existed before but could plausibly belong to the same dataset.

Comparison with Traditional AI

Traditional AI systems, particularly those based on discriminative models, are designed to analyze existing data and make specific predictions or classifications. For example, a traditional AI might determine whether an X-ray shows signs of a tumor or predict if a loan applicant is likely to default.

The fundamental difference lies in their objectives:

- Discriminative models answer: "Given this input, which category does it belong to?"

- Generative models answer: "Given this category or prompt, what are possible examples that would belong to it?"

This distinction makes generative AI particularly suited for creative tasks and applications where producing new content is the goal, rather than classifying existing content.
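The two questions can be contrasted on a toy dataset. The following is a minimal sketch, not a production technique: the categories, the measurements, and the function names `classify` and `generate` are all made up for illustration, and both functions reuse the same per-category statistics purely to keep the example short.

```python
import random
import statistics

# Toy 1-D data: a made-up measurement for two made-up categories.
data = {
    "cat": [23.0, 25.0, 24.0, 26.0, 22.0],
    "dog": [55.0, 60.0, 58.0, 62.0, 57.0],
}

# "Training": estimate a mean and standard deviation per category.
params = {
    label: (statistics.mean(xs), statistics.stdev(xs))
    for label, xs in data.items()
}

def classify(x):
    """Discriminative-style question: which category does x belong to?"""
    # Decision rule: pick the category whose mean is closest to the input.
    return min(params, key=lambda label: abs(x - params[label][0]))

def generate(label, rng):
    """Generative-style question: what is a plausible example of this category?"""
    mean, std = params[label]
    return rng.gauss(mean, std)

rng = random.Random(0)
print(classify(24.5))        # → cat
print(generate("dog", rng))  # a new value that was never in the data
```

The classifier maps an input to a label, while the generator maps a label to a fresh sample drawn from the learned distribution.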

How Generative AI Works

Generative AI systems work in two broad stages: a model first learns patterns from training data, then uses those patterns to produce new content.

Fundamental Principles

At its core, generative AI operates on the principle of learning probability distributions from data. These systems analyze patterns, structures, and relationships within training datasets to understand the underlying statistical properties that characterize the data.

The process typically involves converting inputs into tokens—numerical representations of chunks of data. As explained by MIT researchers, "What all of these approaches have in common is that they convert inputs into a set of tokens, which are numerical representations of chunks of data."
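As a rough illustration of both ideas, the sketch below tokenizes a tiny made-up corpus into integer IDs, counts which token tends to follow which, and samples new text from those counts. Real systems use learned subword tokenizers and neural networks rather than word splitting and bigram counts, so the corpus and the helper names (`sample_next`, `generate`) are assumptions for illustration only.

```python
import random
from collections import defaultdict

corpus = "the cat sat on the mat . the dog sat on the rug ."

# Tokenize: split into chunks (here, whitespace-separated words)
# and map each distinct chunk to an integer ID.
words = corpus.split()
vocab = {word: idx for idx, word in enumerate(sorted(set(words)))}
id_to_word = {idx: word for word, idx in vocab.items()}
token_ids = [vocab[w] for w in words]

# Learn a distribution: count how often each token follows another.
counts = defaultdict(lambda: defaultdict(int))
for prev, nxt in zip(token_ids, token_ids[1:]):
    counts[prev][nxt] += 1

def sample_next(prev_id, rng):
    """Sample the next token ID in proportion to the observed counts."""
    followers = counts[prev_id]
    ids = list(followers)
    weights = [followers[i] for i in ids]
    return rng.choices(ids, weights=weights, k=1)[0]

def generate(start_word, length, rng):
    """Generate up to `length` tokens, starting from `start_word`."""
    ids = [vocab[start_word]]
    for _ in range(length - 1):
        if not counts[ids[-1]]:
            break  # no observed follower for this token
        ids.append(sample_next(ids[-1], rng))
    return " ".join(id_to_word[i] for i in ids)

print(token_ids)
print(generate("the", 6, random.Random(0)))
```

The generated sentence may not appear anywhere in the corpus, yet every adjacent pair of words in it was observed during "training", which is the sense in which the output follows the learned distribution.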

Neural Networks and Deep Learning

Modern generative AI systems are built on deep neural networks—computational structures loosely inspired by the human brain. These networks consist of multiple layers of interconnected nodes that process and transform data as it passes through the system.

The specific architectures used in generative AI vary depending on the application, but they all share the ability to learn complex patterns from data and generate new examples based on that learning. The most successful generative models today contain billions of parameters and require enormous computational resources to train.

Training Process

Training a generative AI model involves exposing it to vast amounts of data from which it learns patterns and relationships. The process generally involves:

- Data Collection and Preparation: Gathering diverse, high-quality datasets relevant to the target domain.

- Model Training: Adjusting the model's parameters through optimization to minimize the difference between its outputs and expected results.

- Validation and Testing: Evaluating the model's performance on unseen data.

- Fine-tuning: Additional training to improve performance for particular applications.
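The first three steps can be sketched on a deliberately tiny problem. This example fits a two-parameter line rather than a generative model, and the synthetic data, learning rate, and step count are all assumptions chosen for illustration. The loop of "measure the error, adjust the parameters" is the same idea that large models apply at far greater scale. Fine-tuning is not shown; it would amount to continuing the same loop on a smaller, task-specific dataset.

```python
import random

rng = random.Random(0)

# 1. Data collection and preparation: synthetic points near y = 2x + 1,
#    shuffled and split into a training set and a held-out set.
xs = [i / 10 for i in range(50)]
points = [(x, 2.0 * x + 1.0 + rng.gauss(0, 0.1)) for x in xs]
rng.shuffle(points)
train, held_out = points[:40], points[40:]

def mse(w, b, data):
    """Mean squared error between predictions w*x + b and targets y."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# 2. Model training: gradient descent adjusts w and b to reduce the error.
w, b, lr = 0.0, 0.0, 0.02
for _ in range(2000):
    grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / len(train)
    grad_b = sum(2 * (w * x + b - y) for x, y in train) / len(train)
    w -= lr * grad_w
    b -= lr * grad_b

# 3. Validation and testing: evaluate on data the model never saw.
print(f"w={w:.3f} b={b:.3f} held-out MSE={mse(w, b, held_out):.4f}")
```

The learned `w` and `b` should land close to the values used to create the data, and a low held-out error suggests the fit generalizes rather than memorizes.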

The scale of data required for modern generative AI is staggering: the models behind ChatGPT were trained on vast corpora of text from the internet, books, articles, and other sources.

Major Generative AI Architectures

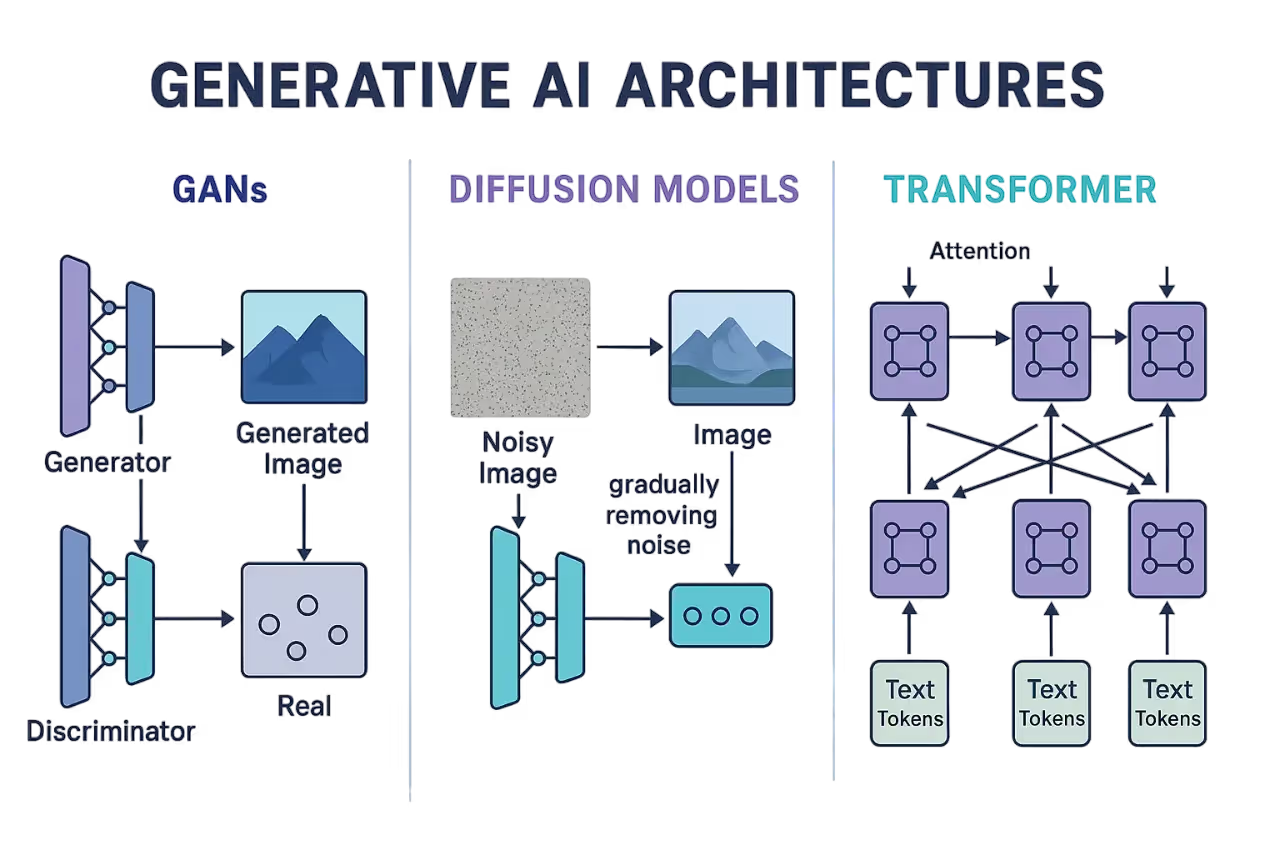

The field of generative AI encompasses several distinct architectural approaches, each with unique strengths and applications.

Generative Adversarial Networks (GANs)

GANs, introduced in 2014, employ a competitive setup between two neural networks:

- A generator network that creates new data samples

- A discriminator network that attempts to distinguish between real data and the generator's output

This adversarial process creates a feedback loop where both networks improve over time. The generator tries to create increasingly realistic outputs to fool the discriminator, while the discriminator becomes better at identifying generated content.

GANs excel at producing high-quality outputs and can generate samples quickly, making them particularly successful in image generation.
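The feedback loop can be made concrete by looking at the two losses involved. The sketch below assumes the discriminator outputs a probability that a sample is real, and uses binary cross-entropy with the commonly used "non-saturating" generator loss. The function names and the example probabilities are illustrative, and no networks are actually trained here.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score near 1, generated near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Discriminator scores (probability of "real") in two hypothetical situations.
real_scores = [0.9, 0.8]
fakes_caught = [0.1, 0.2]   # discriminator spots the generated samples
fakes_fooling = [0.9, 0.8]  # generator has learned to fool it

print(generator_loss(fakes_caught), generator_loss(fakes_fooling))
print(discriminator_loss(real_scores, fakes_caught),
      discriminator_loss(real_scores, fakes_fooling))
```

When the generator fools the discriminator, its own loss drops while the discriminator's loss rises, which is what pushes each network to keep improving against the other.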

Diffusion Models

Diffusion models work through a two-step process:

- Forward diffusion: Gradually adding random noise to training data

- Reverse diffusion: Learning to reverse this noise-addition process to generate new data

This approach allows diffusion models to generate highly detailed and diverse outputs. Stable Diffusion, which powers many popular text-to-image generation tools, is built on a diffusion model architecture.
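The forward (noising) half of the process is simple enough to sketch directly. The example below assumes a DDPM-style formulation with a linear noise schedule, which is one common choice and not necessarily what any particular product uses. The names `make_alpha_bars` and `add_noise` and the toy four-value "image" are illustrative. The reverse half requires a trained neural network that predicts the added noise, so it is not shown.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Forward diffusion in closed form:
    x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * noise."""
    a_bar = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
          for x, e in zip(x0, noise)]
    return xt, noise

alpha_bars = make_alpha_bars(1000)
x0 = [1.0, -1.0, 0.5, 0.0]  # stand-in for pixel values
rng = random.Random(0)
for t in (0, 499, 999):
    xt, _ = add_noise(x0, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in xt])
```

At early steps the sample is almost identical to the original, and by the final step it is close to pure noise. Training teaches a network to predict the `noise` that was added, which is what later allows generation to run the process in reverse.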

Large Language Models (LLMs)

Transformer networks, introduced by Google researchers in 2017, form the foundation of today's large language models. Unlike earlier sequential models, transformers process entire sequences of data simultaneously through a mechanism called self-attention.

This architecture allows transformers to capture long-range dependencies in data, making them exceptionally powerful for understanding and generating text. Models like GPT (Generative Pre-trained Transformer) scale this approach to billions of parameters, enabling sophisticated text generation capabilities.
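A minimal sketch of self-attention, in its scaled dot-product form, is shown below. It uses plain Python lists and feeds the same toy vectors in as queries, keys, and values. In a real transformer these come from learned projections of the token embeddings and there are many attention heads, so treat the input vectors and function names as assumptions for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every position attends to every position."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this position's query and every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is a weighted average of all value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors standing in for a short sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one position draws on every other position, regardless of distance in the sequence, which is how the architecture captures long-range dependencies.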

Applications and Use Cases for Generative AI

Generative AI has rapidly expanded beyond research laboratories to transform numerous industries and creative processes.

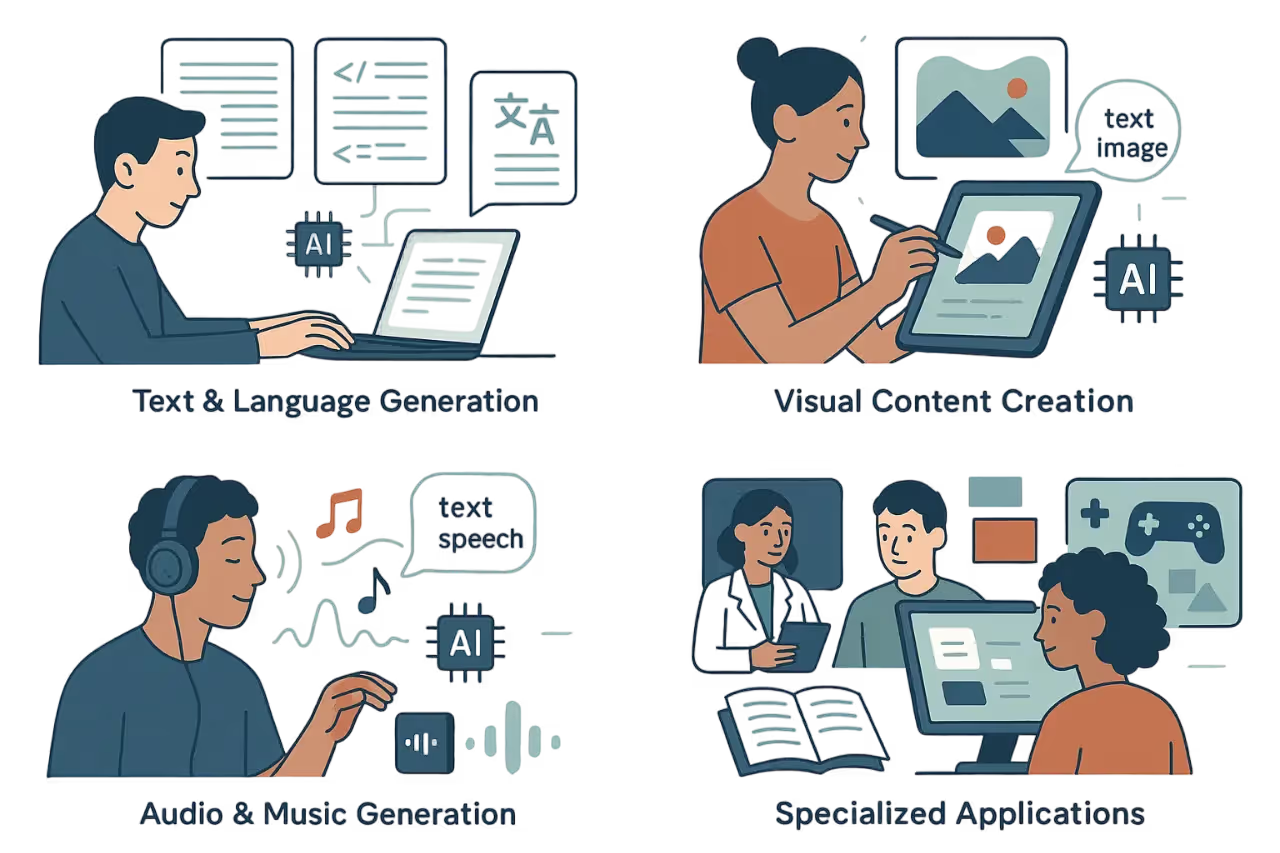

Text and Language Generation

Text generation represents one of the most mature applications of generative AI:

- Content creation: Generate articles, blog posts, marketing copy

- Conversational AI: Power chatbots and virtual assistants

- Code generation: Write and debug computer code

- Translation and summarization: Process and transform text content

As noted by MIT researchers, these language capabilities are transforming how people interact with technology, making complex systems more accessible. For example, platforms like Voiceflow utilize generative AI to help designers create sophisticated conversational experiences without extensive coding knowledge, streamlining the development of voice assistants and chatbots.

Image and Visual Content Creation

Generative AI has revolutionized visual content creation:

- Text-to-image generation: Create images from text descriptions

- Image editing: Modify existing images through inpainting and style transfer

- Design assistance: Aid in creating logos, illustrations, and other graphic elements

These capabilities are democratizing visual creation, allowing people without traditional artistic training to express their ideas visually.

Audio and Music Generation

Generative AI is increasingly capable of creating sophisticated audio content:

- Text-to-speech: Convert written text to natural-sounding speech

- Music composition: Create original musical compositions

- Sound effect generation: Produce environmental sounds for media production

Specialized Industry Applications

Generative AI is creating impact across numerous industries:

Healthcare and Life Sciences

- Drug discovery and medical imaging

- Personalized medicine recommendations

Entertainment and Media

- Game asset creation and film special effects

- Content personalization

Education and Research

- Personalized learning materials

- Research assistance and hypothesis generation

Benefits of Generative AI

The rise of generative AI has opened up a new era of automation, personalization, and creativity. From startups to global enterprises, organizations are beginning to see real returns as they use generative AI across operations, marketing, customer support, and product development. But what exactly are the benefits of this emerging technology?

1. Accelerated Content Creation

One of the most immediate advantages of generative AI tools is speed. Whether you're producing blog posts, ad copy, or customer service scripts, gen AI can generate new content drafts in seconds. This frees up human teams to focus on strategy and refinement instead of starting from scratch.

2. Enhanced Personalization at Scale

Generative AI enables businesses to deliver personalized experiences without manually customizing each interaction. AI agents can tailor product recommendations, email campaigns, and chatbot responses based on user behavior and preferences—something that was previously impractical at scale. As AI use continues to grow, so does the ability to create more meaningful, one-to-one interactions.

3. Improved Decision-Making

By combining generative models with other AI technology like predictive analytics, companies can simulate potential outcomes and generate insights to guide decisions. For instance, in ecommerce, gen AI can be used to predict which product bundles are likely to convert best and automatically generate promotional content around them.

4. Creativity Without Boundaries

From art and music to UX design and storytelling, generative AI has the potential to unlock new levels of creative exploration. Because these systems can mimic the intricacies of human expression using natural language processing and deep learning techniques, they act as powerful collaborators rather than simple tools.

5. Rapid Prototyping and Innovation

Foundation models allow developers to rapidly iterate on product ideas, test new user interfaces, or simulate customer interactions. This makes it easier to validate concepts before investing significant development resources, shortening the path from idea to implementation.

6. Accessibility and Democratization of Expertise

Generative AI lowers the barrier to entry for tasks that once required niche expertise. For example, small teams can now use generative design to produce visuals without a graphic designer, or deploy AI-powered chatbots using platforms like Voiceflow, without writing code. In this way, AI use democratizes innovation.

7. Cost Efficiency and Resource Optimization

By automating repetitive and time-consuming tasks, businesses can cut costs while maintaining or even improving output quality. Whether it's content generation, customer service automation, or synthetic data creation, the efficiency gains are tangible and growing.

In short, the ability to use generative AI doesn't just streamline operations—it transforms them. As foundation model performance continues to improve and more businesses adopt generative AI tools, the line between human and machine creativity will continue to blur in exciting, productive ways.

Evaluating Generative AI Technology

As generative AI continues to advance, evaluation becomes increasingly important.

Quality Metrics

Quality assessment typically involves both objective and subjective measures:

- Fidelity: How closely the generated content resembles real examples

- Coherence: Whether the content is internally consistent and logical

- Relevance: How well the output matches the provided prompt

Evaluation approaches include human assessment, comparative studies, and technical metrics specific to each domain.

Challenges and Limitations

Despite remarkable capabilities, generative AI faces significant challenges:

Technical Challenges

- Enormous computational requirements for training and operation

- Problems with reliability, including "hallucinations" (generating factually incorrect information)

- Difficulty in precisely controlling outputs

Ethical Considerations

- Models can inherit and amplify biases present in training data

- Potential for misuse in creating deepfakes or misinformation

- Privacy concerns related to training data

Copyright and Intellectual Property

- Questions about the legality of using copyrighted material for training

- Unclear ownership of AI-generated content

Future Directions

As generative AI continues to evolve, several promising research directions are emerging:

Emerging Research Areas

- Multimodal Models: Systems that work across multiple types of data

- Improved Control: More fine-grained user control over generated content

- Efficiency Improvements: Making models more accessible through reduced computational requirements

- Enhanced Reasoning: Reducing hallucinations and improving logical consistency

Integration with Other Technologies

Future impact will be amplified through integration with:

- Augmented and virtual reality experiences

- Internet of Things and edge computing

- Blockchain for verifiable creation and ownership

Conclusion

Generative AI represents one of the most significant technological developments of our time. From its origins in simple statistical models to today's sophisticated neural networks, the field has made remarkable progress in its ability to create increasingly realistic and diverse content.

As these technologies become more accessible and integrated into workflows, they are likely to transform not just what we can create but how we approach the creative process itself. By understanding both the possibilities and limitations of generative AI, we can better harness its potential while addressing the challenges it presents.
